Chance News 101

August 14, 2014 to October 13, 2014

Quotations

“It is easy to lie with statistics. It is hard to tell the truth without it.”

Submitted by Margaret Cibes

“Recently, I tried ‘treeing out’ (as the decision buffs put it) the choice [my patient] faced. …. When all the possibilities and consequences were penciled out, my decision tree looked more like a bush.”

Submitted by Margaret Cibes

From Significance, July 2014:

“Access to large databases does not reduce the need to understand why these particular samples are available.” (original emphasis)

“[In the early 1970s] I would be called to the bedside of a woman in labour; and instead of asking myself what was the best treatment for her I would find myself asking ‘Who is this woman’s consultant?’ Because all the consultants had their own favoured treatments and responses, whatever I suggested had to fit in with each of them. Nor was it evidence-based decision-making: it was eminence-based decision-making….” (emphasis added)

Note: British clinician Iain Chalmers created the Cochrane Collaboration in early 1993 to provide medical workers with easily accessible/readable summaries of all clinical trials on various treatments. Hear a half-hour BBC radio interview with Chalmers here.

Submitted by Margaret Cibes

From the "Bits" blog at the nyt.com:

“It’s an absolute myth that you can send an algorithm over raw data and have insights pop up.”

“Current knowledge bases are full of facts, but they are surprisingly knowledge poor.”

Submitted by Bill Peterson

Forsooth

"Gates...found his ratio. 'In general,' he concluded, 'best results are obtained by introducing recitation after devoting about 40 percent of the time to reading. Introducing recitation too early or too late leads to poorer results.' The quickest way to master that Shakespearean sonnet, in other words, is to spend the first third of your time memorizing it and the remaining two-thirds of the time trying to recite it from memory."

How about flunking exams on percents and fractions?

Submitted by Bill Peterson

Submitted by Paul Alper

(More on Scotland's referendum below.)

"[Psychologist Carolyn] Aldwin directs the Center for Healthy Aging Research at Oregon State University. She used data from a huge, long-term study of male veterans enrolled in the VA health care system. She focused in on older men - average age 65 - who said they suffered stress. She found it didn't matter whether it was caused by a major life event or by day-to-day problems. The harmful effect was the same. These stressed-out men had a 32 percent increased risk of dying."

Submitted by Jeanne Albert

Naked Statistics

Margaret Cibes culled the following sampling of quotations from Charles Wheelan's book Naked Statistics: Stripping the Dread from Data.

- “Every fall, several Chicago newspapers and magazines publish a ranking of the ‘best’ high schools in the region. …. Several of the high schools consistently at the top of the rankings are selective enrollment schools …. One of the most important admissions criteria is standardized test scores. So let’s summarize: (1) these schools are being recognized as ‘excellent’ for having students with high test scores; (2) to get into such a school, one must have high test scores. This is the logical equivalent of giving an award to the basketball team for doing such an excellent job of producing tall students.”

- “[T]he most commonly stolen car is not necessarily the kind of car that is most likely to be stolen. A high number of Honda Civics are reported stolen because there are a lot of them on the road; the chances that any individual Honda Civic is stolen … might be quite low. In contrast, even if 99 percent of all Ferraris are stolen, Ferrari would not make the ‘most commonly stolen’ list, because there are not that many of them to steal.”

- “What became known as Meadow’s Law – the idea that one infant death is a tragedy, two are suspicious and three are murder – is based on the notion that if an event is rare, two or more instances of it in the same family are so improbable that they are unlikely to be the result of chance. Sir Roy [Meadow in 1993] told the jury in one of these cases that there was a one in 73m chance that two of the defendant's babies could have died naturally. He got this figure by squaring 8,500—the chance of a single cot death in a non-smoking middle-class family—as one would square six to get the chance of throwing a double six.” [footnote: “The Probability of Injustice”, Economist, January 22, 2004]

- “John Ioannidis, a Greek doctor and epidemiologist, examined forty-nine studies published in three prominent medical journals [footnote: “Contradicted and Initially Stronger Effects in Highly Cited Clinical Research”, JAMA, July 13, 2005] …. Yet one-third of the research was subsequently refuted by later work. …. Dr. Ioannidis estimates that roughly half of the scientific papers published will eventually turn out to be wrong. His research was published in the Journal of the American Medical Association, one of the journals in which the articles he studied had appeared. This does create a certain mind-bending irony. If Dr. Ioannidis’s research is correct, then there is a good chance that his research is wrong.” (emphasis added)
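The arithmetic behind the Meadow's Law item above is easy to check. The sketch below squares the quoted 1-in-8,500 figure, a step that is valid only if the two deaths are independent. The dependence factor is a purely hypothetical number chosen for illustration, not an estimate from any study.

```python
# Odds quoted in the Economist excerpt: 1 in 8,500 for a single cot death.
single_odds = 8_500

# Squaring assumes the two deaths are independent, like two throws of a die.
independent_odds = single_odds ** 2
print(f"Independent deaths: 1 in {independent_odds:,}")

# HYPOTHETICAL: suppose shared genetic or environmental factors make a second
# death 10 times more likely once a first has occurred. This factor is an
# illustrative assumption, not a figure from the source.
dependence_factor = 10
dependent_odds = single_odds * (single_odds / dependence_factor)
print(f"With dependence:    1 in {dependent_odds:,.0f}")
```

Squaring 8,500 gives about 72 million, close to the 73 million quoted; the small gap presumably reflects rounding of the single-death figure. Any positive dependence between the two deaths shrinks the odds by the same factor, which is why the independence assumption matters so much.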

See Chance News 92 for more discussion of the book. Also, we can't resist reproducing this description of Wheelan from this review in the New York Times: "a natural comedian, he is truly the Dave Barry of the coin toss set."

Atlanta cheating scandal

“Are drastic swings in CRCT scores valid?”

by John Perry and Heather Vogell, The Atlanta Journal-Constitution

(Updated July 5, 2011 from original post October 19, 2009)

The trial of school employees allegedly involved in a widespread cheating scandal is underway in Georgia with respect to the Criterion-Referenced Competency Tests (CRCT) in some Atlanta schools. Charges stem from student test results that showed “extraordinary gains or drops in scores” between spring of 2008 and 2009. There are about a dozen defendants in this trial; a number of others have accepted plea agreements or received postponements. (See the formal indictment.)

In West Manor and Peyton Forest elementary schools, for instance, students went from among the bottom performers statewide to among the best over the course of one year [on average]. The odds of making such a leap were less than 1 in a billion.

The Atlanta Journal-Constitution conducted a study of average grade-level scores in reading, math and language arts for students in grades 3 to 5. It found that Peyton Forest School had an average math score that rose from among the lowest in the state for 2008 third-graders to fourth in the state for 2009 fourth-graders (a gain of over 6 standard deviations). It also noted that the fourth-graders had scored at the lowest level on math practice tests administered two months before the actual tests in 2009. (The newspaper’s general methodology is described at the end of the article.)

The study also found that West Manor School’s statewide math ranking rose from 830 for fourth-graders in 2008 to the highest statewide for fifth-graders in 2009. The average score had risen by nearly 90 points year to year (a gain of over 6 standard deviations), compared with the statewide average rise of about 15 points. Similarly, the gain in average score from practice test to actual test in 2009 was highly unusual.
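For a sense of scale, here is a minimal sketch of how rare a gain of 6 standard deviations would be if year-to-year changes in school averages followed a normal distribution. The normality assumption is ours, for illustration only; the newspaper's actual methodology is described in its article and may differ.

```python
import math

def upper_tail(z):
    """P(Z > z) for a standard normal variable Z."""
    return 0.5 * math.erfc(z / math.sqrt(2))

# Probability of a gain more than 6 standard deviations above the mean,
# ASSUMING gains are normally distributed.
p = upper_tail(6.0)
print(f"P(gain > 6 SD) = {p:.2e}, or about 1 in {1 / p:,.0f}")
```

Under this assumption the tail probability comes out just under one in a billion, in line with the odds quoted above.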

A third school’s average writing scores moved in the opposite direction: from the top statewide average score for fourth-graders in 2008 to an extremely low average score for fifth-graders in 2009.

Statistical analyses of test results commonly look at two things: erasure marks, where an unusual number of answers are changed from incorrect to correct, and unexpected response patterns, where students get more hard questions than easy ones correct. State investigators found strong evidence of the former in the Atlanta schools.

A district spokesman has offered some "explanations": some schools might teach their curricula differently, the changes might be random, class sizes might be small, or teacher turnover could be high in Atlanta.

The Atlanta Journal-Constitution also examined test results for 69,000 schools in 49 states and found “high concentrations of suspect scores” in 196 school districts. See these results, along with a more detailed methodology and a list of study consultants with their feedback here. The New Yorker has a detailed story about the people/logistics involved in cheating at an Atlanta middle school:

- Wrong Answer: In an era of high-stakes testing, a struggling school made a shocking choice

- by Rachel Aviv, New Yorker, 21 July 2014

Submitted by Margaret Cibes

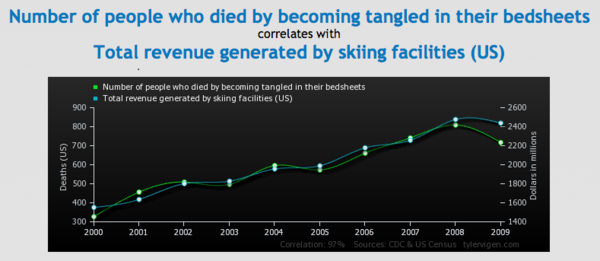

More spurious correlations

Chance News 99 observed that Tyler Vigen's amusing Spurious Correlations project had been referenced on the Rachel Maddow show. Margaret Cibes sent the post below, where we see that Business Insider has also picked up on this.

- “These Hilarious Charts Will Show You That Correlation Doesn’t Mean Causation”

- by Dina Spector, Business Insider, May 9, 2014

- The author provides some sample charts, all of which, and more, can be found at the website Spurious Correlations, by Tyler Vigen, who also provides raw data for each chart, although not always clear sources for the data. There is also a short video by Vigen.

- Here’s one that shows the number of people who died by becoming entangled in their bedsheets versus the total revenue generated by skiing facilities, where r = +0.969724:
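To see how two unrelated series can produce a correlation this close to 1, consider the sketch below. Both series are made-up numbers that simply drift upward over ten years; they are not the data behind the chart. The function names are ours.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def diffs(xs):
    """Year-over-year changes of a series."""
    return [b - a for a, b in zip(xs, xs[1:])]

# MADE-UP yearly series: each has an upward trend plus a little noise.
series_a = [10, 13, 15, 14, 18, 19, 23, 24, 26, 29]
series_b = [2.1, 2.3, 2.8, 2.9, 3.1, 3.6, 3.9, 4.0, 4.6, 4.8]

# Correlation of the raw levels is dominated by the shared trend.
r_levels = pearson_r(series_a, series_b)

# Correlating year-over-year changes removes most of that trend.
r_changes = pearson_r(diffs(series_a), diffs(series_b))

print(f"r for levels:  {r_levels:.3f}")
print(f"r for changes: {r_changes:.3f}")
```

The levels are very highly correlated because both series rise over time, while the year-over-year changes are only weakly related. Comparing changes rather than levels is one simple check on whether a shared trend is doing all the work.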

The hot hand, revisited

Ethan Brown sent the following links to the Isolated Statisticians e-mail list:

- Does the 'hot hand' exist in basketball?, by Ben Cohen, Wall Street Journal, 27 February 2014

- The hot hand: A new approach to an old “fallacy”, by Andrew Bocskocsky, John Ezekowitz, and Carolyn Stein, MIT Sloan Sports Analytics Conference, 28 February-1 March, 2014

The WSJ story is reporting on a new study presented at the MIT conference. The researchers found a "small yet significant hot-hand effect" after examining a data set consisting of some 83,000 shots from last year's NBA games. The analysis was facilitated by the extensive video records now available. In contrast to earlier studies, their model took into account the potential increase of difficulty of successive shots in a streak. For example, "hot" players might attempt longer shots, or draw tighter coverage from defenders. Conditioning on the difficulty of the current shot, the researchers found a 1.4 to 2.8 percentage point improvement in success rate after several previous shots had been made.

See also The hot hand effect gets hot again by Stephanie Kovalchik in a web-exclusive article for Significance.

Discussion

1. On practical vs. statistical significance: How important would such an effect be in a game? Can you think of a way to measure it?

2. It certainly sounds plausible, as suggested in the paper, that a player who feels "hot" might attempt more difficult shots and/or draw more defenders. Do you think the many fans who believe in the hot hand take such things into account? That is, would a player whose shot percentage was constant, but whose shot difficulty was increasing, be popularly regarded as "hot"? What about a player, who, facing increased defensive pressure, passed to teammates receiving less focus from defenders?

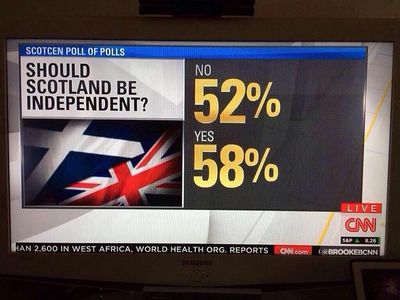

Scotland's independence referendum

There has been a lot of news surrounding the Scottish referendum. Paul Alper sent this:

Scotland’s ‘No’ vote: A loss for pollsters and a win for betting markets

by Justin Wolfers, "The Upshot" blog, New York Times, 19 September 2014

Wolfers reports on how badly the pollsters did on Scotland's referendum: they predicted a very close result, but in the end there was a ten-point difference. He writes, "Typically, asking people who they think will win yields better forecasts, possibly because it leads them to also reflect on the opinions of those around them, and perhaps also because it may yield more honest answers. ...Indeed, in giving their expectations, some respondents may even reflect on whether or not they believe recent polling."

Margaret Cibes also sent links to this and also a pre-election piece by the same author:

Betting markets not budging over poll on Scottish independence

by Justin Wolfers, "The Upshot" blog, New York Times, 8 September 2014

At that time, a new opinion poll had just predicted a win for the independence movement, upsetting British financial markets. Wolfers wrote:

The fast-moving news cycle may further shift public opinion — whether it’s recent promises for greater autonomy if the Scots remain within Britain or the announcement of another royal pregnancy. These broader uncertainties are never included in the so-called “margin of error” that comes with these polls.

But political prediction markets, in which people bet on the likely outcome, are all about evaluating and quantifying the full range of risks. And currently, bettors aren’t buying into the pro-independence hype.

Indeed, the betting markets showed much less volatility than the opinion polls, and in the end had it right.

In The Scotland independence polls were pretty bad (FiveThirtyEight, 19 September 2014), Nate Silver had some musings about what went wrong with the polls. We know that in voluntary response polls, strong (and especially negative) opinions tend to be overrepresented. Moreover, Silver suggests that less passionate voters--even when actually contacted by pollsters--might have been less willing to state a preference. Both these factors would understate support for remaining in the UK. But since the turnout rate for the referendum was very high, he concludes, this support ultimately mattered.

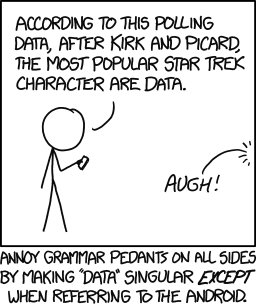

Is data plural?

Priscilla Bremser sent this humorous response from xkcd.com:

For a discussion on use in news sources, see

Data are or data is? "Datablog", Guardian, 8 July 2012. Authorities cited there range from the Wall Street Journal to the OED.

Paul Alper sent the following comments (excerpt of a piece he sent to a British journal, Higher Education Review, in 2004).

...according to a well-known linguist at Stanford University, Geoffrey Nunberg, who wrote this for the American Heritage Dictionary, "Since scientists and researchers think of data as a singular mass entity like information, it is entirely natural that they should have come to talk about it as such and that others should defer to their practice. Sixty percent of the Usage Panel accepts the use of data with a singular verb and pronoun in the sentence 'Once the data is in, we can begin to analyze it.'" A quick look at my own dictionary, Webster's New Collegiate Dictionary (1977, so not all that new) indicates that data is

- n pl but sing or pl in constr

The rub, of course, is that "datum," the supposed singular form, sounds so pretentious. To my astonishment, there is even a "datums" in my dictionary. It is a plural of datum but used almost exclusively in the field of geodesy which my dictionary reveals "is a branch of applied mathematics which determines the exact position of points and the figures and areas of large portions of the earth's surface, the shape and size of the earth, and the variations of terrestrial gravity and magnetism." To the best of my knowledge, I have never met a geodesist but it is my suspicion that the use of datums in place of data is some sort of affectation, an imposition of jargon to keep away or otherwise confuse outsiders.

Testing males and females

Tim Hesterberg sent the following editorial to the Isolated Statisticians list, suggesting it as a topic for discussion and wondering whether a retraction would be forthcoming:

Testing males and females in every medical experiment is a bad idea

by R. Douglas Fields, Scientific American, 19 August 2014

As reported in Labs are told to start including a neglected variable: females (New York Times, 14 May 2014), the National Institutes of Health has called for an end to sex bias in medical studies.

The Scientific American editorial takes exception, claiming that the policy would waste resources. We read:

If scientists must add a second factor—sex—to their experiment, two things happen: the sample size is cut in half, and variation increases. Both reduce the researchers' ability to detect differences between the experimental and control groups. One reason variation increases is the simple fact that males and females are different; these differences increase the range of scores, just as they would if males and females competed together in Olympic weight lifting. The result is that when males and females are mixed together, scientists might fail to detect the beneficial effect of a drug—say, one that reduces blood pressure in males and females equally well.

In the comments section, one reader objected, writing:

The author completely missed the reason for the new rules. It's clear that in many/most cases, the medical results differ depending on which sex is using the med. To avoid identifying these differences (and resulting impacts/efficacy) for money reasons misses the points of the medical test in the first place. Products may work well for men, less well for women.

The preceding quotes seem focused on clinical trials. For more on the policy, which also involves preclinical research, see this commentary from Nature.

Bayesian statistics in the news

Annette Gourgey sent this interesting article to the Isolated Statisticians list.

The odds, continually updated

by F. D. Flam, New York Times, 29 September 2014

This essay focuses on the rise of Bayesian techniques in statistics, noting that increases in computing power have enabled analysis that would have been intractable a generation ago. Among the diverse contemporary statistical applications mentioned here are a successful Coast Guard search for a missing fisherman, the search for planets outside the solar system, and estimates of the age of the universe.

The article actually traces the history of statistics much further back, beginning with John Arbuthnot's analysis of demographic data (citing Stephen Senn's Dicing with Death)! As an early example of a hypothesis test, it discusses Daniel Bernoulli's calculations involving the orbits of planets (which all lie near to a plane rather than appearing random). Columbia University statistician Andrew Gelman is quoted raising concerns over the frequentist approach here. Of course, the article mentions the Reverend Thomas Bayes. Alas, it cannot avoid invoking the infamous Monty Hall problem as an example of a counterintuitive result of Bayes' Theorem! Indeed, a large picture of Monty himself in front of his game show curtain appears front and center!

Chance News has often extracted mangled descriptions of frequentist p-values from news stories. So it is something of a relief to read the following:

The essence of the frequentist technique is to apply probability to data. If you suspect your friend has a weighted coin, for example, and you observe that it came up heads nine times out of 10, a frequentist would calculate the probability of getting such a result with an unweighted coin. The answer (about 1 percent) is not a direct measure of the probability that the coin is weighted; it’s a measure of how improbable the nine-in-10 result is — a piece of information that can be useful in investigating your suspicion.
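The "about 1 percent" figure can be verified with a short binomial calculation. The quotation does not say whether "such a result" means exactly nine heads or nine or more, so this sketch computes both; the variable names are ours.

```python
from math import comb

def binom_pmf(k, n, p):
    """Probability of exactly k successes in n independent trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

n_tosses = 10
p_heads = 0.5  # an unweighted coin

# Exactly nine heads in ten tosses.
p_exactly_9 = binom_pmf(9, n_tosses, p_heads)

# Nine or more heads: the usual one-sided p-value.
p_at_least_9 = sum(binom_pmf(k, n_tosses, p_heads) for k in range(9, n_tosses + 1))

print(f"P(exactly 9 heads)  = {p_exactly_9:.4f}")
print(f"P(at least 9 heads) = {p_at_least_9:.4f}")
```

The two values are 10/1024 and 11/1024, so either reading gives about 1 percent.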

More Marilyn

Having seen yet another mention of Monty Hall, we wonder if Marilyn vos Savant's column is still fanning the flames. In the 31 August 2014 installment we find:

Say that 1 million scratch-off cards are sold. Under the silver-coated area, one card reads, “You win a date with the man or woman of your dreams.” (You get to pick.) All the other cards read, “You lose.” After buying a card for myself, I observe 999,998 other contestants scratch off their cards to read, “You lose.” A 999,999th contestant, his unscratched card still in his back pocket, is playing Frisbee with his dog. Should I offer him a lot of money to swap scratch-off cards with me? —N. B., Gaithersburg, Md.

Marilyn answers, "No, because your chance of winning (now 50-50) wouldn’t improve. Instead, offer him a lot of money to sell his card to you."

Laurie Snell used to wonder if Marilyn was somehow engineering questions to get probability puzzles, or if people were really trying to trick her. Either way, it's hard to miss the parallel here with her original answer to the Monty Hall problem:

Yes; you should switch. The first door has a 1/3 chance of winning, but the second door has a 2/3 chance. Here’s a good way to visualize what happened. Suppose there are a million doors, and you pick door #1. Then the host, who knows what’s behind the doors and will always avoid the one with the prize, opens them all except door #777,777. You’d switch to that door pretty fast, wouldn’t you?

In any case, her 29 September 2014 column contains a request from a reader who, while graciously complimenting her, adds that "it might have been good to have pointed out that the problem as posed is different from the game show paradox even if you didn’t have space to elaborate." Indeed.
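The difference between the two puzzles can be seen in a small simulation. In the game show, the host knows where the prize is and never reveals it; in the scratch-off story, the other cards are revealed by chance and merely happen to be losers. This is a sketch under our own assumptions: it uses three doors (or cards) rather than a million so that the chance event occurs often enough to condition on, and the function name and seed are arbitrary.

```python
import random

def switch_win_rate(n_doors, host_knows, trials, rng):
    """Estimate how often switching wins, given that no prize was revealed."""
    wins = 0
    kept = 0
    for _ in range(trials):
        prize = rng.randrange(n_doors)
        pick = 0  # by symmetry, always choose door 0
        others = list(range(1, n_doors))
        if host_knows:
            # Host leaves the prize door closed; if the pick is already
            # right, a random other door stays closed instead.
            left_closed = prize if prize != pick else rng.choice(others)
        else:
            # Doors are opened at random, so a random other door stays closed.
            left_closed = rng.choice(others)
            # Discard runs in which an opened door revealed the prize.
            if prize not in (pick, left_closed):
                continue
        kept += 1
        if left_closed == prize:
            wins += 1
    return wins / kept

rng = random.Random(2014)
monty_rate = switch_win_rate(3, True, 100_000, rng)
scratch_rate = switch_win_rate(3, False, 100_000, rng)

print(f"Knowing host, switch wins:   {monty_rate:.3f}")
print(f"Random reveals, switch wins: {scratch_rate:.3f}")
```

With a knowing host, switching wins about two-thirds of the time. With random reveals that happen to show only losers, switching wins about half the time, which matches Marilyn's "50-50" answer to the scratch-off question.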

Submitted by Bill Peterson

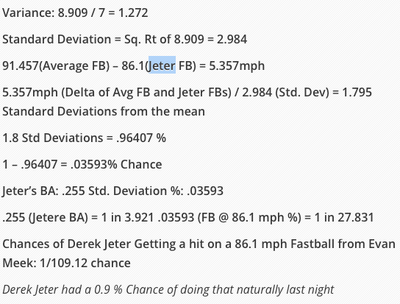

Derek Jeter defies the odds

Peter Doyle forwarded the following story (originally from Bob Drake), which might be an extended Forsooth!

Derek Jeter Truther wants you to know the captain's final home game was rigged

by Dan Carson, Bleacher Report, 26 September 2014

Baseball fans will know that Derek Jeter's Yankee Stadium career ended in storybook fashion with a game-winning hit in his final at-bat. Skeptics were soon suggesting that the opposing pitcher had served up a soft pitch to enable these heroics. But one naysayer went so far as to conduct his own statistical analysis, which is reproduced in the article.

Readers might be amused trying to figure out what is going on here. The vocabulary is quirky, but the central claim seems to be that the pitch in question was 1.8 standard deviations below the pitcher's average velocity. But surely Jeter's batting average does not represent the chance he can hit an easy pitch.
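As a rough check on how unusual such a pitch would be, the sketch below computes the chance of a value at least 1.8 standard deviations below the mean. It assumes pitch velocities are normally distributed, which is our assumption for illustration and not something established in the article.

```python
import math

def lower_tail(z):
    """P(Z < z) for a standard normal variable Z."""
    return 0.5 * math.erfc(-z / math.sqrt(2))

# Chance that a single pitch is at least 1.8 SD slower than average,
# ASSUMING normally distributed velocities.
p_slow = lower_tail(-1.8)
print(f"P(velocity < mean - 1.8 SD) = {p_slow:.4f}")
print(f"Roughly 1 pitch in {1 / p_slow:.0f}")
```

Under that assumption the probability is about 3.6 percent, or roughly one pitch in 28, so a pitcher would throw several such pitches in an ordinary outing.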

(With no tortured statistics, we do know that earlier this year National League pitcher Adam Wainwright basically confessed to giving Jeter some pitches to hit in the All-Star Game.)