Chance News 88

Revision as of 12:43, 7 September 2012

Quotations

Forsooth

“Odds of becoming a top ranked NASCAR driver: 1 in 125 billion”

from an advertisement by Autism Speaks in Sports Illustrated

Impact and retract

As unlikely as it may seem, there are many thousands (!) of health/medical journals published each month. Obviously, some carry more clout than others when it comes to the promotion and reputation of contributing authors. Those journals are said to have high “impact factors” (IF). According to Wikipedia, the de facto standard IF “was devised by Eugene Garfield, the founder of the Institute for Scientific Information (ISI), now part of Thomson Reuters. Impact factors are calculated yearly for those journals that are indexed in Thomson Reuters Journal Citation Reports.”

The calculation of IF is a bit involved:

In a given year, the impact factor of a journal is the average number of citations received per paper published in that journal during the two preceding years. For example, if a journal has an impact factor of 3 in 2008, then its papers published in 2006 and 2007 received 3 citations each on average in 2008. The 2008 impact factor of a journal would be calculated as follows:

- A = the number of times articles published in 2006 and 2007 were cited by indexed journals during 2008.

- B = the total number of "citable items" published by that journal in 2006 and 2007. ("Citable items" are usually articles, reviews, proceedings, or notes; not editorials or Letters-to-the-Editor.)

- 2008 impact factor = A/B.

(Note that 2008 impact factors are actually published in 2009; they cannot be calculated until all of the 2008 publications have been processed by the indexing agency.)
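The two-year window in this definition can be sketched in a few lines of Python. This is a minimal illustration, not Thomson Reuters' actual procedure: the function name `impact_factor` and its dictionary inputs are hypothetical, and the counts in the example are invented so as to reproduce the impact factor of 3 from the example above.

```python
def impact_factor(census_year: int, cites: dict[int, int], citable: dict[int, int]) -> float:
    """Impact factor for `census_year`, following the A/B definition.

    Hypothetical inputs (not a real data format):
      cites[y]   -- citations received during census_year, from indexed
                    journals, by items the journal published in year y
      citable[y] -- number of "citable items" the journal published in year y
    """
    window = (census_year - 2, census_year - 1)  # e.g. 2006 and 2007 for 2008
    a = sum(cites.get(y, 0) for y in window)      # numerator A
    b = sum(citable.get(y, 0) for y in window)    # denominator B
    if b == 0:
        raise ValueError("no citable items in the two-year window")
    return a / b


# Invented counts for a hypothetical journal; the 2005 entries fall
# outside the 2006-2007 window and are ignored.
print(impact_factor(2008, cites={2005: 999, 2006: 420, 2007: 480},
                    citable={2005: 100, 2006: 140, 2007: 160}))  # 3.0
```

Here A = 420 + 480 = 900 and B = 140 + 160 = 300, so the 2008 impact factor is 900/300 = 3.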

Of course, whenever a score is an “A over B” ratio, you can be sure that some journals will be tempted to inflate A and/or lower B to obtain a higher IF.

A journal can adopt editorial policies that increase its impact factor. For example, journals may publish a larger percentage of review articles, which are generally cited more than research reports. Review articles can therefore raise the impact factor of the journal, and review journals will often have the highest impact factors in their respective fields. Journals may also attempt to limit the number of "citable items", i.e., the denominator of the IF equation, either by declining to publish articles (such as case reports in medical journals) that are unlikely to be cited or by altering articles (by not allowing an abstract or bibliography) in hopes that Thomson Scientific will not deem them "citable items". (As a result of negotiations over whether items are "citable", impact factor variations of more than 300% have been observed.)

Then there is “coercive citation,” in which an editor forces an author to add spurious self-citations to an article before the journal will agree to publish it, in order to inflate the journal's impact factor.
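The arithmetic behind these tactics is simple: coercive citation adds to A, while getting items reclassified as non-citable shrinks B. The hypothetical helper below, a sketch with invented numbers, shows how each lever moves the ratio. It assumes, as the reclassification strategy presupposes, that citations to items dropped from B still count toward A.

```python
def gamed_impact_factor(a: int, b: int, reclassified: int = 0, coerced: int = 0) -> float:
    """Hypothetical illustration of gaming IF = A/B.

    a, b          -- honest numerator (citations) and denominator (citable items)
    reclassified  -- citable items the journal gets treated as non-citable;
                     assumed to keep contributing their citations to A
    coerced       -- extra citations to the journal's own recent papers
                     added through coercive citation
    """
    new_b = b - reclassified
    if new_b <= 0:
        raise ValueError("denominator must stay positive")
    return (a + coerced) / new_b


# Invented baseline: 900 citations to 300 citable items, IF = 3.0
for label, kwargs in [("honest", {}),
                      ("shrink B by 60", {"reclassified": 60}),
                      ("add 60 coerced cites", {"coerced": 60}),
                      ("both", {"reclassified": 60, "coerced": 60})]:
    print(f"{label:22s} {gamed_impact_factor(900, 300, **kwargs):.2f}")
```

With these made-up numbers the IF moves from 3.00 to 3.75 (smaller B), 3.20 (larger A), or 4.00 (both). In general, removing k items from B raises the IF more than adding k citations to A whenever the IF is at least 1.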

According to Björn Brembs, the pressure on researchers to publish in high-IF journals is extremely high:

As a scientist today, it is very difficult to find employment if you cannot sport publications in high-ranking journals. In the increasing competition for the coveted spots, it is starting to be difficult to find employment with only few papers in high-ranking journals: a consistent record of ‘high-impact’ publications is required if you want science to be able to put food on your table. Subjective impressions appear to support this intuitive notion: isn’t a lot of great research published in Science and Nature while we so often find horrible work published in little-known journals? Isn’t it a good thing that in times of shrinking budgets we only allow the very best scientists to continue spending taxpayer funds?

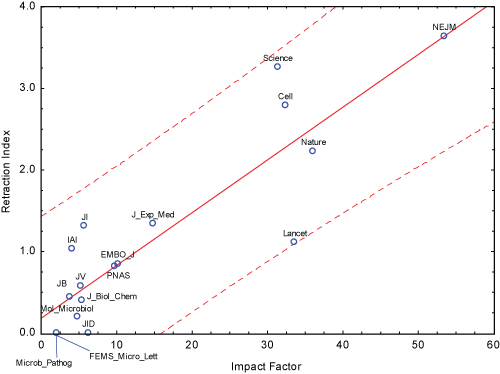

Ah, but Brembs then points out that as plausible as the above argument is regarding the superiority of high IF journals, the data do not support that statement. He refers to an article by Fang and Casadevall from which he obtains this stunning regression graph:

The retraction index is the number of retractions in the journal from 2001 to 2010, multiplied by 1000 and divided by the number of published articles with abstracts. The p-value for the slope is exceedingly small and the coefficient of determination is 0.77. Thus, “at least with the current data, IF indeed seems to be a more reliable predictor of retractions than of actual citations.” He reasons that

If your livelihood depends on this Science/Nature paper, doesn’t the pressure increase to maybe forget this one crucial control experiment, or leave out some data points that don’t quite make the story look so nice? After all, you know your results are solid, it’s only cosmetics which are required to make it a top-notch publication! Of course, in science there never is certainty, so such behavior will decrease the reliability of the scientific reports being published. And indeed, together with the decrease in tenured positions, the number of retractions has increased at about 400-fold the rate of publication increase.
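The retraction index and the summary statistics Brembs quotes (slope and coefficient of determination) can be computed as sketched below. The function names are hypothetical, ordinary least squares is assumed as the fitting method, and the example uses made-up journals rather than the Fang and Casadevall data. For the slope's p-value, a library routine such as `scipy.stats.linregress` reports it directly.

```python
def retraction_index(retractions: int, articles_with_abstracts: int) -> float:
    """Retractions (2001-2010 in the text) x 1000 / articles with abstracts."""
    if articles_with_abstracts <= 0:
        raise ValueError("need at least one article")
    return 1000 * retractions / articles_with_abstracts


def least_squares(x: list[float], y: list[float]) -> tuple[float, float, float]:
    """Ordinary least-squares fit y = intercept + slope * x.

    Returns (slope, intercept, r_squared); for a straight-line fit the
    coefficient of determination equals the squared Pearson correlation.
    """
    n = len(x)
    if n < 2 or n != len(y):
        raise ValueError("need two equal-length lists with at least 2 points")
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    if sxx == 0 or syy == 0:
        raise ValueError("x and y must both vary")
    slope = sxy / sxx
    intercept = my - slope * mx
    r_squared = sxy ** 2 / (sxx * syy)
    return slope, intercept, r_squared


# Made-up journals: (impact factor, retractions, articles with abstracts)
journals = [(2.0, 1, 5000), (5.0, 3, 4000), (10.0, 6, 3000), (30.0, 9, 1500)]
x = [impact for impact, _, _ in journals]
y = [retraction_index(r, n) for _, r, n in journals]
print(least_squares(x, y))
```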

Discussion

1. Obtain a (very) good dictionary to see how the grammatical uses of the word “impact” have differed down through the centuries, with a shift taking place somewhere in the post-World-War-II world. Ask an elderly person for his view of “impact” as a verb, let alone as an adjective. Do the same for the word “contact,” which underwent a grammatical shift in the 1920s.

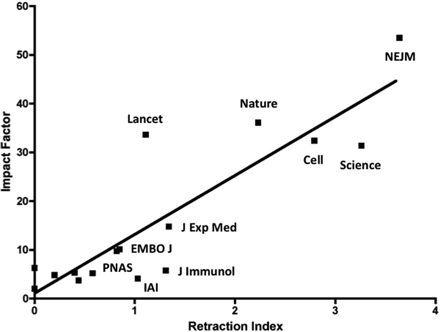

2. The Fang and Casadevall paper had the graph presented this way:

Why is Brembs’ version more suggestive of a cause (IF) and effect (retraction index) relationship?

3. Give a plausibility argument for why many low-IF journals might have a virtually zero retraction index.

4. For an exceedingly interesting interview with Fang and Casadevall, see Carl Zimmer’s NYT article:

Several factors are at play here, scientists say. One may be that because journals are now online, bad papers are simply reaching a wider audience, making it more likely that errors will be spotted. “You can sit at your laptop and pull a lot of different papers together,” Dr. Fang said.

But other forces are more pernicious. To survive professionally, scientists feel the need to publish as many papers as possible, and to get them into high-profile journals. And sometimes they cut corners or even commit misconduct to get there.

Each year, every laboratory produces a new crop of Ph.D.’s, who must compete for a small number of jobs, and the competition is getting fiercer. In 1973, more than half of biologists had a tenure-track job within six years of getting a Ph.D. By 2006 the figure was down to 15 percent.

The article is packed with intriguing discussion points about funding and ends with Fang’s pessimistic/realistic lament:

“When our generation goes away, where is the new generation going to be?” he asked. “All the scientists I know are so anxious about their funding that they don’t make inspiring role models. I heard it from my own kids, who went into art and music respectively. They said, ‘You know, we see you, and you don’t look very happy.’ ”

Submitted by Paul Alper