Chance News 82: Difference between revisions


Revision as of 19:50, 27 February 2012

Quotations

"I focus on the most important form of innumeracy in everyday life, statistical innumeracy--that is, the inability to reason about uncertainties and risk."

Submitted by Bill Peterson

“Those [Madoff investors] who doubted the absence of variability in the reported returns could have saved themselves; instead, most placed blind faith in the average.”

McGraw Hill, 2010, p. 156

See also “The World’s Largest Fund Is a Fraud”, a 2005 report submitted to the SEC by Harry Markopolos, an independent fraud investigator, who had studied the Madoff operation for nine years and had submitted several previously ignored reports to the SEC.

Submitted by Margaret Cibes

“[I]n real labs, you don’t let the rats design the experiment.” [p.73]

“I asked my flight instructor what he thought accounted for the difference [between pilots and doctors, where pilots are more likely to accept computerized advice based on past evidence] …. He told me, ‘It is very simple …. Unlike pilots, doctors don’t go down with their planes.'” [p. 110]

“Some researchers have so comprehensively tortured the data that their datasets become like prisoners who will tell you anything you want to know. Statistical analysis casts a patina of scientific integrity over a study that can obscure the misuse of mistaken assumptions.” [p. 206]

“We have to get students to learn this stuff …. We have to get over this phobia and we have to get over this view that somehow statistics is illiberal. There is this crazy view out there that statistics are right-wing.” [Economist Ben Polak, cited on pp.237-238]

Submitted by Margaret Cibes

Forsooth

“[The] ballad ‘Someone Like you’ … has risen to near-iconic status recently, due in large part to its uncanny power to elicit tears and chills from listeners. …. Last year, [scientists] at McGill University reported that emotionally intense music releases dopamine in the pleasure and reward centers of the brain, similar to the effects of food, sex and drugs. …. Measuring listeners' responses, [the] team found that the number of goose bumps observed correlated with the amount of dopamine released, even when the music was extremely sad.”

Submitted by Margaret Cibes

"The average gift from the Tooth Fairy dropped to $2.10 last year, down 42 cents from $2.52 in 2010, according to no less an authority than the Original Tooth Fairy Poll, which is sponsored by Delta Dental Plans Association. 'But the good news,' their PR folks hasten to add, 'is she’s still visiting nearly 90 percent of homes throughout the United States.' "

Submitted by Paul Alper

"The Original Tooth Fairy Poll® has generally been a good barometer of the economy's overall direction. In fact, the trend in average giving has tracked with movement of the Dow Jones Industrial Average (DJIA) in seven of the past 10 years."

Tooth Fairy Poll® news release, 23 February 2012

Note. Their website also presents a double y-axis plot "demonstrating" this trend.

Submitted by Bill Peterson, with thanks to Paul Alper

Predictioneering

Bruce Bueno de Mesquita has written a fascinating, readable book, The Predictioneer’s Game: Using the Logic of Brazen Self-Interest to See and Shape the Future. A lengthy and generally positive review of Bueno de Mesquita’s views may be found in a NYT article, Can game theory predict when Iran will get the bomb?, by Clive Thompson (12 August 2009).

His game-theory-based track record is indicated by:

For 29 years, Bueno de Mesquita has been developing and honing a computer model that predicts the outcome of any situation in which parties can be described as trying to persuade or coerce one another. Since the early 1980s, C.I.A. officials have hired him to perform more than a thousand predictions; a study by the C.I.A., now declassified, found that Bueno de Mesquita’s predictions “hit the bull’s-eye” twice as often as its own analysts did.

In the introduction to his book, Bueno de Mesquita says, “I have been predicting future events for three decades, often in print before the fact, and mostly getting them right.” Furthermore, “In my experience, government and private business want firm answers. They get plenty of wishy-washy predictions from their staff. They are looking for more than ‘On the one hand this, but on the other hand that’--and I give it to them.”

Discussion

1. In that NYT article may be found a statement shocking to the world of statistics and probability:

Bueno de Mesquita does not express his forecasts in probabilistic terms; he says an event will transpire or it won’t.

Why is this a shocking statement to statisticians and probabilists?

2. In the NYT article is found the following criticism by Stephen Walt, a professor of international affairs at Harvard:

While Bueno de Mesquita has published many predictions in academic journals, the vast majority of his forecasts have been done in secret for corporate or government clients, where no independent academics can verify them. “We have no idea if he’s right 9 times out of 10, or 9 times out of a hundred, or 9 times out of a thousand,” Walt says. Walt also isn’t impressed by Stanley Feder’s C.I.A. study showing Bueno de Mesquita’s 90 percent hit rate. “It’s one midlevel C.I.A. bureaucrat saying, ‘This was a useful tool,’ ” Walt says.

Along these lines, suppose someone avers that his hit rate is 100% when it comes to forecasting a male birth, that is, Prob(male predicted | male) = 1. Why might this be less than impressive?

3. Another critic may be found here regarding a prediction about Libya.

In February 2011 Bueno de Mesquita predicted that the unrest in the Arab world would not spread to such places as Saudi Arabia and ... Libya. Yes, Libya. Watch and listen carefully to the segment starting at 1:51 min into the interview.

Other incorrect predictions made by Bueno de Mesquita are also noted on this web site, including what this author calls “The n factorial debacle” whereby Bueno de Mesquita misconstrues the number of possible interactions between n individuals (game participants). This web site also brings up the issue of the so-called “black swans” when it comes to predicting outcomes of the game. What is a black swan and why does a black swan have an impact on prediction?

4. Brazen Self-Interest and its mathematical logic rest on game theory, which asserts that morality or any other nicety is counterproductive to achieving success. Bueno de Mesquita’s particular computer model starts with data of expert opinion and then somehow via simulation iterates to a conclusion. Comment on the problem of local minima/maxima.

5. Health care is in the news today as it was back in the 1990s. The NYT article notes that “In early 1993, a corporate client asked him to forecast whether the Clinton administration’s health care plan would pass, and he said it would.” The black swan in this instance was Congressman Daniel Rostenkowski who [page 125] “was the key to getting health care legislation through Congress.” Google Daniel Rostenkowski to see why Rostenkowski was a black swan and “contrary to my expectations, nothing passed through Congress.”
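The point behind question 2 can be sketched numerically. The snippet below is only an illustration under stated assumptions: the 51/49 split of births is made up for the example, and `hit_rates` is a hypothetical helper, not anything from the NYT article or the book. It shows that a "forecaster" who always predicts a boy achieves Prob(male predicted | male) = 1 while being no better than the base rate overall.

```python
def hit_rates(predictions, outcomes):
    """Return (hit rate on male births, hit rate on female births, overall accuracy)."""
    male_hits = sum(p == o for p, o in zip(predictions, outcomes) if o == "M")
    female_hits = sum(p == o for p, o in zip(predictions, outcomes) if o == "F")
    n_male = outcomes.count("M")
    n_female = outcomes.count("F")
    overall = (male_hits + female_hits) / len(outcomes)
    return male_hits / n_male, female_hits / n_female, overall


# Hypothetical data: 51 boys and 49 girls (an assumed split, for illustration only).
outcomes = ["M"] * 51 + ["F"] * 49
always_male = ["M"] * len(outcomes)  # predicts a boy every single time

male_rate, female_rate, accuracy = hit_rates(always_male, outcomes)
print(f"hit rate given male:   {male_rate:.2f}")
print(f"hit rate given female: {female_rate:.2f}")
print(f"overall accuracy:      {accuracy:.2f}")
```

A hit rate conditioned on the outcome says little unless it is reported alongside the rate for the other outcome and the base rate.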

Submitted by Paul Alper

Flood of data means flood of job opportunities

The Age of Big Data, Steve Lohr, The New York Times, February 11, 2012.

If you like working with data, you have great career opportunities ahead of you. We are seeing

an explosion of data, Web traffic and social network comments, as well as software and sensors that monitor shipments, suppliers and customers.

This is a big deal for the job market.

A report last year by the McKinsey Global Institute, the research arm of the consulting firm, projected that the United States needs 140,000 to 190,000 more workers with “deep analytical” expertise and 1.5 million more data-literate managers, whether retrained or hired.

The trend extends beyond business: the article cites major changes in political science and public health. It also introduces the term "big data," which it defines as

shorthand for advancing trends in technology that open the door to a new approach to understanding the world and making decisions.

While the article for the most part extols the virtues of data analysis, it also includes some cautionary statements.

Big Data has its perils, to be sure. With huge data sets and fine-grained measurement, statisticians and computer scientists note, there is increased risk of “false discoveries.” The trouble with seeking a meaningful needle in massive haystacks of data, says Trevor Hastie, a statistics professor at Stanford, is that 'many bits of straw look like needles.'

Data analysis is now drawing more attention, in business circles and beyond.

Veteran data analysts tell of friends who were long bored by discussions of their work but now are suddenly curious. “Moneyball” helped, they say, but things have gone way beyond that. “The culture has changed,” says Andrew Gelman, a statistician and political scientist at Columbia University. “There is this idea that numbers and statistics are interesting and fun. It’s cool now.”

Submitted by Steve Simon

Note: The theme for Math Awareness Month this April is Mathematics, Statistics, and the Data Deluge.

Martin Gardner's "mistake"

“Martin Gardner’s Mistake”

by Tanya Khovanova, The College Mathematics Journal, January 2012

Martin Gardner first discussed the following problem in 1959:

Mr. Smith has two children. At least one of them is a boy. What is the probability that both children are boys?

His answer at that time follows:

If Smith has two children, at least one of which is a boy, we have three equally probable cases: boy-boy, boy-girl, girl-boy. In only one case are both children boys, so the probability that both are boys is 1/3.

Gardner later wrote a "correction" to his original solution, indicating that “the answer depends on the procedure by which the information ‘at least one is a boy’ is obtained.”

He suggested two potential procedures.

(i) Pick all the families with two children, one of which is a boy. If Mr. Smith is chosen randomly from this list, then the answer is 1/3.

(ii) Pick a random family with two children; suppose the father is Mr. Smith. Then if the family has two boys, Mr. Smith says, “At least one of them is a boy.” If he has two girls, he says, “At least one of them is a girl.” If he has a boy and a girl he flips a coin to choose one or another of those two sentences. In this case the probability that both children are the same sex is 1/2.
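The two procedures can be compared with a short simulation. This is a minimal sketch, assuming boys and girls are equally likely and the two children's sexes are independent; the function name `simulate`, the trial count, and the seed are choices made for this example, not anything from Gardner or Khovanova. In both cases it estimates the probability that both children are boys given the statement "at least one of them is a boy."

```python
import random


def simulate(trials=200_000, seed=0):
    """Estimate P(both boys | "at least one is a boy") under procedures (i) and (ii)."""
    rng = random.Random(seed)
    listed = listed_bb = 0  # procedure (i): families on the "at least one boy" list
    said = said_bb = 0      # procedure (ii): fathers who say "at least one is a boy"
    for _ in range(trials):
        kids = (rng.choice("BG"), rng.choice("BG"))
        both_boys = kids == ("B", "B")

        # Procedure (i): every family with at least one boy is on the list.
        if "B" in kids:
            listed += 1
            listed_bb += both_boys

        # Procedure (ii): the father's statement depends on the family,
        # with a coin flip when he has one boy and one girl.
        if both_boys:
            says_boy = True
        elif "B" not in kids:
            says_boy = False
        else:
            says_boy = rng.random() < 0.5
        if says_boy:
            said += 1
            said_bb += both_boys
    return listed_bb / listed, said_bb / said


p_i, p_ii = simulate()
print(f"procedure (i):  {p_i:.3f}")
print(f"procedure (ii): {p_ii:.3f}")
```

The estimates should land near 1/3 and 1/2 respectively, which is the sense in which the answer depends on how the information was obtained.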

Khovanova discusses a number of other scenarios related to being given both the sex and the day of the week on which the given child was born. The results may surprise students - and/or probability amateurs like this Chance contributor.

The pdf file containing this article is accessible to all and contains active links to her references, which include two 2010 articles by Keith Devlin, both discussing day-of-the-week scenarios and real-life cultural differences which might impact solutions: “Probability Can Bite” and “The Problem with Word Problems”. (See also CN 64: A probability puzzle and CN 65: Tuesday's child.)

Discussion

Do you think that Gardner made a mistake? Why or why not?

Submitted by Margaret Cibes

Parkinson’s disease and biking

What Parkinson’s teaches us about the brain

by Gretchen Reynolds, New York Times, 12 October 2011

Health care remains a hot-button issue. When it comes to degenerative diseases which affect the increasing number of elderly people, the Holy Grail is to find an inexpensive treatment which has few side effects and actually works. According to this New York Times article, a surprisingly effective treatment for Parkinson’s disease is bike riding on the back of a tandem:

[T]he rider in front had been instructed to pedal at a cadence of about 90 r.p.m. and with higher force output or wattage than the patients had produced on their own. The result was that the riders in back had to pedal harder and faster than was comfortable for them.

After eight weeks of hourlong sessions of forced riding, most of the patients in Dr. [Jay] Alberts’s study showed significant lessening of tremors and better body control, improvements that lingered for up to four weeks after they stopped riding.

Compared to voluntary exercise,

The forced pedaling regimen, on the other hand, did lead to better full-body movement control, prompting Dr. Alberts to conclude that the exercise must be affecting the riders’ brains, as well as their muscles, a theory that was substantiated when he used functional M.R.I. machines to see inside his volunteers’ skulls. The scans showed that, compared with Parkinson’s patients who hadn’t ridden, the tandem cyclists’ brains were more active.

Dr. Alberts suspects that in Parkinson’s patients, the answer may be simple mathematics. More pedal strokes per minute cause more muscle contractions than fewer pedal strokes, which, in consequence, generate more nervous-system messages to the brain. There, he thinks, biochemical reactions occur in response to the messages, and the more messages, the greater the response.

Discussion

1. Part of the mystique of bike riding and Parkinson’s disease is evident from this startling video:

A 58-year-old man with a 10-year history of idiopathic Parkinson's disease presented with an incapacitating freezing of gait. However, the patient's ability to ride a bicycle was remarkably preserved.…

2. The NYT article, like most lay publications, left out all the important numbers that a statistician might be interested in. From a 2009 publication we learn the following:

Ten patients with mild to moderate PD were randomly assigned to complete 8 weeks of FE [forced exercise] or VE [voluntary exercise]. With the assistance of a trainer, patients in the FE group pedaled at a rate 30% greater than their preferred voluntary rate, whereas patients in the VE group pedaled at their preferred rate.

There were five in each group, eight men and two women in total. The output measures of success are quite technical; the paper uses averages and error bars (standard deviation) for each of the two groups for the respective output measures but the publication does not appear to do a t-test of the difference of any output measure. Comment on the small sample sizes and the lack of a t-test of the difference.

3. There is also a 2011 publication in another journal in a different field. Many of the figures and the data appear to be the same as in the 2009 publication.

4. Obviously, not every Parkinson’s patient can obtain unlimited use of a rider in front. Therefore, it is not surprising that there is a need for a special motorized stationary bike where a patient who is handicapped can pedal solo.

The Theracycle motorized exercise bicycle has been identified as one of the few exercise devices able to replicate the 80 – 90 RPM needed for the Cleveland Clinic bike study. Over 150 participants with Parkinson's disease have been chosen to study the effects of forced exercise on the Theracycle exercise bicycle.

A video of the Theracycle may be found here. Testimonials for Theracycle claim that it is useful not only for people who have Parkinson’s disease but also for people who suffer from multiple sclerosis, digestion, stroke, spinal cord injury, arthritis, diabetes and obesity. Why would a 150-patient study be more impressive than a ten-patient study?

Submitted by Paul Alper

Retroactive intercessory prayer

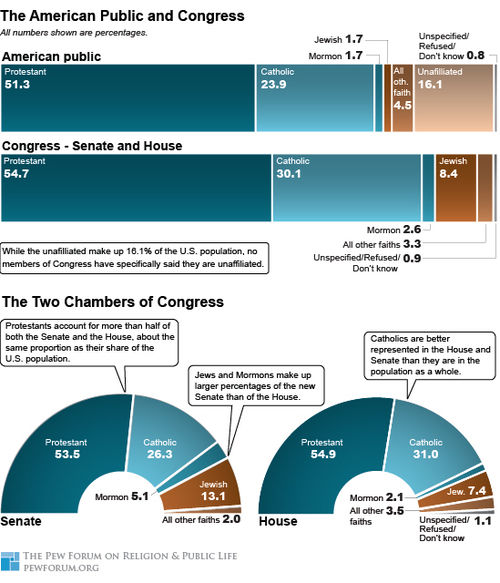

A quick look at the following graph indicates that when it comes to religion, the United States stands apart from other industrial nations; of the 535 members of Congress, only one (Pete Stark of California) openly admits to being an atheist.

People in the U.S. do a great deal of praying for all sorts of things, not the least of which is health. Measuring the power of prayer is a long-standing issue in statistics; Francis Galton, whom many view as the founder of the discipline, studied this at length in the 19th century in an essay entitled Statistical Inquiries Into The Efficacy Of Prayer. He numerically compared (1) the longevity of the royal family to the longevity of its peer group, (2) stillbirths at a religiously run hospital to stillbirths at a state run hospital, and (3) insurance rates offered by Quakers to religious believers and non-believers. In each instance, Galton deemed prayer to have no effect.

Statistics has come a long way since then but so have attempts to prove the efficacy of prayer. In particular, intercessory prayer seems to be the rage. It may be defined as prayer offered for an individual whom the person praying does not know and who is in turn unaware of the person praying. This web site lists 24 modern studies of intercessory prayer and healing.

Included in this list is the remarkable Effects of remote, retroactive intercessory prayer on outcomes in patients with bloodstream infection: randomised controlled trial by Leonard Leibovici. Remarkable because this was a retroactive intercessory study, done 4-10 years after the 3393 patients who suffered bloodstream infection concluded their stay in the hospital. In the paper we read:

Three primary outcomes were compared: the number of deaths in hospital, length of stay in hospital from the day of the first positive blood culture to discharge or death, and duration of fever.

Mortality was 28.1% (475/1691) in the intervention group and 30.2% (514/1702) in the control group (P for difference = .4). The length of stay in hospital and duration of fever were significantly shorter in the intervention group (P = .01 and P = .04, respectively).

Leibovici states, “This intervention is cost effective, probably has no adverse effects.” Further, “The very design of the study assured perfect blinding to patients and medical staff of allocation of patients and even the existence of the trial.” However, “Regrettably, the very same design meant that it was not possible to obtain the informed consent of the patients.” He justifies the retroactive aspect with “As we cannot assume a priori that time is linear, as we perceive it, or that God is limited by a linear time, as we are.”

Discussion

1. Leibovici concludes with “No mechanism known today can account for the effects of remote, retroactive intercessory prayer said for a group of patients with a bloodstream infection. However, the significant results and the flawless design prove that an effect was achieved.” He then refers to the clinical trial conducted by James Lind in 1753 for curing scurvy by lemons and limes; the underlying natural explanation, ascorbic acid, was unknown at the time and would take centuries to articulate. Discuss the similarities and differences between retroactive intercessory prayer and citrus fruits.

2. As to the retroactive intercession, “A list of the first names of the patients in the intervention group was given to a person who said a short prayer for the well being and full recovery of the group as a whole. There was no sham intervention.” Why that last sentence?

3. Deeply held religious belief may not be the only motivating factor for studying prospective or retroactive intercessory prayer. We read here that the Templeton Foundation “funded the largest study of intercessory prayer in medicinal settings in the world with its two million dollar award to Dr. Herbert Benson of the Harvard Medical School.” Aside from funding, what other aspects of prospective or retroactive intercessory prayer make it desirable for studies involving statistics?

4. America’s deepest thinker of the second half of the twentieth century, Tom Lehrer, is on record as saying “Political satire became obsolete when Henry Kissinger was awarded the [1973] Nobel Peace Prize.” Clearly, Leibovici is attempting to prove Lehrer was mistaken. As always with satire, not everyone gets the point. See the article by Olshansky and Dossey entitled “Retroactive prayer: a preposterous hypothesis?”. They argue that quantum mechanics provides a physical basis for retroactive prayer. What prompted them to invoke quantum mechanics?

An article by the physicists Bishop and Stenger reviews some of the bizarre criticism leveled at Leibovici, including that of Olshansky and Dossey. Bishop and Stenger indicate that some of the previous studies purportedly showing the benefits of intercessory prayer were marred by a failure to consider how p-values should be treated when there are multiple comparisons. Bishop and Stenger conclude: “Current scientific theory does not support effectual benefit of prayer distant in space or time.” Why the phrase “prayer distant in space or time”?

5. Of the 24 modern studies of intercessory prayer and healing, 15 were “Studies which found a positive response to prayer.” Presumably, “a positive response to prayer” translates to a p-value less than .05. What does this imply about using the p-value as a metric? How might a Bayesian approach change the conclusions?

By far, of the 15, the largest sample size occurred in Leibovici’s study.

6. Previous Chance News wikis regarding intercessory prayer may be found at Chance News 17, at Chance News 22 and at Chance News 25.
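The multiple-comparisons concern raised by Bishop and Stenger in item 4 can be made concrete with a little arithmetic. The sketch below assumes the tests are independent and that no true effect exists, which is an idealization (outcomes measured on the same patients are unlikely to be independent). The helper names are hypothetical. The three p-values are the ones reported for the three primary outcomes in Leibovici's paper, and the Bonferroni correction is a standard adjustment swapped in for illustration, not one used in the paper.

```python
def familywise_error_rate(alpha, k):
    """Chance of at least one p < alpha among k independent tests with no true effect."""
    return 1 - (1 - alpha) ** k


def bonferroni_survivors(p_values, alpha=0.05):
    """Return the p-values that stay below the Bonferroni threshold alpha / k."""
    threshold = alpha / len(p_values)
    return [p for p in p_values if p < threshold]


# p-values reported for mortality, length of stay, and duration of fever.
reported = [0.4, 0.01, 0.04]

fwer = familywise_error_rate(0.05, len(reported))
survivors = bonferroni_survivors(reported)
print(f"chance of at least one 'significant' result by luck: {fwer:.3f}")
print(f"p-values surviving Bonferroni at 0.05: {survivors}")
```

With three outcomes each tested at .05, the chance of at least one spurious "significant" finding is roughly 14 percent under these assumptions, which is why a single p-value below .05 among several outcomes is weaker evidence than it first appears.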

Submitted by Paul Alper

Probability of a 100-year hurricane

Numbers Rule Your World, by Kaiser Fung, McGraw-Hill, 2010, p. 88

“Terms like ‘100-year hurricane’ create a false sense of security”, by Sheila Grissett, The Times-Picayune, August 4, 2008

“A Report on the Economic Impact of a 1-in-100 Year Hurricane in the State of Florida”, prepared for the Florida Department of Financial Services, March 1, 2010

Fung's book includes this passage:

Statisticians tell us that the concept of a 100-year storm concerns probability not frequency; the yardstick is economic losses, not calendar years. They say the 100-year hurricane is one that wreaks more economic destruction than 99 percent of hurricanes in history. In any given year, the chance is 1 percent that a hurricane making landfall will produce greater losses than 99 percent of past hurricanes. This last statement is often equated to "once in a century." But consider this: if, for each year, the probability of a 100-year hurricane is 1 percent, then over ten years, the chance of seeing one or more 100-year hurricanes must cumulate to more than 1 percent …. Thus, we should be less than surprised when powerful hurricanes strike Florida in succession. The "100-year hurricane" is a misnomer, granting us a false sense of security.

Grissett discusses the concern of government agencies like FEMA about the public’s misinterpretation of the phrase “100-year hurricane.”

The Florida report discusses definitions of the term “100-year hurricane” with respect to insurance companies and their clients.
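Fung's claim that the chance "must cumulate to more than 1 percent" can be checked with a short calculation. This is a minimal sketch, assuming each year independently has a 1 percent chance of a 100-year hurricane and at most one such event is counted per year; both assumptions are open to challenge. The function names are illustrative.

```python
from math import comb


def prob_at_least_one(p, years):
    """P(one or more events in `years` independent years, each with probability p)."""
    return 1 - (1 - p) ** years


def prob_exactly(k, p, years):
    """Binomial probability of exactly k event-years out of `years`."""
    return comb(years, k) * p**k * (1 - p) ** (years - k)


p = 0.01
print(f"at least one in 10 years:  {prob_at_least_one(p, 10):.4f}")
print(f"at least one in 100 years: {prob_at_least_one(p, 100):.4f}")
print(f"exactly one in 100 years:  {prob_exactly(1, p, 100):.4f}")
```

Under these assumptions the 10-year figure is a little under 10 percent, the 100-year figure is about 63 percent rather than a certainty, and "exactly one per century" happens only about 37 percent of the time.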

Discussion

Answer the questions based on the given definition of “100-year hurricane.”

1. What is the probability of one or more “100-year hurricanes” during a random 10-year period? Would you be "less than surprised" if one or more of these hurricanes occurred during a random 10-year period?

2. What is the probability of one or more of these hurricanes during a random 100-year period? Would you be surprised if one or more of these hurricanes occurred during a random 100-year period?

3. What is the probability of exactly one “100-year hurricane” during a random 100-year period? Is it appropriate to think of a 100-year hurricane as occurring “once in a century”?

4. How would you describe a 100-year hurricane using the term “percentile”? Specify the scale of the distribution of hurricane losses.

5. Do you think that we can assume that “100-year hurricanes” are independent events? Are there any other factors/assumptions that might affect your reliance on the results of your calculations?

Submitted by Margaret Cibes

Selectivity in drug testing

Numbers Rule Your World

by Kaiser Fung, McGraw-Hill, 2010, pp. 106-107

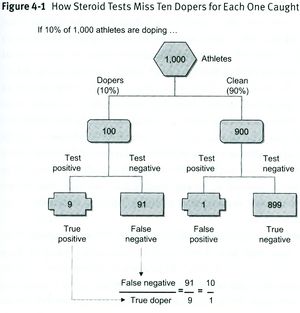

The following text and chart are excerpted from a chapter illustrating the trade-off between false positives and false negatives in testing. The author feels that false negatives are the real problem.

In particular, pay attention to these two numbers: the proportion of samples declared positive by drug-testing laboratories and the proportion of athletes believed to be using steroids. Of thousands of tests conducted each year, typically 1 percent of samples are declared positive. Therefore, if 10 percent of athletes are drug cheats, then the vast majority – at least 9 percent of them – would have tested negative, and they would have been false negatives.
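The arithmetic in this passage can be sketched as follows. The chart's actual rates are not reproduced here, so the second call uses made-up rates purely for illustration. The parameter `selectivity` follows this item's wording for the share of clean athletes correctly cleared (more commonly called specificity), and both function names are hypothetical.

```python
def min_false_negative_share(prevalence, positive_rate):
    """Lower bound on the share of ALL athletes who dope yet test negative.

    Even if every positive sample came from a doper, at most `positive_rate`
    of athletes are caught, so at least prevalence - positive_rate are missed.
    """
    return max(prevalence - positive_rate, 0.0)


def confusion_counts(n, prevalence, sensitivity, selectivity):
    """Expected counts of true/false positives and negatives among n athletes."""
    dopers = n * prevalence
    cleans = n - dopers
    true_pos = dopers * sensitivity   # dopers correctly flagged
    true_neg = cleans * selectivity   # clean athletes correctly cleared
    return {
        "true_pos": true_pos,
        "false_neg": dopers - true_pos,
        "true_neg": true_neg,
        "false_pos": cleans - true_neg,
    }


# The excerpt's scenario: 10% of athletes dope, 1% of samples are positive.
bound = min_false_negative_share(0.10, 0.01)
print(f"at least {bound:.0%} of all athletes are false negatives")

# Illustrative rates only (not taken from the chart).
counts = confusion_counts(1000, prevalence=0.10, sensitivity=0.90, selectivity=0.90)
print({name: round(value) for name, value in counts.items()})
```

Note that the 9 percent lower bound is a share of all athletes; expressed as a share of the dopers alone, it corresponds to at least 90 percent of them testing negative.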

Discussion

1. What number in the last sentence of the text is incorrect according to the chart? (Maybe it's a typo.)

2. Based on the chart, which group - "dopers" or "cleans" - does this hypothetical test do a better job of identifying? That is, what are the selectivity and sensitivity rates?

3. Would you advise using this test on a widespread basis? What factor(s) might influence your decision?

4. If both the selectivity and sensitivity rates had been 90%, what would the chart have looked like? How, if at all, would your responses to questions 2 and 3 have changed?

5. For what selectivity rates would false negatives present a greater problem than true positives? Would you want to use a test with those selectivity rates? Why or why not?

Submitted by Margaret Cibes

Retroactive intercessory prayer—II

With reference to the post earlier in this issue, Professor Leibovici was kind enough to respond to a request concerning the reactions to his paper Effects of remote, retroactive intercessory prayer on outcomes in patients with bloodstream infection which is discussed there. Below is a slightly edited version of his response:

It was written after a heated argument with Iain Chalmers, the founding father of the Cochrane collaboration, on whether the results of a perfect RCT [randomized clinical trial] counts if the intervention has no biological plausibility whatsoever. At the same time the Archives of Internal Medicine published an RCT on [another] remote prayer [study] that was infuriating from a number of reasons: no plausibility, the inconsistency of believing in God and testing God in a RCT, and the belief that God can be so amoral as to allow John to die only because he was randomized to the non prayer group.

I was sure that it will be recognized as a satire if only because of the references (one of them to a piece by Borges [dealing with the fallacy of time]). It [i.e., Leibovici’s study] was [however, recognized] by some [as satire]– it is used as an example and exercise in several courses on statistics and epidemiology. And it wasn’t by many. There are some scathing pieces [i.e., responses] on the folly of the trial (I didn’t mind them, although they didn’t speak too well of the intelligence of their writers). I felt embarrassed because some people quoted it as a sign of God’s powers, and I didn’t intend it as a parody on honest belief.

It achieved one good purpose – it was helpful in undermining a Cochrane review on remote prayer (they included it in the first version of the review). And another – it’s not a bad exercise for students.

Disappointingly, satire, reductio ad absurdum, are bad rhetorical tools when addressing large audiences.

Leibovici further directed Chance News to his comments from 2002 on the response to his study:

The purpose of the BMJ [British Medical Journal] piece was to ask the reader the following question: Given a 'study' that looks methodologically correct, but tests something that is completely out of our frame (or model) of the physical world (e.g., retroactive intervention or badly distilled water for asthma) would you believe in it?

He states in addition

To deny from the beginning that empirical methods can be applied to questions that are completely outside our scientific model of the physical world. Or in a more formal way, if the pre-trial probability is infinitesimally low, the results of the trial will not really change it, and the trial should not be performed. This is what, to my mind, turns the BMJ piece into a 'non-study' although the details provided in the publication (randomization done only once, statement of a wish, analysis, etc.) are correct.

The article has nothing to do with religion. I believe that prayer is a real comfort and help to a believer. I do not believe it should be tested in controlled trials.

The reader is encouraged to peruse the archived responses on the BMJ web site to see how varied the engendered views/opinions can be. There are 91 published letters.

Measuring bias in standardized testing

Numbers Rule Your World

by Kaiser Fung, McGraw-Hill, 2010

Educational Testing Service has been working for years on ways to ensure the fairness of the SAT to all test takers, especially to level the playing field for blacks and whites. Even when I worked in its math department in the late 1960s, bias was a serious concern, but we only had qualitative means of trying to identify potential bias.

After several efforts since the 1960s to quantify potential bias, and to resolve a Simpson’s paradox issue described in Fung’s book, ETS now first stratifies each group – blacks and whites – according to ability level. Then it compares the success rates, on individual items in the experimental pre-test sections, between comparable groups so that items with wide disparities in success rates can be considered for exclusion. The procedure is called differential item functioning (DIF) analysis.

In practice, ETS uses five ability groups based on total test score. …. DIF analysis requires classifying examinees by their “ability,” which also happens to be what the SAT is supposed to measure. Thus, no external source exists for such classification.
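The reason for stratifying before comparing can be illustrated with a toy example. Everything below is hypothetical: the counts are invented, only two ability strata are used instead of five, and comparing raw within-stratum success rates is a simplification rather than the statistic ETS actually computes. It shows a Simpson's-paradox pattern in which two groups differ in overall success rate on an item even though examinees of equal ability succeed at the same rate.

```python
# Hypothetical (examinees, correct answers) on one item, per ability stratum.
data = {
    "group A": {"low": (80, 32), "high": (20, 16)},
    "group B": {"low": (20, 8), "high": (80, 64)},
}


def overall_rate(strata):
    """Success rate on the item, ignoring ability."""
    examinees = sum(n for n, _ in strata.values())
    correct = sum(c for _, c in strata.values())
    return correct / examinees


def stratum_rates(strata):
    """Success rate on the item within each ability stratum."""
    return {name: c / n for name, (n, c) in strata.items()}


for group, strata in data.items():
    print(group, "overall:", overall_rate(strata), "by stratum:", stratum_rates(strata))
```

In this made-up data the overall gap comes entirely from the different mix of ability levels in the two groups, so a stratified comparison would not flag the item.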

For more information, see the book Methods for Identifying Biased Test Items, Sage, 1994.

A review of the Methods book appeared in the Journal of Educational Measurement, Summer 1996, pp. 253-256.

A central theme throughout this book is that DIF indexes yielded by the application of statistical procedures must be differentiated from item bias.

Discussion

How do you think that ETS identifies student “ability” without access to any student academic records or other “IQ” test results? Explain why this may be a case of circular reasoning.

Submitted by Margaret Cibes