Chance News 60: Difference between revisions

| (82 intermediate revisions by 3 users not shown) | |||

| Line 16: | Line 16: | ||

==Forsooth== | ==Forsooth== | ||

<blockquote> | |||

"When the average age of the halftime act [at the Superbowl] is older than 47, the NFC team, the New Orleans Saints this year, has won nearly two-thirds of the time, and the games are about three times as likely to be blowouts." | |||

</blockquote> | |||

[http://online.wsj.com/article/SB10001424052748704107204575039203193816386.html For Sunday's halftime show, bet the over,] <br>by Ben Austen, Wall Street Journal, 2 February 2010. | |||

Submitted by Paul Alper | |||

<hr> | |||

<blockquote>"Last year, nearly 5,000 teens | |||

died in car crashes. Making it safer for a teen to be in a war zone than on a highway."<br> | |||

</blockquote> | |||

Allstate [http://www.allstate.com/content/refresh-attachments/Teen_Cemetery_Stndcrop.pdf advertisement] promoting a national Graduated Driver's License law. | |||

Submitted by Bill Peterson | |||

<hr> | |||

<blockquote>[My kids' science-fair] experiments never turned out the way they were supposed to, and so we were always having to fudge the results so that the projects wouldn't be screwy. I always felt guilty about that dishonesty ... but now I feel like we were doing real science.</blockquote> | |||

Parent reacting to ongoing scandals in the scientific research community, in [http://online.wsj.com/article/SB10001424052748704041504575045334195791838.html#articleTabs%3Darticle "New Episodes of Scientists Behaving Badly"], by Eric Felten, <i>The Wall Street Journal</i>, February 4, 2010 | |||

<blockquote>Maybe they need 'AA' meetings for scientists..."Hi, my name is ________, I'm a Greedy, Jealous, Pier Reviewer of my scientific colleagues.... I have a PHD and I have lost my ‘moral’ center, and have brought shame to me, my profession, and my University ...."<br> | |||

Chorus ... "Hi ________!”</blockquote> | |||

Blogger responding to parent's comment [http://online.wsj.com/article/SB10001424052748704041504575045334195791838.html#articleTabs%3Dcomments]<br> | |||

Submitted by Margaret Cibes | |||

==Does corporate support really subvert the data analysis== | ==Does corporate support really subvert the data analysis== | ||

| Line 61: | Line 88: | ||

== An interesting problem== | == An interesting problem== | ||

This hasn't hit the news yet but Bob Drake wrote us about an interesting problem. He wrote | This hasn't hit the mainstream news yet but Bob Drake wrote us about an interesting problem. He wrote | ||

<blockquote> Here is an example of e turning up unexpectedly. Select a random number between 0 and 1. Now select another and add it to the first, piling on random numbers. How many random numbers, on average, do you need to make the total greater than 1?</blockquote> | |||

This appears in the notes of Derbyshire's book "Prime Obsession", p. 366. A proof of this can be found [http://www.olimu.com/riemann/FAQs.htm here]. | |||

A version of the problem was posed in a 2004 Who's Counting column, entitled [http://abcnews.go.com/Technology/WhosCounting/story?id=99501&page=1 Imagining a Hit Thriller With Number 'e'], where John Allen Paulos wrote: | |||

<blockquote> | |||

Using a calculator, pick a random whole number between 1 and 1,000. (Say you pick 381.) Pick another random number (Say 191) and add it to the first (which, in this case, results in 572). Continue picking random numbers between 1 and 1,000 and adding them to the sum of the previously picked random numbers. Stop only when the sum exceeds 1,000. (If the third number were 613, for example, the sum would exceed 1,000 after three picks.)<br><br> | |||

How many random numbers, on average, will you need to pick? | |||

</blockquote> | |||

We mentioned this to Charles Grinstead who wrote: | We mentioned this to Charles Grinstead who wrote: | ||

<blockquote>It appears in Feller, vol. 2. But more interesting than that problem is the following generalization: Pick a positive real number M, and play the same game as before, i.e. stop when the sum first equals or exceeds M. Let f(M) denote the average number of summands in this process (so the game that he was looking at corresponds to M = 1, and he saw that it is known that f(1) = e). | <blockquote>It appears in Feller, vol. 2. But more interesting than that problem is the following generalization: Pick a positive real number M, and play the same game as before, i.e. stop when the sum first equals or exceeds M. Let f(M) denote the average number of summands in this process (so the game that he was looking at corresponds to M = 1, and he saw that it is known that f(1) = e). | ||

Clearly, since the average size of the summands is 1/2, f(M) should be about 2M, or perhaps slightly greater than 2M. For example, when M = 1, f(M) is slightly greater than 2. It can be shown that as M goes to infinity, f(M) is asymptotic to 2M + 2/3.</blockquote> | Clearly, since the average size of the summands is 1/2, f(M) should be about 2M, or perhaps slightly greater than 2M. For example, when M = 1, f(M) is slightly greater than 2. It can be shown that as M goes to infinity, f(M) is asymptotic to 2M + 2/3.</blockquote> | ||

| Line 80: | Line 113: | ||

by Sian L. Beilock, Elizabeth A. Gunderson, Gerardo Ramirez, and Susan C. Levine, <i>Proceedings of the National Academy of Sciences</i>, January 25, 2010<br> | by Sian L. Beilock, Elizabeth A. Gunderson, Gerardo Ramirez, and Susan C. Levine, <i>Proceedings of the National Academy of Sciences</i>, January 25, 2010<br> | ||

Four University of Chicago psychologists studied math anxiety and its effect on math achievement | Four University of Chicago psychologists studied math anxiety and its effect on the math achievement of 65 girls and 52 boys taught by 17 female elementary-school teachers. Extensive details about methodology and statistics are provided in the paper, its two appendices, and the supporting information. | ||

The researchers summarized their conclusions in the Abstract: | |||

<blockquote>…. There was no relation between a teacher’s math anxiety and her students’ math achievement at the beginning of the school year. By the school year’s end, however, the more anxious teachers were about math, the more likely girls (but not boys) were to endorse the commonly held stereotype that “boys are good at math, and girls are good at reading” and the lower these girls’ math achievement.</blockquote> | |||

At the end of the paper they state: | |||

<blockquote>… [W]e did not find gender differences in math achievement at either the beginning ... or end ... of the school year. However, … by the school year’s end, girls who confirmed traditional gender ability roles performed worse than girls who did not and worse than boys more generally. We show that these differences are related to the anxiety these girls’ teachers have about math. .... [I]t is an open question as to whether there would be a relation between teacher math anxiety and student math achievement if we had focused on male instead of female teachers.</blockquote> | |||

Submitted by Margaret Cibes at the suggestion of Cathy Schmidt | |||

==Political illiteracy == | |||

[http://www.nytimes.com/2010/01/30/opinion/30blow.html Lost in translation]<br> | |||

New York Times, 29 January 2010<br> | |||

Charles M. Blow<br> | |||

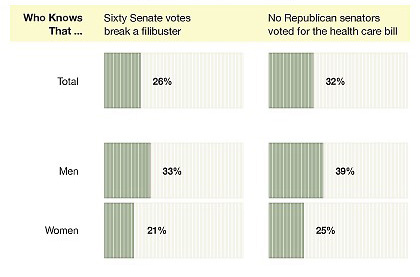

Chance News often features examples of innumeracy or statistical illiteracy, but what about political illiteracy? Congress has spent a year debating health care reform, and the stalled legislation was widely discussed in coverage of President Obama's State of the Union address. Nevertheless, in the above article we read that: "According to a survey released this week by the Pew Research Center for the People and the Press, only 1 person in 4 knew that 60 votes are needed in the Senate to break a filibuster and only 1 in 3 knew that no Senate Republicans voted for the health care bill." | |||

<center> | |||

[[Image:Illiteracy.jpg]] | |||

</center> | |||

The above reproduces a portion of an accompanying graphic entitled [http://www.nytimes.com/imagepages/2010/01/30/opinion/30blowimg.html Widespread Political Illiteracy], which breaks out responses further based on age, education, political affiliation, etc. The results are not encouraging. | |||

The article provides a link to an [http://pewresearch.org/pubs/1478/political-iq-quiz-knowledge-filibuster-debt-colbert-steele online quiz] at the Pew Research Center website, where readers can test their own knowledge. Of the dozen questions there, the filibuster item had the worst score in the survey. | |||

Blow suggests that a possible source of all the confusion may be people's choice of news outlets. He cites another recent poll which found that Fox News was the most trusted network news in the country, with 49% of respondents expressing trust. The ABC, NBC and CBS networks all got less than 40%. The full results from the Public Policy Polling organization are available [http://www.publicpolicypolling.com/pdf/PPP_Release_National_126.pdf here]. Political affiliation appeared to be a key factor. Fox was trusted by 74% of Republican respondents but only 30% of Democrats. By contrast, the other three networks were all trusted by a majority of Democrats but less than 20% of Republicans. According to Dean Debnam, President of Public Policy Polling, | |||

<blockquote> | |||

A generation ago you would have expected Americans to place their trust in the most neutral and unbiased conveyors of news. But the media landscape has really changed and now they’re turning more toward the outlets that tell them what they want to hear. | |||

</blockquote> | |||

Submitted by Paul Alper | |||

==Where's the variability?== | |||

[http://www.fivethirtyeight.com/2010/02/mcgop-virtues-and-vices-of-sameness.html McGOP: The Virtues and Vices of Sameness]<br> | |||

FiveThirtyEight.com, 3 February 2010<br> | |||

Nate Silver | |||

Think about breaking down polling results by age, sex and educational level, etc. One typically expects to find some variations. For example, try looking again at the results from the Pew Center poll cited in the preceding story. | |||

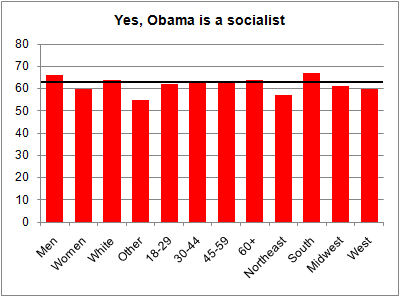

In the present post, however, Nate Silver reports on a striking lack of variability in the [http://www.dailykos.com/storyonly/2010/2/2/832988/-The-2010-Comprehensive-Daily-Kos-Research-2000-Poll-of-Self-Identified-Republicans 2010 Comprehensive Daily Kos/Research 2000 Poll] of opinions among self-identified Republicans. For example, in the poll 63 percent of Republicans agreed with the statement "Barack Obama is a socialist." (This has been a regular complaint from some vocal opponents of Obama's health care proposals.) Now look at the breakdown by subgroups: | |||

<center> | |||

[[Image:Socialist.png]] | |||

</center> | |||

The FiveThirtyEight post includes similar graphics for a number of other questions, all of which display a conspicuous uniformity across subgroups. Here is another example: "About 36 percent of Republicans in the poll said they didn't think Obama was born in the United States (another 22 percent weren't sure.) We see a few regional differences on this item -- higher in the South and lower in other regions -- but otherwise the percentages are fairly constant." | |||

Silver concludes, "On just about every question, the results showed essentially no difference based on age, gender, race, or geography -- once we've established that you're a Republican, these differences seem to be rendered moot." | |||

DISCUSSION QUESTION:<br> | |||

Reactions posted to the blog run the gamut: concern that Republican party leaders and/or conservative news outlets are enforcing a strict ideology; questions about whether comparison data for Democrats are needed; concerns about where the Daily Kos gets data (note: here is a link to the [http://www.research2000.us/about-2/ Research 2000] organization). What do you make of all this? | |||

Submitted by Paul Alper | |||

==Baby Einstein wants data== | |||

[http://www.nytimes.com/2010/01/13/education/13einstein.html ‘Baby Einstein’ Founder Goes to Court]. Tamar Lewin, The New York Times, January 12, 2010. | |||

"Baby Einstein" is a series of videos targeted at children from 3 months to 3 years. They expose children to music and images that are intended to be educational. These videos were popularized in part by the so-called [http://en.wikipedia.org/wiki/Mozart_effect Mozart effect]. | |||

The use of such videos had been discouraged by the American Academy of Pediatrics, and a series of peer-reviewed articles showed that exposure to these videos could actually do more harm than good. | |||

So the owner of the Einstein video series did what any red-blooded American would do. He sued the researchers. | |||

<blockquote>A co-founder of the company that created the “Baby Einstein” videos has asked a judge to order the University of Washington to release records relating to two studies that linked television viewing by young children to attention problems and delayed language development.</blockquote> | |||

What would he do with all that data? | |||

<blockquote>“All we’re asking for is the basis for what the university has represented to be groundbreaking research,” the co-founder, William Clark, said in a statement Monday. “Given that other research studies have not shown the same outcomes, we would like the raw data and analytical methods from the Washington studies so we can audit their methodology, and perhaps duplicate the studies, to see if the outcomes are the same."</blockquote> | |||

Asking for the raw data to conduct a re-analysis is a commonly used tactic among commercial sources harmed by unfavorable research published in the peer-reviewed literature. Here is a [http://www.defendingscience.org/case_studies/upload/Michaels_Letter.pdf nice historical summary of these efforts]. | |||

Submitted by Steve Simon | |||

===Questions=== | |||

1. Does a commercial interest have an inherent right to review data that harms the sales of its product? | |||

2. Should the data from taxpayer subsidized research be made available to the general public? | |||

3. What harms might a researcher suffer if he/she was forced to disclose raw data associated with a study? | |||

==Million, billion...whatever== | |||

Milo Schield sent the following article to the ASA Statistical Education eGroup. | |||

[http://www.latimes.com/news/opinion/commentary/la-oe-smith31-2010jan31,0,2185811.story But who's counting?]<br> | |||

Los Angeles Times, 31 January 2010<br> | |||

Doug Smith<br> | |||

This op/ed piece poses the following question: "The million-billion mistake is among the most common in journalism. But why?" Smith's research identified 23 instances of this mix-up in the Times over the last three years, most often in stories about money. For example, the paper reported that California spent $59.7 million on education in 2008-09, when the correct figure was $59.7 billion. | |||

Lynn Arthur Steen, emeritus professor of mathematics at St. Olaf College, is quoted as explaining | |||

<blockquote>Generally people do not have sufficient experience with large numbers to have any intuitive sense of their size. They have no "anchor" to distinguish a million from a billion the way they might "feel" the difference between $10 and $10,000. So mistakes easily slip by unnoticed. | |||

</blockquote> | |||

Milo has developed a course in quantitative journalism at Augsburg College in Minneapolis. The article quotes his recommendation: | |||

<blockquote>Newspapers should always break large numbers down into rates that make sense, he said. Rather than simply talking about California's $59-billion education budget, newspapers should break that out as $4,900 per household (ouch!), not the $4.90 it would be if the figure were in millions. | |||

</blockquote> | |||
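The per-household comparison in the quotation can be checked with a few lines of arithmetic. The Python sketch below is only an illustration: it backs out the number of households implied by the quoted $4,900 figure (the household count itself is not given in the article, so it is derived here rather than looked up), and then shows what the same division yields if "billion" is mistakenly replaced by "million". The function names are hypothetical.

```python
# Figures taken from the article: a $59.7 billion education budget and
# roughly $4,900 per household. The household count is NOT in the article;
# it is inferred from those two numbers.
BUDGET_DOLLARS = 59.7e9
QUOTED_PER_HOUSEHOLD = 4900.0

def implied_households(budget=BUDGET_DOLLARS, per_household=QUOTED_PER_HOUSEHOLD):
    """Number of households implied by the quoted per-household rate."""
    return budget / per_household

def per_household(budget, households):
    """Break a total budget down into a per-household rate."""
    return budget / households

if __name__ == "__main__":
    households = implied_households()
    print(f"implied households: {households:,.0f}")
    print(f"billion version: ${per_household(59.7e9, households):,.2f}")
    print(f"million version: ${per_household(59.7e6, households):,.2f}")
```

Expressed as a rate, the thousandfold error is hard to miss: a cost of a few dollars per household for a state's entire education budget is implausible on its face.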

Of course, to talk budgets at the national level, eventually we need to understand trillions. The National Numeracy Network has a nice page featuring news stories to address the question [http://serc.carleton.edu/nnn/teaching_news/examples/example1.html How Big is a Trillion?] | |||

Submitted by Bill Peterson | |||

==Surgical risk calculators== | |||

[http://online.wsj.com/article/SB10001424052748703422904575039110166900210.html “New Ways to Calculate the Risks of Surgery”]<br> | |||

by Laura Landro, <i>The Wall Street Journal</i>, February 2, 2010<br> | |||

This article discusses a “new risk calculator that handicaps an individual patient's chances of surgical complications based on personal medical history and physical condition [developed using] data from more than one million patient records gathered as part of the … National Surgical Quality Improvement Program.” While cardiac surgeons have had a risk calculator tool for a while, it is only recently that other surgeons have had a similar tool.<br> | |||

A website, [http://euroscore.org “euroSCORE”], provides access to two automatically calculated scores of cardiac-surgery risk – a “standard" score and a “logistic” score. Both scores are based on the variables Age (years), Gender (Male/Female), LV function (Poor/Moderate/Good), and 14 other medical conditions (Yes/No). A hand calculation of the logistic score may be found at [http://euroscore.org/logisticEuroSCORE.htm “How to calculate the logistic EuroSCORE”]. | |||
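As a rough illustration of how a logistic risk score of this kind is computed, the following Python sketch evaluates the logistic formula p = e^z / (1 + e^z), where z is an intercept plus a sum of coefficient-times-variable terms. The intercept and the age and sex coefficients are assumptions recalled from the published logistic EuroSCORE model, not values given in this article, and only the two terms needed for a female patient with no other risk factors are included. The age coding (1 for ages under 60, then one more for each year from 60 onward) is likewise an assumption, and the function name is hypothetical.

```python
import math

# Assumed coefficients (recalled from the published logistic EuroSCORE model,
# not stated in this article); treat them as illustrative only.
INTERCEPT = -4.789594
BETA_AGE = 0.0666354
BETA_FEMALE = 0.3304052

def logistic_risk_female_no_other_factors(age_years):
    """Predicted mortality (as a probability) for a female patient
    with no other risk factors, under the assumed coefficients."""
    # Assumed age coding: 1 for ages below 60, then 60 -> 2, 61 -> 3, ...
    age_units = 1 if age_years < 60 else age_years - 58
    z = INTERCEPT + BETA_AGE * age_units + BETA_FEMALE
    return math.exp(z) / (1.0 + math.exp(z))

if __name__ == "__main__":
    for age in (25, 50, 75, 100):
        risk = logistic_risk_female_no_other_factors(age)
        print(age, f"{100 * risk:.2f}%")
```

Under these assumptions the predicted risk is the same for every age below 60 and rises only afterward, because the age term is constant until age 60.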

These scores are based on 97 risk factors in nearly 20,000 “consecutive patients from 128 hospitals in eight European countries"; see [http://euroscore.org/what_is_euroscore.htm “How was it developed”]. Physicians are “invited to try out both models and to use the one most suitable to [their] practice.”<br> | |||

Outside of the cardiac-surgery specialty, colorectal-surgery risk calculations are based on 15 variables and data from approximately 30,000 surgery patients at 182 hospitals for the period 2006-2007.<br> | |||

One doctor has stated: | |||

<blockquote>[The calculator] not only helps assess whether the patient is a good candidate for surgery, but also helps [the surgeon] make sure patients understand what they are getting into – the process known as "informed consent."</blockquote> | |||

Another physician stated: | |||

<blockquote>Telling a patient there is a risk of dying from a cancer surgery is not an easy conversation to have. …. The calculator is a tool you need to use in a judicious way, so as not to scare the patients, but to make them feel more comfortable that you are being honest and open with them.</blockquote> | |||

Submitted by Margaret Cibes | |||

===Questions=== | |||

1. Besides a patient's pre-surgery medical conditions, what other aspect(s) of physician/ hospital care might affect his/her risk of complications from cardiac surgery?<br> | |||

2. Consider females facing cardiac surgery under a best-case scenario (no identified problems associated with any of the 15 medical conditions on which the risk scores are based - 14 "No"s and a "Good" LV function). At ages 1, 25, 50, 75, or 100 years, the standard risk scores are, respectively, 1, 1, 5, 10, or 10, and the logistic risk scores are, respectively, 1.22%, 1.22%, 1.22%, 3.47%, or 15.97%.<br> | |||

Also consider the same females under a worst-case scenario (14 "Yes"s and a "Poor" LV function). The standard risk scores are, respectively, 34, 34, 34, 38, or 43, and the logistic risk scores are, respectively, 99.91%, 99.91%, 99.91%, 99.97%, or 99.99%.<br> | |||

(a) Does anything about these results strike you as surprising?<br> | |||

(b) How would you interpret a particular standard score, say 20, on this scale?<br> | |||

(c) Which scale has more meaning for you? What meaning? Why?<br> | |||

(d) Comment on the level of precision with which the logistic scores are provided.<br> | |||

3. Would a risk number help you to make a more informed decision about cardiac surgery? Would it make you more "comfortable"? What other statistic(s) might be helpful to you, especially with respect to a specific doctor or hospital?<br> | |||

Submitted by Margaret Cibes | |||

==Autism paper retracted== | |||

[http://online.wsj.com/article/SB10001424052748704022804575041212437364420.html “Lancet Retracts Study Tying Vaccine to Autism”]<br> | |||

by Shirley S. Wang, <i>The Wall Street Journal</i>, February 3, 2010<br> | |||

[http://www.time.com/time/magazine/article/0,9171,1960277,00.html "Debunked"]<br> | |||

by Claudia Wallis, <i>TIME</i>, February 15, 2010<br> | |||

In 1998 <i>Lancet</i> published a report about a study of 12 British children with gastrointestinal problems, 9 of whom exhibited autistic behaviors. The study purported to find a link between the measles-mumps-rubella (MMR) vaccine and autism.<br> | |||

<i>Lancet</i> has now retracted the article in response to a 2008 CDC study that concluded there was no such causal evidence. (Of the 13 authors, 10 “partially retracted” the paper in 2004.)<br> | |||

<blockquote>The Lancet decided to issue a complete retraction after an independent regulator for doctors in the U.K. concluded last week that the study was flawed. The General Medical Council's report on three of the researchers ... found evidence that some of their actions were conducted for experimental purposes, not clinical care, and without ethics approval. The report also found that Dr. Wakefield drew blood for research purposes from children at his son's birthday party, paying each child £5 (about $8).</blockquote> | |||

A Philadelphia chief of infectious diseases commented: | |||

<blockquote>It's very easy to scare people; it's very hard to unscare them.</blockquote> | |||

The <i>TIME</i> article gives more details about the principal investigator's behavior in carrying out the "study": (1) he did not disclose that he was paid to advise the families in their legal suits against the vaccine manufacturers; (2) he handpicked the children, paying some at his son's birthday party to give blood; and (3) he performed invasive procedures on some children in the study without appropriate consent.<br> | |||

Submitted by Margaret Cibes | |||

===Additional references=== | |||

See [http://chance.dartmouth.edu/chancewiki/index.php/Chance_News_45#Autism_Statistics_Lesson Chance News 45] for an earlier discussion of the MMR-autism story. Brian Deer has written a series of investigative articles on the controversy for the Sunday Times (of London); his latest installment is [http://briandeer.com/solved/solved.htm here]. See also his longer discussion of the [http://briandeer.com/solved/story-highlights.htm history of the investigation]. | |||

Submitted by Paul Alper | |||

==Super Bowl XLIV== | |||

[http://fifthdown.blogs.nytimes.com/2010/02/08/analyzing-sean-paytons-three-gutsy-calls/ “Analyzing Sean Payton’s Gutsy Calls”]<br> | |||

by Brian Burke, <i>The New York Times</i>, February 8, 2010<br> | |||

In this column Burke provides probability calculations with respect to several decisions made by the coach of the New Orleans Saints during Super Bowl XLIV. Several bloggers [http://fifthdown.blogs.nytimes.com/2010/02/08/analyzing-sean-paytons-three-gutsy-calls/] pointed out, at the end of the article, that there were other factors that should have been included in Burke’s calculations.<br> | |||

Burke is a former Navy pilot who now operates the website [http://www.advancednflstats.com/ “Advanced NFL Stats”], a “blog about football, math and human behavior.” This website provides a calculator [http://wp.advancednflstats.com/winprobcalc1.php] that enables a visitor to compute a team’s probability of winning a game, based on score difference, time left, quarter, field position, and “down” and “to go” data.<br> | |||

See Burke's earlier column, [http://fifthdown.blogs.nytimes.com/2010/01/28/be-skeptical-of-my-super-bowl-prediction-colts-in-a-close-one/ “Be Skeptical of My Super Bowl Prediction: Colts in a Close One”], in <i>The New York Times</i>, January 28, 2010. With great prescience, he wrote: | |||

<blockquote>Publishing predictions in public each week has helped me appreciate concepts like hindsight bias. I’ve learned two big lessons over the past four seasons. First, our ability to predict the future, even in bounded and controlled events with plentiful information, is terrible. Second, we quickly forget how wrong our predictions were.</blockquote> | |||

Submitted by Margaret Cibes at the suggestion of Jim Greenwood | |||

==Super Bowl cows== | |||

[http://www.bookofodds.com/Daily-Life-Activities/Sports/Articles/A0147-Super-Bowl-Cows Super Bowl Cows]<br> | |||

Book of Odds blog<br> | |||

posted by David Gassko, Ian Stanczyk | |||

Consider the odds that a cow will make it to the Super Bowl. Sadly for the cow, this means appearing as part of a football. The above post assembles a variety of figures (66.2 million adult cattle in US; approximately 1 in 2 slaughtered each year; each hide produces approximately 20 footballs; etc.) to estimate a probability. Bottom line: the chance is given as about 1 in 17,420,000. | |||

A class discussion might consider the plausibility of these assumptions. | |||

Submitted by Jeanne Albert | |||

Latest revision as of 16:23, 15 February 2010

Quotations

"As a Usenet discussion grows longer, the probability of a comparison involving Nazis or Hitler approaches one."

Godwin's Law, as quoted at Wikipedia.

Submitted by Steve Simon

Chances are, the disparity between the [Commerce Department’s quarterly GDP report and the Labor Department’s monthly unemployment report] was mostly statistical noise. Those who read great meaning into either were deceiving themselves. It's a classic case of information overload making it harder to see the trends and patterns that matter. In other words, we might be better off paying less (or at least less frequent) attention to data. …. Most of us aren't professional forecasters. What should we make of the cacophony of monthly and weekly data? The obvious advice is to focus on trends and ignore the noise. But the most important economic moments come when trends reverse — when what appears to be noise is really a sign that the world has changed. Which is why, in these uncertain times, we jump whenever a new economic number comes out. Even one that will be revised in a month.

“Statistophobia: When Economic Indicators Aren’t Worth That Much”

by Justin Fox, TIME, February 1, 2010

Submitted by Margaret Cibes

Forsooth

"When the average age of the halftime act [at the Superbowl] is older than 47, the NFC team, the New Orleans Saints this year, has won nearly two-thirds of the time, and the games are about three times as likely to be blowouts."

For Sunday's halftime show, bet the over,

by Ben Austen, Wall Street Journal, 2 February 2010.

Submitted by Paul Alper

"Last year, nearly 5,000 teens

died in car crashes. Making it safer for a teen to be in a war zone than on a highway."

Allstate advertisement promoting a national Graduated Driver's License law.

Submitted by Bill Peterson

[My kids' science-fair] experiments never turned out the way they were supposed to, and so we were always having to fudge the results so that the projects wouldn't be screwy. I always felt guilty about that dishonesty ... but now I feel like we were doing real science.

Parent reacting to ongoing scandals in the scientific research community, in "New Episodes of Scientists Behaving Badly", by Eric Felten, The Wall Street Journal, February 4, 2010

Maybe they need 'AA' meetings for scientists..."Hi, my name is ________, I'm a Greedy, Jealous, Pier Reviewer of my scientific colleagues.... I have a PHD and I have lost my ‘moral’ center, and have brought shame to me, my profession, and my University ...."

Chorus ... "Hi ________!”

Blogger responding to parent's comment [1]

Submitted by Margaret Cibes

Does corporate support really subvert the data analysis

Corporate Backing for Research? Get Over It. John Tierney, The New York Times, January 25, 2010.

We've been warned many times to beware of corporate influences on research, and many research journals are now demanding more, in terms of disclosure and independent review, from researchers who have a conflict of interest. But John Tierney has argued that this effort has gone too far.

Conflict-of-interest accusations have become the simplest strategy for avoiding a substantive debate. The growing obsession with following the money too often leads to nothing but cheap ad hominem attacks.

Mr. Tierney argues that this emphasis on money prevents thoughtful examination of all the motives associated with presentation of results.

It is simpler to note a corporate connection than to analyze all the other factors that can bias researchers’ work: their background and ideology, their yearnings for publicity and prestige and power, the politics of their profession, the agendas of the public agencies and foundations and grant committees that finance so much scientific work.

Another emotion is at work as well: snobbery.

Many scientists, journal editors and journalists see themselves as a sort of priestly class untainted by commerce, even when they work at institutions that regularly collect money from corporations in the form of research grants and advertising. We trust our judgments to be uncorrupted by lucre — and we would be appalled if, say, a national commission to study the publishing industry were composed only of people who had never made any money in the business. (How dare those amateurs tell us how to run our profession!) But we insist that others avoid even “the appearance of impropriety.”

Mr. Tierney cites a controversial requirement imposed by the Journal of the American Medical Association in 2005.

Citing “concerns about misleading reporting of industry-sponsored research,” the journal refused to publish such work unless there was at least one author with no ties to the industry who would formally vouch for the data.

This policy has been criticized by other journals.

That policy was called “manifestly unfair” by BMJ (formerly The British Medical Journal), which criticized JAMA for creating a “hierarchy of purity among authors.”

Submitted by Steve Simon.

Questions

1. Do you side with JAMA or BMJ on the policy of an independent author who can formally vouch for the data?

2. Should conflict of interest requirements be different for research articles involving subjective opinions, such as editorials, than for research involving objective approaches like clinical trials?

Snow-to-liquid ratios

“Climatology of Snow-to-Liquid Ratio for the Contiguous United States”

by Martin A. Baxter, Charles E. Graves, and James T. Moore, Weather and Forecasting, October 2005

In this paper, two Saint Louis University professors report the results of a National Weather Service study of the ratio of snow to liquid, which concludes that the mean ratio for much of the country is 13, and not the “often-assumed value of 10.” The NWS studied climatology for 30 years. The study found “considerable spatial variation in the mean,” illustrated in lots of maps, tables, and histograms.

[A quantitative precipitation forecast (QPF)] represents the liquid equivalent expected to precipitate from [a weather] system. To convert this liquid equivalent to a snowfall amount, a snow-to-liquid-equivalent ratio (SLR) must be determined. An SLR value of 10 is often assumed as a mean value; however, this value may not be accurate for many locations and meteorological situations. Even if the forecaster has correctly forecasted the QPF, an error in the predicted SLR value may cause significant errors in forecasted snowfall amount.

The SLR of 10:1, as a rough approximation, dates from 1875. Subsequent similar estimates did “not account for geographic location or in-cloud microphysical processes.”

The goals of this paper are to present the climatological values of SLR for the contiguous United States and examine the typical variability using histograms of SLR for various NWS county warning areas (CWAs). [Sections of the paper describe] the datasets and methodology used to perform this research; [present] the 30-yr climatology of SLR for the contiguous United States; [detail] the frequency of observed SLR values through the use of histograms for selected NWS CWAs; [include] a brief discussion on how the climatology of SLR may be used operationally; and [summarize] the results and [present] suggestions for future research.

Submitted by Margaret Cibes at the suggestion of Jim Greenwood

An interesting problem

This hasn't hit the mainstream news yet, but Bob Drake wrote us about an interesting problem. He wrote:

Here is an example of e turning up unexpectedly. Select a random number between 0 and 1. Now select another and add it to the first, piling on random numbers. How many random numbers, on average, do you need to make the total greater than 1?

This appears in the notes of Derbyshire's book "Prime Obsession", p. 366. A proof can be found [http://www.olimu.com/riemann/FAQs.htm here].
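Readers who would rather experiment than read a proof can try a quick simulation. The sketch below is our own illustration, not taken from Derbyshire; the seed and the number of trials are arbitrary. It also sums the series that the usual argument produces: the chance that more than n numbers are needed is the chance that n uniform numbers sum to at most 1, which is 1/n!.

```python
import math
import random

def picks_to_exceed_one(rng):
    """Count uniform(0, 1) draws needed for the running total to exceed 1."""
    total, count = 0.0, 0
    while total <= 1.0:
        total += rng.random()
        count += 1
    return count

rng = random.Random(60)  # fixed seed so the run is repeatable
trials = 200_000
mean_picks = sum(picks_to_exceed_one(rng) for _ in range(trials)) / trials

# P(more than n draws are needed) = 1/n!, so the mean is the series for e.
series = sum(1 / math.factorial(n) for n in range(20))

print(mean_picks, series, math.e)
```

The simulated average should land within a few thousandths of e, while the series agrees with e to machine precision.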

A version of the problem was posed in a 2004 Who's Counting column, entitled Imagining a Hit Thriller With Number 'e', where John Allen Paulos wrote:

Using a calculator, pick a random whole number between 1 and 1,000. (Say you pick 381.) Pick another random number (Say 191) and add it to the first (which, in this case, results in 572). Continue picking random numbers between 1 and 1,000 and adding them to the sum of the previously picked random numbers. Stop only when the sum exceeds 1,000. (If the third number were 613, for example, the sum would exceed 1,000 after three picks.)

How many random numbers, on average, will you need to pick?
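For the whole-number version the answer can be computed exactly rather than simulated. The sketch below is our own addition, not from the Paulos column. It rests on a counting fact: the number of ways for k picks from 1 to n to sum to at most n is the binomial coefficient C(n, k), so the chance that more than k picks are needed is C(n, k)/n^k. Summing over k and applying the binomial theorem gives (1 + 1/n)^n, which approaches e as n grows.

```python
from fractions import Fraction
from math import comb, e

def expected_picks(n):
    """Exact mean number of picks from 1..n until the running sum exceeds n."""
    # Sum over k of C(n, k) / n**k, written over the common denominator n**n.
    numerator = sum(comb(n, k) * n ** (n - k) for k in range(n + 1))
    return Fraction(numerator, n ** n)

paulos = expected_picks(1000)
print(float(paulos), e)
```

For n = 1000 the exact mean falls just short of e, differing only in the third decimal place.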

We mentioned this to Charles Grinstead who wrote:

It appears in Feller, vol. 2. But more interesting than that problem is the following generalization: Pick a positive real number M, and play the same game as before, i.e. stop when the sum first equals or exceeds M. Let f(M) denote the average number of summands in this process (so the game that he was looking at corresponds to M = 1, and he saw that it is known that f(1) = e). Clearly, since the average size of the summands is 1/2, f(M) should be about 2M, or perhaps slightly greater than 2M. For example, when M = 1, f(M) is slightly greater than 2. It can be shown that as M goes to infinity, f(M) is asymptotic to 2M + 2/3.
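Grinstead's generalization is also easy to explore numerically. The following sketch is an illustration of ours, with an arbitrary seed, trial count, and choice of M values. It estimates f(M) by simulation and prints it next to 2M + 2/3.

```python
import random

def summands_to_reach(m, rng):
    """Count uniform(0, 1) draws until the running sum first equals or exceeds m."""
    total, count = 0.0, 0
    while total < m:
        total += rng.random()
        count += 1
    return count

def estimate_f(m, trials=50_000, seed=60):
    """Monte Carlo estimate of f(m), the average number of summands."""
    rng = random.Random(seed)
    return sum(summands_to_reach(m, rng) for _ in range(trials)) / trials

for m in (1, 2, 5, 10):
    print(m, round(estimate_f(m), 3), round(2 * m + 2 / 3, 3))
```

In runs like this the estimate for M = 1 sits near e, and the estimates for larger M track 2M + 2/3 closely, suggesting the asymptotic value takes hold quickly.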

Submitted by Laurie Snell

Girls and math study

“Female teachers’ math anxiety affects girls’ math achievement”

“Appendices: Questionnaires”

“Supporting Information: Statistics”

by Sian L. Beilock, Elizabeth A. Gunderson, Gerardo Ramirez, and Susan C. Levine, Proceedings of the National Academy of Sciences, January 25, 2010

Four University of Chicago psychologists studied math anxiety and its effect on the math achievement of 65 girls and 52 boys taught by 17 female elementary-school teachers. Extensive details about methodology and statistics are provided in the paper, its two appendices, and the supporting information.

The researchers summarized their conclusions in the Abstract:

…. There was no relation between a teacher’s math anxiety and her students’ math achievement at the beginning of the school year. By the school year’s end, however, the more anxious teachers were about math, the more likely girls (but not boys) were to endorse the commonly held stereotype that “boys are good at math, and girls are good at reading” and the lower these girls’ math achievement.

At the end of the paper they state:

… [W]e did not find gender differences in math achievement at either the beginning ... or end ... of the school year. However, … by the school year’s end, girls who confirmed traditional gender ability roles performed worse than girls who did not and worse than boys more generally. We show that these differences are related to the anxiety these girls’ teachers have about math. .... [I]t is an open question as to whether there would be a relation between teacher math anxiety and student math achievement if we had focused on male instead of female teachers.

Submitted by Margaret Cibes at the suggestion of Cathy Schmidt

Political illiteracy

Lost in translation

New York Times, 29 January 2010

Charles M. Blow

Chance News often features examples of innumeracy or statistical illiteracy, but what about political illiteracy? Congress has spent a year debating health care reform, and the stalled legislation was widely discussed in coverage of President Obama's State of the Union address. Nevertheless, in the above article we read that: "According to a survey released this week by the Pew Research Center for the People and the Press, only 1 person in 4 knew that 60 votes are needed in the Senate to break a filibuster and only 1 in 3 knew that no Senate Republicans voted for the health care bill."

The above reproduces a portion of an accompanying graphic entitled Widespread Political Illiteracy, which breaks out responses further based on age, education, political affiliation, etc. The results are not encouraging.

The article provides a link to an online quiz at the Pew Research Center website, where readers can test their own knowledge. Of the dozen questions there, the filibuster item had the worst score in the survey.

Blow suggests that a possible source of all the confusion may be people's choice of news outlets. He cites another recent poll which found that Fox News was the most trusted network news in the country, with 49% of respondents expressing trust. The ABC, NBC and CBS networks all got less than 40%. The full results from the Public Policy Polling organization are available here. Political affiliation appeared to be a key factor. Fox was trusted by 74% of Republican respondents but only 30% of Democrats. By contrast, the other three networks were all trusted by a majority of Democrats but less than 20% of Republicans. According to Dean Debnam, President of Public Policy Polling:

A generation ago you would have expected Americans to place their trust in the most neutral and unbiased conveyors of news. But the media landscape has really changed and now they’re turning more toward the outlets that tell them what they want to hear.

Submitted by Paul Alper

Where's the variability?

McGOP: The Virtues and Vices of Sameness

FiveThirtyEight.com, 3 February 2010

Nate Silver

Think about breaking down polling results by age, sex, educational level, etc. One typically expects to find some variation. For example, try looking again at the results from the Pew Center poll cited in the preceding story.

In the present post, however, Nate Silver reports on a striking lack of variability in the 2010 Comprehensive Daily Kos/Research 2000 Poll of opinions among self-identified Republicans. For example, in the poll 63 percent of Republicans agreed with the statement "Barack Obama is a socialist." (This has been a regular complaint from some vocal opponents of Obama's health care proposals.) Now look at the breakdown by subgroups:

The FiveThirtyEight post includes similar graphics for a number of other questions, all of which display a conspicuous uniformity across subgroups. Here is another example: "About 36 percent of Republicans in the poll said they didn't think Obama was born in the United States (another 22 percent weren't sure.) We see a few regional differences on this item -- higher in the South and lower in other regions -- but otherwise the percentages are fairly constant."

Silver concludes, "On just about every question, the results showed essentially no difference based on age, gender, race, or geography -- once we've established that you're a Republican, these differences seem to be rendered moot."

DISCUSSION QUESTION:

Reactions posted to the blog run the gamut: concern that Republican party leaders and/or conservative news outlets are enforcing a strict ideology; questions about whether comparison data for Democrats are needed; concerns about where the Daily Kos gets data (note: here is a link to the Research 2000 organization). What do you make of all this?

Submitted by Paul Alper

Baby Einstein wants data

‘Baby Einstein’ Founder Goes to Court. Tamar Lewin, The New York Times, January 12, 2010.

"Baby Einstein" is a series of videos targeted at children from 3 months to 3 years. They expose children to music and images that are intended to be educational. These videos were popularized in part by the so-called Mozart effect.

The use of such videos had been discouraged by the American Academy of Pediatrics, and a series of peer-reviewed articles showed that exposure to these videos could actually do more harm than good.

So the owner of the Einstein video series did what any red-blooded American would do. He sued the researchers.

A co-founder of the company that created the “Baby Einstein” videos has asked a judge to order the University of Washington to release records relating to two studies that linked television viewing by young children to attention problems and delayed language development.

What would he do with all that data?

“All we’re asking for is the basis for what the university has represented to be groundbreaking research,” the co-founder, William Clark, said in a statement Monday. “Given that other research studies have not shown the same outcomes, we would like the raw data and analytical methods from the Washington studies so we can audit their methodology, and perhaps duplicate the studies, to see if the outcomes are the same."

Asking for the raw data to conduct a re-analysis is a commonly used tactic among commercial sources harmed by unfavorable research published in the peer-reviewed literature. Here is a nice historical summary of these efforts.

Submitted by Steve Simon

Questions

1. Does a commercial interest have an inherent right to review data that harms the sales of its product?

2. Should the data from taxpayer-subsidized research be made available to the general public?

3. What harms might a researcher suffer if he/she were forced to disclose raw data associated with a study?

Million, billion...whatever

Milo Schield sent the following article to the ASA Statistical Education eGroup.

But who's counting?

Los Angeles Times, 31 January 2010

Doug Smith

This op/ed piece poses the following question: "The million-billion mistake is among the most common in journalism. But why?" Smith's research identified 23 instances of this mix-up in the Times over the last three years, most often in stories about money. For example, the paper reported that California spent $59.7 million on education in 2008-09, when the correct figure was $59.7 billion.

Lynn Arthur Steen, emeritus professor of mathematics at St. Olaf College, is quoted as explaining:

Generally people do not have sufficient experience with large numbers to have any intuitive sense of their size. They have no "anchor" to distinguish a million from a billion the way they might "feel" the difference between $10 and $10,000. So mistakes easily slip by unnoticed.

Milo has developed a course in quantitative journalism at Augsburg College in Minneapolis. The article quotes his recommendation:

Newspapers should always break large numbers down into rates that make sense, he said. Rather than simply talking about California's $59-billion education budget, newspapers should break that out as $4,900 per household (ouch!), not the $4.90 it would be if the figure were in millions.
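Schield's check is easy to automate. In the sketch below, the helper name and the household count are our assumptions. The article does not give a household figure, so we use 12 million, roughly the count implied by dividing $59 billion by the quoted $4,900.

```python
def per_household(total_dollars, households):
    """Express a budget total as dollars per household."""
    return total_dollars / households

HOUSEHOLDS = 12_000_000  # assumption: roughly the count implied by the quoted $4,900

billion_version = per_household(59e9, HOUSEHOLDS)  # the correct figure
million_version = per_household(59e6, HOUSEHOLDS)  # the same figure misreported in millions

print(f"${billion_version:,.0f} vs ${million_version:,.2f} per household")
```

A per-household cost of a few dollars for a state's entire education budget fails any sanity check, which is the point of converting totals to rates.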

Of course, to talk budgets at the national level, eventually we need to understand trillions. The National Numeracy Network has a nice page featuring news stories to address the question How Big is a Trillion?

Submitted by Bill Peterson

Surgical risk calculators

“New Ways to Calculate the Risks of Surgery”

by Laura Landro, The Wall Street Journal, February 2, 2010

This article discusses a “new risk calculator that handicaps an individual patient's chances of surgical complications based on personal medical history and physical condition [developed using] data from more than one million patient records gathered as part of the … National Surgical Quality Improvement Program.” While cardiac surgeons have had a risk calculator tool for a while, it is only recently that other surgeons have had a similar tool.

A website, “euroSCORE”, provides access to two automatically calculated scores of cardiac-surgery risk – a “standard" score and a “logistic” score. Both scores are based on the variables Age (years), Gender (Male/Female), LV function (Poor/Moderate/Good), and 14 other medical conditions (Yes/No). A hand calculation of the logistic score may be found at “How to calculate the logistic EuroSCORE”.
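For readers who want to see what the hand calculation involves, here is a minimal sketch covering only age and sex, with every other risk factor absent. The three coefficients are quoted from memory of the published logistic EuroSCORE model and should be treated as assumptions to be checked against the page linked above. The function names are ours.

```python
import math

# Assumed coefficients, recalled from the published logistic EuroSCORE model;
# verify against the linked hand-calculation page before relying on them.
BETA_0 = -4.789594       # intercept
BETA_AGE = 0.0666354     # per age point
BETA_FEMALE = 0.3304052  # added for female patients

def age_points(age_years):
    """1 point below age 60, then one more point per year (60 -> 2, 61 -> 3, ...)."""
    return 1 if age_years < 60 else age_years - 58

def best_case_risk(age_years, female=True):
    """Predicted mortality when no other risk factor is present."""
    logit = BETA_0 + BETA_AGE * age_points(age_years) + (BETA_FEMALE if female else 0.0)
    return math.exp(logit) / (1 + math.exp(logit))

for age in (1, 25, 50, 75, 100):
    print(age, f"{100 * best_case_risk(age):.2f}%")
```

With these values the sketch appears to reproduce the best-case logistic scores for females quoted in question 2 below, which is some reassurance that the recalled coefficients are right.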

These scores are based on 97 risk factors in nearly 20,000 “consecutive patients from 128 hospitals in eight European countries"; see “How was it developed”. Physicians are “invited to try out both models and to use the one most suitable to [their] practice.”

Outside of the cardiac-surgery specialty, colorectal-surgery risk calculations are based on 15 variables and data from approximately 30,000 surgery patients at 182 hospitals for the period 2006-2007.

One doctor has stated:

[The calculator] not only helps assess whether the patient is a good candidate for surgery, but also helps [the surgeon] make sure patients understand what they are getting into – the process known as "informed consent."

Another physician stated:

Telling a patient there is a risk of dying from a cancer surgery is not an easy conversation to have. …. The calculator is a tool you need to use in a judicious way, so as not to scare the patients, but to make them feel more comfortable that you are being honest and open with them.

Submitted by Margaret Cibes

Questions

1. Besides a patient's pre-surgery medical conditions, what other aspect(s) of physician/hospital care might affect his/her risk of complications from cardiac surgery?

2. Consider females facing cardiac surgery under a best-case scenario (no identified problems associated with any of the 15 medical conditions on which the risk scores are based - 14 "No"s and a "Good" LV function). At ages 1, 25, 50, 75, or 100 years, the standard risk scores are, respectively, 1, 1, 5, 10, or 10, and the logistic risk scores are, respectively, 1.22%, 1.22%, 1.22%, 3.47%, or 15.97%.

Also consider the same females under a worst-case scenario (14 "Yes"s and a "Poor" LV function). The standard risk scores are, respectively, 34, 34, 34, 38, or 43, and the logistic risk scores are, respectively, 99.91%, 99.91%, 99.91%, 99.97%, or 99.99%.

(a) Does anything about these results strike you as surprising?

(b) How would you interpret a particular standard score, say 20, on this scale?

(c) Which scale has more meaning for you? What meaning? Why?

(d) Comment on the level of precision with which the logistic scores are provided.

3. Would a risk number help you to make a more informed decision about cardiac surgery? Would it make you more "comfortable"? What other statistic(s) might be helpful to you, especially with respect to a specific doctor or hospital?

Submitted by Margaret Cibes

Autism paper retracted

“Lancet Retracts Study Tying Vaccine to Autism”

by Shirley S. Wang, The Wall Street Journal, February 3, 2010

"Debunked"

by Claudia Wallis, TIME, February 15, 2010

In 1998 The Lancet published a report on a study of 12 British children with gastrointestinal problems, 9 of whom exhibited autistic behaviors. The study purported to find a link between the measles-mumps-rubella (MMR) vaccine and autism.

The Lancet has now retracted the article in response to a 2008 CDC study that found no evidence of such a causal link. (Of the 13 authors, 10 “partially retracted” the paper in 2004.)

The Lancet decided to issue a complete retraction after an independent regulator for doctors in the U.K. concluded last week that the study was flawed. The General Medical Council's report on three of the researchers ... found evidence that some of their actions were conducted for experimental purposes, not clinical care, and without ethics approval. The report also found that Dr. Wakefield drew blood for research purposes from children at his son's birthday party, paying each child £5 (about $8).

A Philadelphia chief of infectious diseases commented:

It's very easy to scare people; it's very hard to unscare them.

The TIME article gives more details about the principal investigator's behavior in carrying out the "study": (1) he did not disclose that he was paid to advise the families in their legal suits against the vaccine manufacturers; (2) he handpicked the children, paying some at his son's birthday party to give blood; and (3) he performed invasive procedures on some children in the study without appropriate consent.

Submitted by Margaret Cibes

Additional references

See Chance News 45 for an earlier discussion of the MMR-autism story. Brian Deer has written a series of investigative articles on the controversy for the Sunday Times (of London); his latest installment is here. See also his longer discussion of the history of the investigation.

Submitted by Paul Alper

Super Bowl XLIV

“Analyzing Sean Payton’s Gutsy Calls”

by Brian Burke, The New York Times, February 8, 2010

In this column Burke provides probability calculations for several decisions made by the coach of the New Orleans Saints during Super Bowl XLIV. Several bloggers, commenting at the end of the article, pointed out other factors that should have been included in Burke’s calculations.

Burke is a former Navy pilot who now operates “Advanced NFL Stats”, a “blog about football, math and human behavior.” The site provides a calculator that enables a visitor to compute a team’s probability of winning a game, based on score difference, time left, quarter, field position, and “down” and “to go” data.

See Burke's earlier column, “Be Skeptical of My Super Bowl Prediction: Colts in a Close One”, in The New York Times, January 28, 2010. With great prescience, he wrote:

Publishing predictions in public each week has helped me appreciate concepts like hindsight bias. I’ve learned two big lessons over the past four seasons. First, our ability to predict the future, even in bounded and controlled events with plentiful information, is terrible. Second, we quickly forget how wrong our predictions were.

Submitted by Margaret Cibes at the suggestion of Jim Greenwood

Super Bowl cows

Super Bowl Cows

Book of Odds blog

posted by David Gassko, Ian Stanczyk

Consider the odds that a cow will make it to the Super Bowl. Sadly for the cow, this means appearing as part of a football. The above post assembles a variety of figures (66.2 million adult cattle in US; approximately 1 in 2 slaughtered each year; each hide produces approximately 20 footballs; etc.) to estimate a probability. Bottom line: the chance is given as about 1 in 17,420,000.

A class discussion might consider the plausibility of these assumptions.

Submitted by Jeanne Albert