Chance News 109: Difference between revisions


July 1, 2016 to December 31, 2016

==Quotations==

From an 1840s letter from Charles Babbage to Alfred, Lord Tennyson, about two lines in a Tennyson poem: “Every minute dies a man, / Every minute one is born.”<br>

“I need hardly point out to you that this calculation would tend to keep the sum total of the world’s population in a state of perpetual equipoise, whereas it is a well-known fact that the said sum total is constantly on the increase. I would therefore take the liberty of suggesting that in the next edition of our excellent poem the erroneous calculation to which I refer should be corrected as follows: ‘Every moment dies a man / And one and a sixteenth is born.’ I may add that the exact figures are 1.167, but something must, of course, be conceded to the laws of metre.”

<div align=right>--cited by James Gleick, in [https://www.amazon.com/Information-History-Theory-Flood/dp/1400096235 <i>The Information</i>], 2011</div>

Submitted by Margaret Cibes

----

"You can slice and dice it any way you like, but this isn’t like ''Consumer Reports'', which tests something to see if it does or doesn’t work. The interaction between a student and an institution is not the same as the interaction between a student and a refrigerator."

<div align=right>-- Willard Dix, quoted in: [http://www.nytimes.com/2016/10/30/opinion/sunday/how-to-make-sense-of-college-rankings.html How to make sense of college rankings], ''New York Times'', 29 October 2016 </div>

----

"There is no better way to build confidence in a theory than to believe it is not testable."

<div align=right>--UChicago economist Richard Thaler in [https://www.amazon.com/Misbehaving-Behavioral-Economics-Richard-Thaler/dp/1501238698 <i>Misbehaving</i>], 2015</div>

Submitted by Margaret Cibes<br>

==Forsooth==

"The LSAT predicted 14 percent of the variance between the first-year grades [in a study of 981 University of Pennsylvania Law School students]. And it did a little better the second year: 15 percent. Which means that 85 percent of the time it was wrong."

<div align=right>--Lani Guinier, in: ''The Tyranny of the Meritocracy: Democratizing Higher Education in America'' (Beacon Press 2015), p. 19. </div>

Submitted by Margaret Cibes

----

“These chemicals are largely unknown,” said David Bellinger, a professor at the Harvard University School of Public Health, whose research has attributed the loss of nearly 17 million I.Q. points among American children 5 years old and under to one class of insecticides.

<div align=right>--Danny Hakim, [http://www.nytimes.com/2016/10/30/business/gmo-promise-falls-short.html "Doubts About the Promised Bounty of Genetically Modified Crops"], <i>New York Times</i>, October 29, 2016</div>

Submitted by Margaret Cibes at the suggestion of Jim Greenwood

==Guide to bad statistics==

[https://www.theguardian.com/science/2016/jul/17/politicians-dodgy-statistics-tricks-guide Our nine-point guide to spotting a dodgy statistic]<br>
by David Spiegelhalter, ''The Guardian'', 17 July 2016

Published in the wake of the Brexit debate, but obviously applicable to the upcoming US presidential election, the article describes these nine strategies for twisting numbers to back a specious claim.

* Use a real number, but change its meaning
* Make the number look big (but not too big)
* Casually imply causation from correlation
* Choose your definitions carefully
* Use total numbers rather than proportions (or whichever way suits your argument)
* Don’t provide any relevant context
* Exaggerate the importance of a possibly illusory change
* Prematurely announce the success of a policy initiative using unofficial selected data
* If all else fails, just make the numbers up

Submitted by Bill Peterson

==Cancer, lifestyle, and luck==

[http://www.nytimes.com/2016/07/06/upshot/helpless-to-prevent-cancer-actually-a-lot-is-in-your-control.html Helpless to prevent cancer? Actually, quite a bit is in your control]<br>
by Aaron E. Carroll, 'TheUpshot' blog, ''New York Times'', 5 July 2016

A controversial news story last year suggested that whether or not you get cancer is mostly dependent on luck. For more discussion, see [https://www.causeweb.org/wiki/chance/index.php/Chance_News_103#Cancer_and_luck Cancer and luck] in Chance News 103.

The present article has a different message. Rather than aggregating the analysis across all types of cancer, which led to some of the earlier misinterpretations, it focuses on how healthy lifestyles can reduce the risk of particular cancers. Data are reported from a [http://jamanetwork.com/journals/jamaoncology/article-abstract/2522371 study] in the journal ''JAMA Oncology''. For example, lung cancer is the leading cause of cancer death in the US, and the study found that "about 82 percent of women and 78 percent of men who got lung cancer might have prevented it through healthy behaviors." Obligatory reminder here that these are observational data, but the smoking and lung cancer story is of course a famous one in statistics!

Overall, the study estimated that a quarter of cancers in women and a third of those in men are preventable by lifestyle choices.

Submitted by Bill Peterson

==Statistical reasoning in journalism education==

Bob Griffin sent a link to the following:

[https://www.researchgate.net/publication/281997603_Chair_support_faculty_entrepreneurship_and_the_teaching_of_statistical_reasoning_to_journalism_undergraduates_in_the_United_States Chair support, faculty entrepreneurship, and the teaching of statistical reasoning to journalism undergraduates in the United States]<br>
by Robert Griffin and Sharon Dunwoody, ''Journalism'', July 2015

==Did Melania plagiarize?==

[http://www.sciencealert.com/a-physicist-has-calculated-the-probability-melania-trump-didn-t-plagiarise-her-speech A physicist has calculated the probability Melania Trump didn't plagiarise her speech]<br>
by Fiona MacDonald, ''Science Alert'', 20 July 2016

The reference is to a humorous [https://www.facebook.com/bob.rutledge/posts/10157263563780249 Facebook post] by McGill University physics professor Robert Rutledge. He notes that Trump representative Paul Manafort had argued in Melania's defense that "it's the English language, there are a limited number of words, so what if Melania Trump chose some of the same ones Michelle Obama did?"

From transcripts [http://www.vox.com/2016/7/19/12221566/melania-trump-michelle-obama appearing in ''Vox''], Rutledge identifies 14 key phrases ("values", "work hard", "for what you want in life", "word is your bond", "do what you say", "treat people with...respect", "pass [them] on to many generations", "Because we want our children", "in this nation", "to know", "the only limit", "your achievements", "your dreams", "willingness to work for them") that appear in both speeches, and observes that they also happen to appear in the same order. But 14! = 87,178,291,200. So even if Melania just happened to choose some of the same words as Michelle, he finds that there is less than one chance in 87 billion that they would appear in the same order.
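The arithmetic behind the "one chance in 87 billion" figure can be checked in a few lines. This is only a sketch of the counting step: the variable names are ours, and treating every ordering of the phrases as equally likely is an assumption built into the calculation rather than anything established about how speeches get written.

```python
import math

# Number of key phrases Rutledge identified in both speeches.
n_phrases = 14

# Number of distinct orders in which 14 phrases can be arranged.
orderings = math.factorial(n_phrases)

# Chance of matching one particular order, ASSUMING (as the calculation
# implicitly does) that all orderings are equally likely.
p_same_order = 1 / orderings

print(f"14! = {orderings:,}")
print(f"chance of the same order = {p_same_order:.3e}")
```

Changing <code>n_phrases</code> shows how quickly the count grows, and so how sensitive the headline number is to how many phrases one decides to call "key".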

'''Discussion'''<br>

#This is effectively computing a p-value. What assumptions are being made?<br>
#In any case, why is this not "the probability that Melania didn't plagiarize"?

Submitted by Bill Peterson

==Billion dollar lotteries==

[https://www.nytimes.com/2016/08/14/your-money/the-billion-dollar-lottery-jackpot-engineered-to-drain-your-wallet.html The billion dollar lottery jackpot: Engineered-to-drain-your-wallet]<br>
by Jeff Sommer, ''New York Times'', 12 August 2016

The article describes how lotteries have successfully boosted sales by readjusting odds in games like Powerball to generate ever-larger jackpots. It cites analyses from Salil Mehta's Statistical Ideas blog; see this post on [http://statisticalideas.blogspot.com/2016/04/a-losers-lottery.html A loser's lottery]. We read there:

<blockquote>
One should remember that the only objective for the Lottery, anywhere in the world, is not to make you rich. Contrary to their advertisements, the objective is not to show you a good time nor satisfy your dreams. Wasting your money is never a good time. The lottery’s only objective is to maximize the funds you pay for educational activities...

The whole scheme is an educational tax for those who instead could use a free education in probability theory (that’s where this blog comes in!)
</blockquote>

Indeed, here is a discussion of "[https://en.wikipedia.org/wiki/Neglect_of_probability neglect of probability]" that explains [http://meaningring.com/2016/03/28/neglect-of-probability-by-rolf-dobelli/ Why you’ll soon be playing Mega Trillions].

==Noise in polling==

Here is a series of articles written to help readers cope with the avalanche of polling results as the election approaches. The first was sent by Jeff Witmer to the Isolated Statisticians list:

:[https://www.nytimes.com/2016/07/18/upshot/confused-by-contradictory-polls-take-a-step-back.html?_r=1 Confused by Contradictory Polls? Take a Step Back]<br>
:by Nate Cohn, 'TheUpshot' blog, ''New York Times'', 20 September 2016

Included in Cohn's analysis is a simulation of 100 polls, generated under the assumption that Hillary Clinton has a 4 point lead over Donald Trump:

<center>[[File:2016PollSim.png | 500px]]</center>

The point of this illustration is that a lot of the apparent disagreement we see in polls taken around the same time might reflect nothing more than random sampling error.
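Readers who want to try this themselves could start from a sketch like the following. It is not Cohn's code: the function name <code>simulate_polls</code>, the sample size of 1000, the 48%/44% split (chosen only because it gives a 4 point lead), and the seed are all our assumptions, and each poll is treated as a simple random sample.

```python
import random

def simulate_polls(n_polls=100, n_respondents=1000,
                   p_clinton=0.48, p_trump=0.44, seed=109):
    """Return the Clinton-minus-Trump lead, in percentage points, for each
    simulated poll. All default values are illustrative assumptions."""
    rng = random.Random(seed)
    leads = []
    for _ in range(n_polls):
        clinton = trump = 0
        for _ in range(n_respondents):
            u = rng.random()
            if u < p_clinton:
                clinton += 1
            elif u < p_clinton + p_trump:
                trump += 1
            # otherwise: undecided or another candidate
        leads.append(100.0 * (clinton - trump) / n_respondents)
    return leads

leads = simulate_polls()
print("smallest lead:", min(leads), "largest lead:", max(leads))
print(sum(1 for x in leads if x < 0), "of", len(leads), "polls show Trump ahead")
```

Even though the true lead is fixed at 4 points in every run, the individual polls scatter around it, and re-running with a different seed shifts which polls look like outliers.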

The next article deals with some other sources of error.

:[https://www.nytimes.com/interactive/2016/09/20/upshot/the-error-the-polling-world-rarely-talks-about.html?_r=0 We gave four good pollsters the same raw data. They had four different results.]<br>
:by Nate Cohn, 'TheUpshot' blog, ''New York Times'', 20 September 2016

The common margin of error statements attached to polling reports are based on the formula for error in simple random sampling. Thus a survey of around 1000 people is said to have a margin of plus or minus 3 percentage points (1/√<span style="text-decoration: overline">1000</span> ≈ 0.03). But national political polls are not based on simple random samples. Polls presented in the ''New York Times'' are usually accompanied by a statement on "How the Poll was Conducted."
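As a quick check on the 3 point figure, here is a small sketch comparing the 1/√n rule of thumb with the usual 95% margin for a proportion near one half under simple random sampling. The function names are ours, and the choice of p = 0.5 and z = 1.96 are the standard textbook assumptions rather than anything specific to these polls.

```python
import math

def moe_rule_of_thumb(n):
    """Rule-of-thumb margin of error, 1/sqrt(n), as a proportion."""
    return 1 / math.sqrt(n)

def moe_95(n, p=0.5, z=1.96):
    """95% margin of error for a proportion p under simple random sampling."""
    return z * math.sqrt(p * (1 - p) / n)

n = 1000
print(f"rule of thumb: {100 * moe_rule_of_thumb(n):.2f} points")
print(f"95% margin:    {100 * moe_95(n):.2f} points")
```

The two agree closely because 1.96 × √0.25 is just under 1; both describe sampling error only.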

In a [https://www.nytimes.com/2016/09/17/us/how-the-new-york-times-cbs-news-poll-was-conducted.html recent example], we read

<blockquote>
The combined results have been weighted to adjust for variation in the sample relating to geographic region, sex, race, Hispanic origin, marital status, age, education and (for landline households) the number of adults and the number of phone lines. In addition, the sample was adjusted to reflect the percentage of the population residing in mostly Democratic counties, mostly Republican counties and counties more closely balanced politically....

Some results pertaining to the election are expressed in terms of a “probable electorate,” reflecting the probability of each individual’s voting on Election Day. This likelihood is estimated from responses to questions about registration, past voting, intention to vote, interest in the campaign and enthusiasm about voting in this year’s contest.
</blockquote>

The effect of these adjustments is not covered in the margin of sampling error. To gauge the impact, the Upshot did their own analysis of a poll with n=867 respondents, and asked four professional pollsters for their adjustments. The five results: Clinton +3, Clinton +1, Clinton +4, Trump +1, Clinton +1.

For comments on how surprised we should be, see Andrew Gelman's blog post [http://andrewgelman.com/2016/09/23/trump-1-in-florida-or-a-quick-comment-on-that-5-groups-analyze-the-same-poll-exercise/ Trump +1 in Florida; or, a quick comment on that “5 groups analyze the same poll” exercise]. For teachers, Shonda Kuiper of Grinnell College has developed extensive materials for [http://web.grinnell.edu/individuals/kuipers/stat2labs/weights.html classroom activities] on weighted data.
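To see why weighting choices can move a result, consider a toy example with a single weighting variable. Every number below is invented for illustration and comes from no actual poll; the group labels, the candidate "A", and the function names are likewise hypothetical. Real pollsters weight on many variables at once and also model likely voters, which this sketch does not attempt.

```python
# All figures are made up for illustration only.
# Each group: number of respondents and share supporting candidate A.
sample = {
    "college":    {"n": 500, "share_a": 0.55},
    "no_college": {"n": 500, "share_a": 0.40},
}

# Assumed share of each group in the target population (also made up).
population_share = {"college": 0.35, "no_college": 0.65}

def unweighted_share(sample):
    """Support for A when every respondent counts equally."""
    total = sum(g["n"] for g in sample.values())
    return sum(g["n"] * g["share_a"] for g in sample.values()) / total

def weighted_share(sample, population_share):
    """Support for A after reweighting groups to their population shares."""
    return sum(population_share[k] * g["share_a"] for k, g in sample.items())

raw = unweighted_share(sample)
adj = weighted_share(sample, population_share)
print(f"unweighted: {100 * raw:.2f}%   weighted: {100 * adj:.2f}%")
```

Two analysts who disagree about the right population shares would report different numbers from the same raw responses, which is the kind of discrepancy the Upshot exercise illustrates.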

For an extreme example of what can go wrong with weighting, see:

:[https://www.nytimes.com/2016/10/13/upshot/how-one-19-year-old-illinois-man-is-distorting-national-polling-averages.html How one 19-year-old Illinois man is distorting national polling averages]<br>
:by Nate Cohn, 'TheUpshot' blog, ''New York Times'', 20 September 2016

Finally, for good general advice on how to evaluate the quality of a poll, see

:[https://www.nytimes.com/2016/10/13/upshot/the-savvy-persons-guide-to-reading-the-latest-polls.html The savvy person’s guide to reading the latest polls]<br>
:by Nate Cohn, 'TheUpshot' blog, ''New York Times'', 12 October 2016

==Election post mortem==

Even with all of the collected wisdom about polling, the election results still surprised most professionals. Here are some commentaries on what happened.

:[http://fivethirtyeight.com/features/why-fivethirtyeight-gave-trump-a-better-chance-than-almost-anyone-else/ Why FiveThirtyEight gave Trump a better chance than almost anyone else]<br>
:by Nate Silver, Fivethirtyeight.com, 11 November 2016

While not predicting a Trump victory, Silver was still out of step with most other analyses. His last analysis before the election gave Trump a 29% chance of winning the electoral college. Silver was criticized in some circles for suggesting that there was any substantial chance of a Trump win.

:[http://www.nytimes.com/interactive/2016/11/13/upshot/putting-the-polling-miss-of-2016-in-perspective.html Putting the polling miss of the 2016 election in perspective]<br>
:by Nate Cohn, Josh Katz and Kevin Quealy, 'TheUpshot' blog, ''New York Times'', 13 November 2016

The results of the election were certainly stunning. But Hillary Clinton did win the popular vote, by about 1.5 percentage points rather than the 4 percentage points predicted by polls. The article notes that this difference does not exceed the size of normal polling errors. The real problem seems to be the state-level errors, which were historically high. Reproduced below are data from a graphic in the article giving the "average absolute difference between polling average and final vote in the ten states closest to the national average with at least three polls."

<center>
{| class="wikitable" style="text-align:center"
|-
! Year !! Difference
|-
| 1988 || 3.4 pts
|-
| 1992 || 3.4 pts
|-
| 1996 || 2.3 pts
|-
| 2000 || 1.8 pts
|-
| 2004 || 1.7 pts
|-
| 2008 || 1.7 pts
|-
| 2012 || 2.3 pts
|-
| 2016 || '''3.9 pts'''
|}
</center>

Bret Larget noted on the Isolated Statisticians list that the ''New York Times'' had an eerily prescient article the day before the election: [https://www.nytimes.com/interactive/2016/11/07/us/how-trump-can-win.html Donald Trump’s big bet on less educated whites] (7 November 2016).

==Trump succeeds where health is failing== | |||

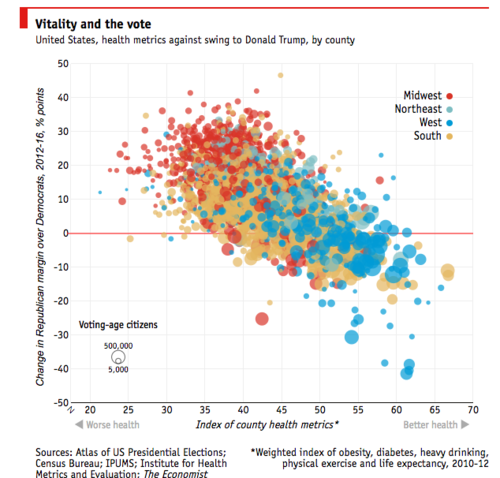

[http://www.economist.com/blogs/graphicdetail/2016/11/daily-chart-13 Daily chart: Trump succeeds where health is failing]<br> | |||

''Economist'', 21 November 2016 | |||

<center> [[File:Trump_health.png | 500px]] </center> | |||

Suggested by Peter Doyle | |||

==Statins and Alzheimer's==

[https://www.healthnewsreview.org/2016/12/statins-probably-dont-reduce-risk-alzheimers-disease-despite-headlines-say/ Why statins probably don’t reduce risk of Alzheimer’s disease, despite what headlines say]<br>
by Alan Cassels, ''HealthNewsReview'' blog, 14 December 2016

This is an informative post on the familiar theme that "association is not causation" (the lead-in references several items from Tyler Vigen's [https://www.fastcodesign.com/3030529/infographic-of-the-day/hilarious-graphs-prove-that-correlation-isnt-causation/5 spurious correlation] collection, which has been mentioned in previous Chance News installments).

The journal ''JAMA Neurology'' recently published results of a large (400,000 subject) observational study that compared the risk of Alzheimer's disease in patients with "high exposure" to statins vs. those with "low exposure." Higher use of statins was found to be associated with lower Alzheimer's risk. The results were widely covered in the media; several stories featured some version of this quotation from the [https://www.eurekalert.org/pub_releases/2016-12/uosc-cdl121216.php official news release]:

<blockquote>
We may not need to wait for a cure to make a difference for patients currently at risk of the disease. Existing drugs, alone or in combination, may affect Alzheimer’s risk.
</blockquote>

Readers who continued past the headlines were informed that this was not a randomized experiment, so it was premature to draw causal conclusions. Needless to say, leading with this information would not make for a captivating news story. HealthNewsReview cited the ''Daily Mail'' for the most sensational headline: [http://www.dailymail.co.uk/health/article-4025182/Could-statins-miracle-cure-Alzheimer-s-Taking-tablets-just-6-months-reduces-risk-15.html Could statins be the miracle cure for Alzheimer's? Taking the tablets for just 2 years reduces the risk by up to 15%].

The post also explains the problem of ''healthy user bias''. For illustration, it cites a 2009 study that found that patients who faithfully followed a statin regime were less likely to be involved in a car crash. Of course we didn't see headlines announcing that statins prevented car crashes. The point is that healthier people tend to exhibit a range of positive behaviors, which might reasonably include both sticking to their medications and being careful behind the wheel.

Finally, there is a nice discussion here of the distinction between relative risk and absolute risk. News stories tended to summarize the results of the study in terms of relative risk. Again quoting from the news release, “high exposure, defined as taking statins for at least six months in a given year during the study period was associated with a 15 percent decreased risk of Alzheimer’s disease for women and a 12 percent reduced risk for men.” In absolute terms, based on [http://jamanetwork.com/data/Journals/NEUR/0/noi160080f1.png this chart] from the journal article, the post computes a quick estimate that about 1.99% of non-statin users developed Alzheimer’s, compared to 1.5% of the high exposure group and 1.6% of the low exposure group (this ignores other risk factors accounted for in the original study). The differences are less than half a percentage point, suggesting that the drug might have helped about 1 person in 200. Moreover, any such benefit needs to be weighed against the risks associated with taking a drug.
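The "1 person in 200" figure follows from the crude percentages quoted above, as the short sketch below shows. The function and variable names are ours. The rates are unadjusted, so the crude relative reduction computed here need not match the adjusted 15 and 12 percent figures from the news release, and none of this arithmetic says anything about causation.

```python
# Crude Alzheimer's rates quoted in the post (unadjusted for other risk factors).
rates = {"non_users": 0.0199, "low_exposure": 0.016, "high_exposure": 0.015}

def absolute_risk_reduction(control, treated):
    """Difference in risk, as a proportion."""
    return control - treated

def relative_risk_reduction(control, treated):
    """Difference in risk as a fraction of the control-group risk."""
    return (control - treated) / control

def number_needed_to_treat(control, treated):
    """How many people must be treated for one to benefit, IF the whole
    difference were caused by the drug (a strong assumption here)."""
    return 1 / (control - treated)

arr = absolute_risk_reduction(rates["non_users"], rates["high_exposure"])
rrr = relative_risk_reduction(rates["non_users"], rates["high_exposure"])
nnt = number_needed_to_treat(rates["non_users"], rates["high_exposure"])

print(f"absolute difference: {100 * arr:.2f} percentage points")
print(f"crude relative difference: {100 * rrr:.1f}%")
print(f"about 1 person in {nnt:.0f}")
```

The same small absolute difference looks far more impressive when expressed as a relative change, which is why the choice of summary matters so much for headlines.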

Submitted by Bill Peterson

Latest revision as of 21:58, 7 August 2017
