Chance News 106: Difference between revisions


July 21, 2015 to September 12, 2015

==Quotations==

“[A]ccording to preliminary 2014 statistics from the National Transportation Safety Board, there were no fatal accidents on U.S. commercial airline flights .... But 419 people died in general aviation crashes, a figure that inflates the average American’s odds of dying in a plane crash, even though most of us don’t travel in small, private planes.” | |||

<div align=right>in: [http://blogs.wsj.com/numbers/behind-the-numbers-some-long-odds-2119/ ”What are the odds of disaster?”], <i>The Wall Street Journal</i>, August 15-16, 2015</div> | |||

Submitted by Margaret Cibes | |||

---- | |||

"The problem is not the inference in psychology it’s the psychology of inference. Some scientists have unreasonable expectations of replication of results and, unfortunately, many of those currently fingering p-values have no idea what a reasonable rate of replication should be." | |||

<div align=right> -- Stephen Senn, in: [http://www.statslife.org.uk/opinion/2114-journal-s-ban-on-null-hypothesis-significance-testing-reactions-from-the-statistical-arena Journal’s ban on null hypothesis significance testing: reactions from the statistical arena], <br> | |||

''StatsLife'' (Royal Statistical Society), 4 March 2015</div> | |||

Submitted by Bill Peterson | |||

---- | |||

'''Two "Holmes" quotes''' | |||

"It must be clearly recognized, however, that purely speculative ideas of this kind, specially invented to match the requirements, can have no scientific value until they acquire support from independent evidence."

<div align=right>--Arthur Holmes, a geologist researching continental drift</div> | |||

"It is a capital mistake to theorise before one has data. Insensibly, one begins to twist facts to suit theories, instead of theories to suit facts." | |||

<div align=right>--Sherlock Holmes (Scandal in Bohemia by Arthur Conan Doyle)</div> | |||

Both quotations appear on page 453 of John Gribbin's [https://books.google.com/books/about/The_Scientists.html?id=DLnCnCWecBIC book], ''The Scientists''. | |||

Submitted by Paul Alper | |||

---- | |||

"Believing a theory is correct because someone reported p less than .05 in a Psychological Science paper is like believing that a player belongs in the Hall of Fame because [he] hit .300 once in Fenway Park."

<div align=right>--Andrew Gelman, in his blog post [http://andrewgelman.com/2015/09/02/to-understand-the-replication-crisis-imagine-a-world-in-which-everything-was-published/ To understand the replication crisis, imagine a world in which everything was published], 2 September 2015</div> | |||

"[When the] effect size is tiny and measurement error is huge... [you’re] essentially trying to use a bathroom scale to weigh a feather—and the feather is resting loosely in the pouch of a kangaroo that is vigorously jumping up and down." | |||

<div align=right>--Gelman, [http://andrewgelman.com/2015/04/21/feather-bathroom-scale-kangaroo/ The feather, the bathroom scale, and the kangaroo], 21 April 2015</div> | |||

Submitted by Paul Alper | |||

---- | |||

"Psychology studies also suffer from a certain limitation of the study population. Journalists who find themselves tempted to write 'studies show that people ...' should try replacing that phrase with 'studies show that small groups of affluent psychology majors ...' and see if they still want to write the article." | |||

"Effectively, academia selects for outliers, and then we select for the outliers among the outliers, and then everyone's surprised that so many 'facts' about diet and human psychology turn out to be overstated, or just plain wrong." | |||

<div align=right>--Megan McArdle, in: [http://andrewgelman.com/2015/09/02/to-understand-the-replication-crisis-imagine-a-world-in-which-everything-was-published/ Why we fall for bogus research], ''Bloomberg View'', 31 August 2015</div> | |||

Submitted by Paul Alper | |||

==Forsooth==

<div align=right>
in: ''The Week'', July 24, 2015, page 17 (online at [http://theweek.com theweek.com] for subscribers).</div>

Submitted by Chris Andrews

----

<center>[[File:Obamacare.jpg|300px]]</center> | |||

<div align=right>[http://imgur.com/gallery/KgsYK "The math isn't that hard"], imgur.com, July 2015</div>

See amazing comments [http://imgur.com/gallery/KgsYK here], as bloggers try to figure out the arithmetic.<br> | |||

Submitted by Margaret Cibes at the suggestion of Sarah Bedichek | |||

---- | |||

“On another recent project for the energy modeler, the lighting system, with daylight responsive dimming and vacancy sensors, out-performed modeled performance by 100%.” | |||

<div align=right>in: [http://www.hpbmagazine.org/Case-Studies/Edith-Green-Wendell-Wyatt-Federal-Building-Portland-OR/ Edith Green-Wendell Wyatt Federal Building: Portland, OR: Optimizing a landmark], ''High Performing Buildings'', Summer 2015<br> | |||

</div> | |||

So--the actual lighting control system kept the lights off 100% of the time? Couldn’t they have achieved the same result at less cost by not installing the lights in the first place? | |||

Submitted by Marc & Carolyn Hurwitz | |||

---- | |||

Most Common Movie Title: Alice in Wonderland<br> | |||

Average IMDb Rating: 6.0376514<br> | |||

Standard Deviation: <b>1.2209091978981308</b><br> | |||

Average Movie Length: 100 minutes<br> | |||

Standard Deviation: <b>31.94308331064542</b><br> | |||

Average Movie Year: 1984<br> | |||

Standard Deviation: <b>25.087728495416965</b><br> | |||

<div align=right>Tweeted by Edward Tufte to [https://twitter.com/JamesGleick James Gleick’s twitter page], August 5, 2015</div> | |||

Submitted by Margaret Cibes at the suggestion of James Greenwood | |||

---- | |||

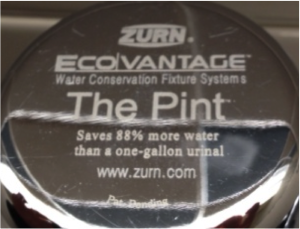

“Saves 88% more water than a one-gallon urinal.” | |||

<div align=right>--in: washrooms throughout Washington State Convention Center, JSM 2015</div> | |||

We are not the first to notice. The image below | |||

<center> | |||

[[File:pint_flush.png]] | |||

</center> | |||

appears with commentary at [http://thearmchairmba.com/tag/88-more-water/ What a urinal can teach us about statistics], "Armchair MBA" blog, 15 December 2014.

Submitted by Bill Peterson | |||

---- | |||

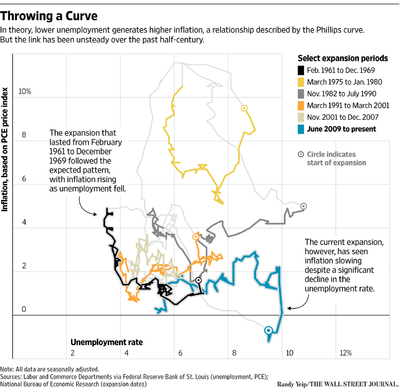

<center>[[File:PhillipsCurve.png|400px]]</center> | |||

<div align=right>Chart by Randy Yeip, in [http://www.wsj.com/articles/the-fed-has-a-theory-trouble-is-the-proof-is-patchy-1440352846 “The Fed Has a Theory”], <i>The Wall Street Journal</i>, August 23, 2015</div>

Submitted by Margaret Cibes | |||

---- | |||

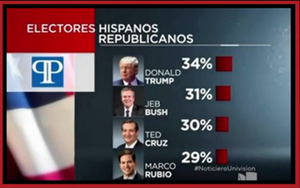

<center>[[File:TrumpFavorability.png|300px]]</center> | |||

<div align=right>Chris Hayes, [http://www.msnbc.com/all “All In”], MSNBC, September 3, 2015</div> | |||

This was a handout at a Donald Trump rally, in which Trump claimed, “I’m #1 with Hispanics.”<br> | |||

Chris Hayes pointed out that the poll had asked about favorability, not support relative to the other candidates, and that 2/3 of the respondents regarded him unfavorably.<br> | |||

Hayes: “It’s a pretty novel interpretation of poll results.” | |||

Submitted by Margaret Cibes | |||

---- | |||

“Included in my own large collection of dice, I have several that do in fact have sixes on all faces. I call them my beginner’s dice: for people who want to practice throwing double sixes.” | |||

<div align=right>David Hand in [http://www.amazon.com/Improbability-Principle-Coincidences-Miracles-Events/dp/0374535000/ref=sr_1_1?ie=UTF8&qid=1442326655&sr=8-1&keywords=improbability+principle <i>The Improbability Principle: Why Coincidences, Miracles, and Rare Events Happen Every Day</i>], 2015</div> | |||

Submitted by Margaret Cibes | |||

==Followup on the hot hand==

In Chance News 105, the last item was titled [https://www.causeweb.org/wiki/chance/index.php/Chance_News_105#Does_selection_bias_explain_the_.22hot_hand.22.3F Does selection bias explain the hot hand?]. It described how, in their July 6 article, Miller and Sanjurjo assert that to determine the probability of a head following a head in a fixed sequence, you may calculate the proportion of times a head is followed by a head for each possible sequence and then average those proportions, giving each sequence equal weight on the grounds that each possible sequence is equally likely to occur. I agree that each possible sequence is equally likely to occur. But I assert that it is illegitimate to weight each sequence equally, because some sequences offer more chances for a head to follow a head than others.

Let us assume, as Miller and Sanjurjo do, that we are considering the 14 possible sequences of four flips containing at least one head in the first three flips. A head is followed by another head in only one of the six sequences (see below) that contain only one head that could be followed by another, making the probability of a head being followed by another 1/6 for this set of six sequences. | |||

:{| class="wikitable" style="text-align:left"
|-
| TTHT || Heads follows heads 0 times
|-
| THTT || Heads follows heads 0 times
|-
| HTTT || Heads follows heads 0 times
|-
| TTHH || Heads follows heads 1 time
|-
| THTH || Heads follows heads 0 times
|-
| HTTH || Heads follows heads 0 times
|}

A head is followed by another head six out of 12 times in the six sequences (see below) that contain two heads that could be followed by another head, making the probability of a head being followed by another 6/12 = 1/2 for this set of six sequences. | |||

:{| class="wikitable" style="text-align:left"
|-
| THHT || Heads follows heads 1 time
|-
| HTHT || Heads follows heads 0 times
|-
| HHTT || Heads follows heads 1 time
|-
| THHH || Heads follows heads 2 times
|-
| HTHH || Heads follows heads 1 time
|-
| HHTH || Heads follows heads 1 time
|}

A head is followed by another head five out of six times in the two sequences (see below) that contain three heads that could be followed by another head, making the probability of a head being followed by another 5/6 for this set of two sequences.

:{| class="wikitable" style="text-align:left"
|-
| HHHT || Heads follows heads 2 times
|-
| HHHH || Heads follows heads 3 times
|}

An unweighted average of the 14 sequences gives | |||

:[(6 × 1/6) + (6 × 1/2) + (2 × 5/6)] / 14 = [17/3] / 14 = 0.405,

which is what Miller and Sanjurjo report. | |||

A weighted average of the 14 sequences gives | |||

:[(1)(6 × 1/6) + (2)(6 × 1/2) + (3)(2 × 5/6)] / [(1 × 6) + (2 × 6) + (3 × 2)] <br>

::= [1 + 6 + 5] / [6 + 12 + 6] = 12/24 = 0.50.

Using an unweighted average instead of a weighted average is the pattern of reasoning underlying the statistical artifact known as Simpson’s paradox. And as is the case with Simpson’s paradox, it leads to faulty conclusions about how the world works. | |||
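The two averages above can be checked by brute-force enumeration. The following is a minimal Python sketch, not part of the original submission; the helper name <code>head_followups</code> is an illustrative assumption. It lists the 14 sequences, counts for each one how many heads in the first three flips are followed by another head, and then forms both the unweighted and the weighted average.

```python
from fractions import Fraction
from itertools import product


def head_followups(seq):
    """Return (opportunities, successes) for one four-flip sequence.

    An opportunity is a head in the first three flips; a success is an
    opportunity whose next flip is also a head.
    """
    opportunities = sum(1 for flip in seq[:-1] if flip == "H")
    successes = sum(1 for a, b in zip(seq, seq[1:]) if a == "H" and b == "H")
    return opportunities, successes


# The 14 four-flip sequences with at least one head in the first three flips.
sequences = ["".join(s) for s in product("HT", repeat=4) if "H" in s[:3]]
counts = [head_followups(s) for s in sequences]

# Unweighted: average the per-sequence proportions, one vote per sequence.
unweighted = sum(Fraction(succ, opp) for opp, succ in counts) / len(counts)

# Weighted: pool all opportunities, so every head that can be followed counts once.
weighted = Fraction(sum(succ for _, succ in counts), sum(opp for opp, _ in counts))

print(len(sequences), unweighted, weighted)
```

Using exact fractions avoids rounding: the unweighted figure comes out as 17/42 (about 0.405) and the weighted figure as 1/2, matching the hand calculations.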

Submitted by Jeff Eiseman, University of Massachusetts | |||

==Quantitative literacy in ''The New York Review of Books''==

Mike Olinick sent a link to the following exchange: | |||

:[http://www.nybooks.com/articles/archives/2015/jun/04/poor-college/ The poor in college]<br> | |||

:by John S. Bowman, William Brigham, and Frank Robertson, reply by Christopher Jencks,<br> | |||

:''The New York Review of Books'', 4 June 2015 | |||

The discussion refers to two earlier ''NYR'' articles by Jencks: | |||

:[http://www.nybooks.com/articles/archives/2015/apr/02/war-poverty-was-it-lost/ The war on poverty: Was it lost?], 2 April 2015 | |||

:[http://www.nybooks.com/articles/archives/2015/apr/23/did-we-lose-war-poverty-ii/ Did we lose the war on poverty?—II], 23 April 2015 | |||

The letters from Brigham and Robertson both fault Jencks's presentation of percentage data. From Brigham: | |||

<blockquote> | |||

Professor Jencks (or his cited source) seems to have made the freshman error of confusing percentage points with percentage [“Did We Win the War on Poverty?—II,” NYR, April 23]. Rather than the bottom quarter of the income distribution having half the increase of the top quarter in college admissions, by the figures cited they had a 36 percent increase versus a 29 percent increase. That error is repeated further on vis-à-vis college graduation rates. Again, the bottom quarter did not have half the increase as the top but, rather, exactly the same: 14 percent. | |||

</blockquote> | |||

Similarly, Robertson writes: | |||

<blockquote> | |||

Jencks states that the percentage of upper-income students entering college rose from 51 to 66 percent between 1972 and 1992, while the percentage of low income students rose from 22 to 30 percent, “only about half as much.” However, the percentage increase needs to be calculated as a percentage of the starting figure. It is not simply the subtraction of the start from the final. Thus, the percentage increase of low-income students is actually 8/22, or 36 percent, while the increase among upper income students is 15/51, or only 29 percent. The error is repeated in Jencks’s discussion of graduation rates. | |||

</blockquote> | |||

Earlier in the article in question, in a discussion of Head Start, Jencks had written “… when they compared white siblings, those who attended Head Start were 22 percentage points more likely to finish high school and 19 percentage points more likely to enter college than those who did not attend Head Start.” So he certainly knows what percentage points mean. In his reply to Brigham and Robertson, he defends his college admissions comparison, arguing that the logic they propose leads to trouble, as follows:

<blockquote> | |||

But now suppose we say federal policy sought to reduce the fraction of students not attending college. Among low-income students, nonattendance declined from 78 to 70 percent, so the decline was 8/78, or 10 percent. Among high-income students, nonattendance declined from 49 to 34 percent, so the decline was 15/49, or 31 percent. Using this approach, therefore, high-income students gained three times as much as low-income students. The fact that measuring percentage declines in non-attendance leads to opposite conclusions from measuring percentage increases in attendance should serve as a clear warning: this approach to comparing changes among the two groups is misguided. | |||

</blockquote> | |||
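The competing calculations in this exchange are easy to reproduce. The sketch below is an illustration only (the function name <code>percent_change</code> is an assumption, not something from the letters); it takes the attendance rates quoted above and reports the change in percentage points, the relative change in attendance, and the relative change in nonattendance.

```python
def percent_change(start, end):
    """Relative change from start to end, as a percent of the starting value."""
    return 100.0 * (end - start) / start


# College attendance rates (percent) in 1972 and 1992, as quoted in the letters.
attendance = {"low income": (22, 30), "high income": (51, 66)}

for group, (start, end) in attendance.items():
    points = end - start                                    # percentage points
    rel_attend = percent_change(start, end)                 # Brigham/Robertson view
    rel_nonattend = percent_change(100 - start, 100 - end)  # Jencks's counterexample
    print(f"{group}: {points:+d} points, "
          f"attendance {rel_attend:+.0f}%, nonattendance {rel_nonattend:+.0f}%")
```

The same underlying data favor the low-income group on relative attendance (about 36% versus 29%) and the high-income group on relative nonattendance (about 10% versus 31% decline), while the percentage-point differences (8 versus 15) do not depend on which side of the ledger is measured.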

'''Discussion'''<br> | |||

Which is the correct way to describe the comparison? Or do you see pros and cons for each? | |||

==Predicting GOP debate participants==

Ethan Brown posted the following link on the Isolated Statisticians list:

:[http://www.nytimes.com/interactive/2015/07/21/upshot/election-2015-the-first-gop-debate-and-the-role-of-chance.html The first G.O.P. debate: Who’s in, who’s out and the role of chance]<br> | |||

:by Kevin Quealy and Amanda Cox, "Upshot" blog, ''New York Times'', 21 July 2015

Because of the large number of declared candidates (16 and growing at the time of the article), Fox News has limited participation in its August 6 debate to those who meet the [http://press.foxnews.com/2015/05/fox-news-and-facebook-partner-to-host-first-republican-presidential-primary-debate-of-2016-election/ following criterion]:

<blockquote>Must place in the top 10 of an average of the five most recent national polls, as recognized by FOX News leading up to August 4th at 5 PM/ET. Such polling must be conducted by major, nationally recognized organizations that use standard methodological techniques. | |||

</blockquote> | |||

But of course, polls are subject to sampling error. The ''NYT'' article uses simulation to illustrate how this could affect participation. Supposing the latest polling averages represent the "correct" values, they simulate 5 additional polls (as Ethan noted, this is a bootstrapping approach). The results demonstrate that this can affect who's in and who's out, as well as the location on the stage for those who do make the cut. | |||
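A rough version of this kind of simulation can be sketched in a few lines of Python. This is not the ''NYT'' code; it is a simplified illustration under stated assumptions: only the three candidates nearest the cutoff are tracked, their "true" shares are the July 30 averages quoted later in this item, and each of the five polls is assumed to have 480 respondents (a guess based on the roughly 2,400 total interviews mentioned in the Fox statement).

```python
import random
from collections import Counter

# Assumed "true" shares in percent for the candidates nearest the cutoff;
# all other responses are lumped into "Other".
TRUE_SHARES = {"Christie": 3.3, "Kasich": 2.7, "Perry": 2.3}
TRUE_SHARES["Other"] = 100.0 - sum(TRUE_SHARES.values())

POLL_SIZE = 480   # assumption: about 2,400 interviews spread over 5 polls
NUM_POLLS = 5


def simulate_average(rng):
    """Simulate NUM_POLLS polls; return each candidate's average share in percent."""
    names = list(TRUE_SHARES)
    weights = [TRUE_SHARES[n] for n in names]
    totals = Counter()
    for _ in range(NUM_POLLS):
        totals.update(rng.choices(names, weights=weights, k=POLL_SIZE))
    return {n: 100.0 * totals[n] / (POLL_SIZE * NUM_POLLS) for n in names}


def last_place_frequencies(trials=2000, seed=106):
    """Estimate how often each of the three candidates finishes last of the three."""
    rng = random.Random(seed)
    bubble = ["Christie", "Kasich", "Perry"]
    last_place = Counter()
    for _ in range(trials):
        avg = simulate_average(rng)
        # Exact ties in the averages are broken at random.
        loser = min(bubble, key=lambda n: (avg[n], rng.random()))
        last_place[loser] += 1
    return {n: last_place[n] / trials for n in bubble}


if __name__ == "__main__":
    for name, freq in last_place_frequencies().items():
        print(f"{name}: misses the cut in {freq:.1%} of simulated averages")
```

Even with the "true" ordering fixed, the candidate in 10th place misses the cut in a noticeable share of simulated averages, which is the article's point about the role of chance.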

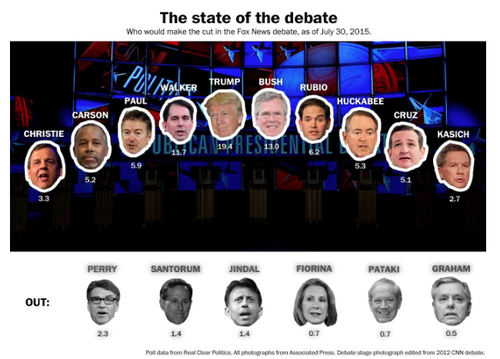

The ''Washington Post'' maintains a [http://www.washingtonpost.com/blogs/the-fix/wp/2015/06/02/whos-in-and-whos-out-in-the-first-republican-debate/?tid=trending_strip_1 State of the debate] widget that updates the current top 10 based on the most recent polling results. For July 30 we see | |||

<center>[[File:WP GOP.png | 500px]]</center> | |||

Note that the top figure is not a bar chart; it represents the positions of the candidates on the stage. | |||

===Updates===

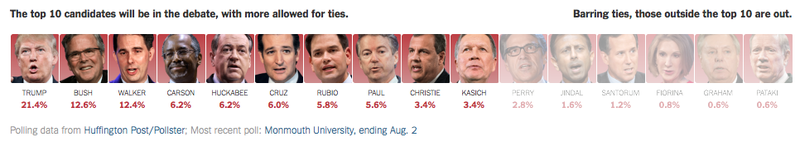

[http://www.nytimes.com/2015/08/04/upshot/2016-presidential-election-who-gets-into-the-republican-debate-rounding-could-decide.html?hp&action=click&pgtype=Homepage&module=first-column-region&region=top-news&WT.nav=top-news&abt=0002&abg=0 Who gets into the Republican debate: Rounding could decide]<br>

by Kevin Quealy, "Upshot" blog, ''New York Times'', 3 August 2015 | |||

The article points out that Fox has not specified whether or how it will round the polling figures. For example, the 9th, 10th and 11th places as of July 30 (shown in the display above) were Chris Christie (3.3%), John Kasich (2.7%) and Rick Perry (2.3%). These are all within a one percentage point range, but Christie and Kasich are currently in the top 10, while Perry is not. More recent data presented in the article have Christie and Kasich at 3.8% and Perry at 2.8%, a tie if figures are rounded to 3%.

<center>[[File:Upshot_GOP.png | 800px]]</center> | |||

Quealy wonders if Fox might then consider having 11 debaters.

The ''NYT'' reported on August 4 that [http://www.nytimes.com/2015/08/05/us/politics/election-2016-republican-debate.html John Kasich is in, Rick Perry Is out in first Republican debate]. The article quotes a statement from Fox News: | |||

<blockquote> | |||

Each poll has a different margin of error, and averaging requires a distinct test of statistical significance. Given the over 2,400 interviews contained within the five polls, from a purely statistical perspective it is at least 90 percent likely that the 10th place Kasich is ahead of the 11th place Perry.

</blockquote> | |||

Summing all this up, Justin Wolfers wrote on "The Upshot" blog that [https://www.nytimes.com/2015/08/07/upshot/why-fox-failed-statistics-in-explaining-its-gop-debate-decision.html Fox failed statistics in explaining its G.O.P. debate decision].

'''Discussion'''<br> | |||

How do you think Fox arrived at their "90 percent likely" statement? | |||

(For a dissenting opinion, see [http://www.nytimes.com/2015/08/07/upshot/why-fox-failed-statistics-in-explaining-its-gop-debate-decision.html Fox failed statistics in explaining its G.O.P. debate decision] by Justin Wolfers in "The Upshot" blog, ''New York Times'', 6 August 2015). | |||

Submitted by Bill Peterson | |||

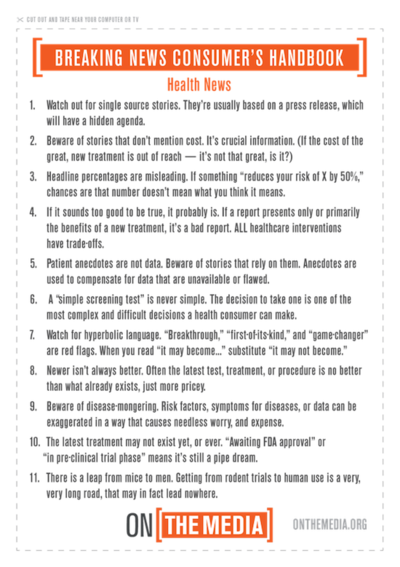

==How to evaluate health studies==

We all know that, as general as statistics is, subject matter knowledge and familiarity are all-important. Here is a useful link for understanding and critically evaluating health news:

:[http://www.healthnewsreview.org/2015/07/nprs-on-the-media-with-a-skeptics-guide-to-health-newsdiet-fads/ NPR’s On the Media with a skeptic’s guide to health news/diet fads]<br> | |||

:by Gary Schwitzer, HealthNewsReview.org, 31 July 2015

From which we find a consumer’s handbook for evaluating a health study: | |||

<center>[[File:OnTheMedia healthnews.png | 400px]]</center> | |||

The On the Media web site also contains a [https://www.wnyc.org/widgets/ondemand_player/onthemedia/#file=%2Faudio%2Fxspf%2F520523%2F lengthy radio interview] dealing with most of the above items. | |||

See also: | |||

: [http://www.nytimes.com/2015/08/18/upshot/how-to-know-whether-to-believe-a-health-study.html?rref=upshot How to Know Whether to Believe a Health Study]<br> | |||

: by Austin Frakt, "Upshot" blog, ''New York Times'', 17 August 2015 | |||

One of the links there is to another handbook, [http://cdn.journalism.cuny.edu/blogs.dir/422/files/2012/04/Covering-Medical-Research.pdf Covering Medical Research], written for journalists by Gary Schwitzer.

'''Discussion''' | |||

#Although Healthnewsreview.org has often mentioned this in the past, somehow the above consumer’s handbook failed to list “surrogate criterion” as a pitfall. Search Chance News and elsewhere to see why it belongs in a consumer’s handbook. | |||

#Number 3 on the above list, risk reduction, depends on what is the denominator of a fraction. It is often unclear as to whether relative risk or absolute risk is being quoted. That is, the comparison might be to a/b or to (a/c) / (b/d) with all numbers positive and c > a and d > b. With this in mind, show that while Bill Gates gives more absolutely to charity than you do, nevertheless you give more relatively than he does. Relate this to risk reduction. | |||

#Number 6 criticizes the notion of a “simple screening test.” Go to [https://en.wikipedia.org/wiki/Screening_(medicine) Screening (medicine)] (Wikipedia) and its subsection, [https://en.wikipedia.org/wiki/Screening_(medicine)#Limitations_of_screening Limitations of screening] to see why a simple screening test may be dangerous to your health. | |||

#For a discussion of Number 9, see [https://en.wikipedia.org/wiki/Disease_mongering Disease mongering] (Wikipedia) and [https://www.ncbi.nlm.nih.gov/pmc/articles/PMC1434508 The fight against disease mongering] (PLoS Medicine). | |||

#Relative risk vs. absolute risk and survival rates can be trickier than one might imagine at first glance. For an entertaining and informative discussion on how easy it is to be misled, see Dr. Gilbert Welch's [https://www.youtube.com/watch?v=rcHQElKhWFc video presentation] entitled, "The Two Most Misleading Numbers in Medicine."
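
The distinction between relative and absolute risk reduction raised in the questions above can be made concrete with a short calculation. The sketch below is illustrative only: the function name <code>risk_summary</code> and the trial counts (20 events per 1,000 untreated versus 10 per 1,000 treated) are hypothetical, not taken from any study cited here.

```python
def risk_summary(control_events, control_n, treated_events, treated_n):
    """Compare two groups by absolute and relative risk reduction."""
    control_risk = control_events / control_n
    treated_risk = treated_events / treated_n
    absolute_reduction = control_risk - treated_risk
    relative_reduction = absolute_reduction / control_risk
    # Number needed to treat is the reciprocal of the absolute reduction.
    nnt = float("inf") if absolute_reduction == 0 else 1.0 / absolute_reduction
    return {
        "control_risk": control_risk,
        "treated_risk": treated_risk,
        "absolute_reduction": absolute_reduction,
        "relative_reduction": relative_reduction,
        "number_needed_to_treat": nnt,
    }


# Hypothetical trial: 20 events per 1,000 untreated, 10 per 1,000 treated.
summary = risk_summary(20, 1000, 10, 1000)
for key, value in summary.items():
    print(f"{key}: {value:.3f}")
```

With these made-up numbers a headline could truthfully say "risk cut in half" (relative reduction of 50%) while the absolute reduction is one percentage point, so about 100 people would need to be treated to prevent one event.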

Submitted by Paul Alper | |||

==Lightning and the lottery==

[http://www.ctvnews.ca/canada/man-who-survived-lightning-strike-wins-1m-jackpot-with-co-worker-1.2478542 Man who survived lightning strike wins $1M jackpot with co-worker]<br> | |||

CTV News (Canada), 20 July 2015 | |||

A Canadian man who survived a lightning strike at age 14 has just won a $1 million prize in the Atlantic Lotto, a 6/49 lottery game. Compounding the coincidence, his daughter is also a lightning survivor. This is, of course, just the kind of situation for which newspapers love to print astronomical odds. By combining the 1 in 13,983,816 chance of winning the Lotto with the reported (for Canada) 1 in a million risk of being struck by lightning, a local math professor came up with the following: "By assuming that these events happened independently … so probability of lotto … times another probability of lightning – since there are two people that got hit by lightning – we get approximately 1 in 2.6 trillion."
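
As a quick check on the arithmetic, here is a minimal Python sketch (an illustration, not the professor's calculation) that recomputes the 6/49 odds and then multiplies the two figures quoted above under the stated independence assumption, with the lightning probability applied twice.

```python
from math import comb

# Number of ways to choose 6 numbers from 49: the 6/49 jackpot odds.
lotto_outcomes = comb(49, 6)          # 13,983,816

# Reported annual lightning risk for Canada, as quoted in the story.
lightning_odds = 1_000_000

# Independence assumption: one lotto win times two lightning strikes.
combined = lotto_outcomes * lightning_odds ** 2

print(f"Lotto: 1 in {lotto_outcomes:,}")
print(f"Combined under independence: 1 in {combined:.3g}")
```

Multiplying these particular inputs gives roughly 1 in 1.4 × 10<sup>19</sup>, far longer odds than the quoted 1 in 2.6 trillion, so the professor's figure evidently rests on inputs other than the two numbers given here.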

The story is amusing in light of the often-heard claim that one's chances of winning the lottery are the same as being struck by lightning, a comparison of dubious utility. A quick web search yields the following figures. According to the [http://www.lightningsafety.noaa.gov/fatalities.shtml National Weather Service], there have been 24 US lightning deaths so far in 2015 (since 2010, the annual totals have been in the twenties). The accompanying map shows that one of the 2015 victims was from Texas; on the other hand the [http://www.txlottery.org/export/sites/lottery/Winners/Winners_Gallery/ Winner's Gallery] from the Texas Lottery displays 40 photos of happy winners. To be fair, this includes some minor games in addition to the large jackpots that people might typically associate with the phrase "winning the lottery." For more on this see the third discussion question below.

'''Discussion''' | |||

#The 1 in a million figure given for lightning risk is presumably an annual figure. But the man in the story was struck as a teenager. How does this affect the calculation?<br><br>

#From the CTV story, we learn that both the winner and his daughter were struck in "eerily similar" scenarios, as they were tying up boats on a lake. How does this affect your probability assessment? (It may be instructive to look at the table of locations and activities for the US data on the Weather Service web site.)<br><br> | |||

#In his blog post [https://talkingaboutnumbers.wordpress.com/2011/02/28/how-many-lottery-winners-are-there-in-a-year/ How many lottery winners are there in a year?], Dan Ma presents a more careful attempt to count lottery winners. Ma reports that data are not easy to come by. Looking at the 26-year period from the introduction of the California lottery until 2011, he counts 257 prizes of $1 million or more. This gives an average of about 10 per year. From this, he estimates that the total for the US is likely in the hundreds. Comment on the comparison with lightning strikes. (Note: the mortality rate from lightning strikes is [https://en.wikipedia.org/wiki/Lightning_strike#Lightning.27s_interaction_with_the_body estimated] as 10-30%.)

Submitted by Bill Peterson | |||

==Cautions on hypothesis testing==

[http://www.nature.com/news/scientific-method-statistical-errors-1.14700 Scientific method: Statistical errors]<br> | |||

by Regina Nuzzo, ''Nature News'', 12 February 2014 | |||

The subtitle is "''P'' values, the 'gold standard' of statistical validity, are not as reliable as many scientists assume." We read: | |||

<blockquote> | |||

P values have always had critics. In their almost nine decades of existence, they have been likened to mosquitoes (annoying and impossible to swat away), the emperor's new clothes (fraught with obvious problems that everyone ignores) and the tool of a “sterile intellectual rake” who ravishes science but leaves it with no progeny[http://www.nature.com/news/scientific-method-statistical-errors-1.14700#b3]. One researcher suggested rechristening the methodology “statistical hypothesis inference testing”[http://www.nature.com/news/scientific-method-statistical-errors-1.14700#b3], presumably for the acronym it would yield. | |||

</blockquote> | |||

Later on we have: | |||

<blockquote> | |||

But while the rivals feuded — Neyman called some of Fisher's work mathematically “worse than useless”; Fisher called Neyman's approach “childish” and “horrifying [for] intellectual freedom in the west” — other researchers lost patience and began to write statistics manuals for working scientists. And because many of the authors were non-statisticians without a thorough understanding of either approach, they created a hybrid system that crammed Fisher's easy-to-calculate P value into Neyman and Pearson's reassuringly rigorous rule-based system. This is when a P value of 0.05 became enshrined as 'statistically significant', for example. “The P value was never meant to be used the way it's used today,” says [Steven] Goodman. | |||

</blockquote> | |||

I have always bemoaned the conflation of exploratory and confirmatory: | |||

<blockquote> | |||

Such practices have the effect of turning discoveries from exploratory studies — which should be treated with scepticism — into what look like sound confirmations but vanish on replication. | |||

</blockquote> | |||

Submitted by Paul Alper | |||

===More on reproducibility===

For much more on these ideas from ''Nature'', see the Special Issue entitled [http://www.nature.com/nature/focus/reproducibility/index.html Challenges in irreproducible research]. | |||

In a related vein, Jeff Witmer sent the following link to the Isolated Statisticians list: [http://www.sciencemag.org/content/349/6251/aac4716 Estimating the reproducibility of psychological science], from ''Science'', 28 August 2015. As Jeff noted, a number of interesting graphs and tables accompany the article. For teaching purposes, the site includes a [http://www.sciencemag.org/content/349/6251/aac4716/F1.expansion.html link to a Powerpoint slide] of a key summary plot of original study effect size versus replication effect size. | |||

Our quotations section above includes a number of items related to reproducibility. From the Conclusions section of the ''Science'' abstract we have the following:

<blockquote> | |||

Reproducibility is not well understood because the incentives for individual scientists prioritize novelty over replication. Innovation is the engine of discovery and is vital for a productive, effective scientific enterprise. However, innovative ideas become old news fast. Journal reviewers and editors may dismiss a new test of a published idea as unoriginal. The claim that “we already know this” belies the uncertainty of scientific evidence. Innovation points out paths that are possible; replication points out paths that are likely; progress relies on both. | |||

</blockquote> | |||

==Help for the aging brain?==

[http://www.minnpost.com/second-opinion/2015/08/omega-3-supplements-and-exercise-have-no-protective-effect-aging-brain-studie Omega-3 supplements and exercise have no protective effect on the aging brain, studies find]<br> | |||

by Susan Perry, ''MinnPost'', 26 August 2015

Reporting on the results of two randomized trials published in JAMA, Perry writes, "Neither exercise nor omega-3 supplements has a protective effect on the brains of older adults, according to the results of two large randomized controlled studies published Tuesday [August 25, 2015] in JAMA." | |||

The details of the two studies may be found (behind a pay wall) at | |||

*[http://jama.jamanetwork.com/article.aspx?articleid=2429713 Effect of omega-3 fatty acids, lutein/zeaxanthin, or other nutrient supplementation on cognitive function] | |||

*[http://jama.jamanetwork.com/article.aspx?articleid=2429712 Effect of a 24-month physical activity intervention vs health education on cognitive outcomes in sedentary older adults] | |||

For the omega-3 study, Perry points out that | |||

<blockquote> | |||

Half of the [approximately 3500] participants were given supplements with omega-3, a fatty acid found most abundantly in fish, but also in flaxseed, walnuts, soy products and a few other plant sources. The other half took a placebo. All had their cognitive skills tested before the study started and then twice more, at two-year intervals. | |||

At the end of five years, no difference in cognitive abilities was found between the groups. | |||

</blockquote> | |||

With regard to the exercise study, she reports that | |||

<blockquote> | |||

After two years, the [1635] participants’ cognitive function was assessed through a series of tests. No significant differences in scores were found between the two groups [exercise or health education]. | |||

</blockquote> | |||

Perry asks, | |||

<blockquote> | |||

Do these results mean that exercising and eating nutrient-rich healthful foods (not supplements) is a waste of time and effort for older adults?

</blockquote> | |||

She concludes, | |||

<blockquote> | |||

Absolutely not, as the authors of a JAMA [http://jama.jamanetwork.com/article.aspx?articleid=2429694 editorial] that accompanies the studies stress. While these two studies "failed to demonstrate significant cognitive benefits, these results should not lead to nihilism involving lifestyle factors in older adults," they write. | |||

</blockquote> | |||

'''Discussion''' | |||

#For what it is worth, the nutrient study (omega-3 and other supplements) reported p-values of .66 and .63 regarding the difference between treatment and control. What sort of reaction follows from those numbers? | |||

#For what it is worth, the exercise study (of several different cognitive tests) reported p-values of .97 and .84 regarding the difference between treatment and control. Again, what sort of reaction follows from those numbers? | |||

#The studies mentioned in JAMA involved elderly people who presumably by virtue of age have already declined. The editorial asserts, “It is likely the biggest gains in reducing the overall burden of dementia will be achieved through policy and public health initiatives promoting primary prevention of cognitive decline rather than efforts directed toward individuals who have already developed significant cognitive deficits.” The editorial lauds life-long adherence to the so-called Mediterranean Diet as a way of staying mentally and physically healthy. For a more caustic look at the Mediterranean Diet see [https://www.causeweb.org/wiki/chance/index.php/Chance_News_92#Mediterranean_diet this discussion] from Chance News 92. | |||

Submitted by Paul Alper | |||

==Scoring standardized-test essays== | |||

[http://www.amazon.com/Making-Grades-Misadventures-Standardized-Industry/dp/098170915X <i>Making the Grades</i>], by Todd Farley, 2009<br> | |||

Todd Farley worked in the standardized-testing industry for 15 years, beginning while he was in grad school in Iowa in the mid-1990s. His book is subtitled “My Misadventures in the Standardized Testing Industry.”<br> | |||

He describes the main issue for scorers as not “whether or not you appreciate or comprehend an essay” but rather “whether or not you can formulate exactly the same opinion about it as do all the people sitting around you.” And he describes one scoring scale as “flopp[ing] about like a puppy on a frozen pond.”<br> | |||

Early on in his career, a table leader explained the “Mystery of High Reliability”: | |||

<blockquote>“It’s simple …. If I go into the system and see you gave a 0 to some student response and another scorer gave it a 1, I change one of the scores.” …. “The computer counts it as a disagreement only as long as the scores don’t match. Once I change one of the scores, the reliability number goes up.”<br> | |||

“The reliability numbers aren’t legit?” I asked.<br> | |||

“The reliability numbers are what we make them,” he said. ….<br> | |||

“Man,” I said, “I … thought we were in the business of <i>education</i>.” ….<br> | |||

“Maybe,” he said. “But I’d say we are in the <i>business</i> of education.”</blockquote> | |||

And later in his career, a trainer expressed concern about the distribution of scores: | |||

<blockquote>“It seems we haven’t been scoring correctly.” …. “[O]ur numbers don’t match up with what the psychometricians predicted.” …. “They need us to find more 1’s.” </blockquote> | |||

Farley’s reaction: | |||

<blockquote>I assumed that if the psychometricians could tell, without actually looking at the student responses, there should be more 1’s, I figured there should be more 1’s.</blockquote> | |||

Submitted by Margaret Cibes | |||

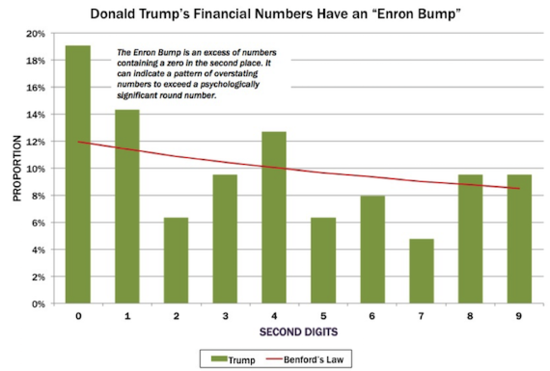

==Trump meets Benford== | |||

[http://www.salon.com/2015/08/20/the_truth_about_donald_trumps_money_the_scientific_case_for_doubting_his_fantastical_claims/ The truth about Donald Trump’s money: The scientific case for doubting his fantastical claims]<br> | |||

by William Poundstone, ''Salon'', 20 August 2015 | |||

Throughout his presidential campaign, Donald Trump has repeatedly claimed that his personal wealth is on the order of $10 billion. But not everyone believes him. For example, Poundstone cites an [http://www.forbes.com/sites/erincarlyle/2015/06/16/trump-exaggerating-his-net-worth-by-100-in-presidential-bid/ analysis] by ''Forbes'' that puts the true figure closer to $4 billion. | |||

In the present article, Poundstone presents a statistical reason to suspect Trump of exaggerating, based on Benford's Law. As background, he considers the case of Enron: | |||

<blockquote> | |||

Enron was notorious for claiming revenues and earnings that narrowly exceeded round numbers. For instance, in 1997 Enron reported revenues of $20.373 billion. A number like $20-plus billion “feels” bigger than $19 billion, say. So Enron’s accountants nipped and tucked and found ways to claim they’d just beaten a psychologically significant threshold. This boosted Enron’s stock price, up until the company crashed and burned in 2001. | |||

</blockquote> | |||

When numbers have been massaged in this way, the result is an overabundance of zeros as second digits, and the digit distribution will deviate from what is expected via Benford. | |||

The application of Benford's Law to detect accounting fraud has been [http://www.nigrini.com championed by Mark Nigrini]. By now, many people are familiar with the Benford [https://plus.maths.org/content/looking-out-number-one distribution for the leading digit] of "naturally occurring numbers." | |||

<center><math> p(x) = \log_{10}(1 + 1/x), \quad x=1,2,\ldots,9.</math></center> | |||

Perhaps less well-known is that the logarithmic law leads to distributions for all digits. For example, the joint distribution of the first and second digits is | |||

<center> | |||

<math> p(x,y) = \log_{10}(1 + 1/(10x+y)), \quad x=1,2,\ldots,9; \,y = 0, \ldots, 9.</math> | |||

</center> | |||

Summing on ''x'' leads to the marginal distribution for the second digit. If an empirical distribution of second digits deviates from this there may be reason for suspicion. As Poundstone notes, | |||

it is not necessary to do the calculations by hand, since Nigrini has made freely available [http://www.nigrini.com/BenfordsLaw/NigriniCycle.xlsx a spreadsheet] that automates such analyses. In the case of Trump's financial reports, there is evidence of exaggeration, as shown by this plot from the article. | |||

<center> | |||

[[File:Trump.png | 550px]] | |||

</center> | |||

(See [https://www.causeweb.org/wiki/chance/index.php/Chance_News_100#Anchors.2C_ultimatums_and_randomization Anchors, ultimatums and randomization] from Chance News 100 | |||

for additional discussion of Poundstone's work). | |||

Submitted by Bill Peterson | |||

Latest revision as of 16:04, 14 August 2018

July 21, 2015 to September 12, 2015

Quotations

“[A]ccording to preliminary 2014 statistics from the National Transportation Safety Board, there were no fatal accidents on U.S. commercial airline flights .... But 419 people died in general aviation crashes, a figure that inflates the average American’s odds of dying in a plane crash, even though most of us don’t travel in small, private planes.”

Submitted by Margaret Cibes

"The problem is not the inference in psychology it’s the psychology of inference. Some scientists have unreasonable expectations of replication of results and, unfortunately, many of those currently fingering p-values have no idea what a reasonable rate of replication should be."

StatsLife (Royal Statistical Society), 4 March 2015

Submitted by Bill Peterson

Two "Holmes" quotes

"It must be clearly recognized , however, that purely speculative ideas of this kind, specially invented to match the requirements, can have no scientific value until they acquire support from independent evidence."

"It is a capital mistake to theorise before one has data. Insensibly, one begins to twist facts to suit theories, instead of theories to suit facts."

Both quotations appear on page 453 of John Gribbin's book, The Scientists.

Submitted by Paul Alper

"Believing a theory is correct because someone reported p less than .05 in a Psychological Science paper is like believing that a player belongs in the Hall of Fame because [he] hit .300 once in Fenway Park."

"[When the] effect size is tiny and measurement error is huge... [you’re] essentially trying to use a bathroom scale to weigh a feather—and the feather is resting loosely in the pouch of a kangaroo that is vigorously jumping up and down."

Submitted by Paul Alper

"Psychology studies also suffer from a certain limitation of the study population. Journalists who find themselves tempted to write 'studies show that people ...' should try replacing that phrase with 'studies show that small groups of affluent psychology majors ...' and see if they still want to write the article."

"Effectively, academia selects for outliers, and then we select for the outliers among the outliers, and then everyone's surprised that so many 'facts' about diet and human psychology turn out to be overstated, or just plain wrong."

Submitted by Paul Alper

Forsooth

You really are old as you feel

"Some of the subjects 'aged physiologically not at all [while] at the other extreme there were folks aging two to three times as much.'"

Submitted by Chris Andrews

See amazing comments here, as bloggers try to figure out the arithmetic.

Submitted by Margaret Cibes at the suggestion of Sarah Bedichek

“On another recent project for the energy modeler, the lighting system, with daylight responsive dimming and vacancy sensors, out-performed modeled performance by 100%.”

So--the actual lighting control system kept the lights off 100% of the time? Couldn’t they have achieved the same result at less cost by not installing the lights in the first place?

Submitted by Marc & Carolyn Hurwitz

Most Common Movie Title: Alice in Wonderland

Average IMDb Rating: 6.0376514

Standard Deviation: 1.2209091978981308

Average Movie Length: 100 minutes

Standard Deviation: 31.94308331064542

Average Movie Year: 1984

Standard Deviation: 25.087728495416965

Submitted by Margaret Cibes at the suggestion of James Greenwood

“Saves 88% more water than a one-gallon urinal.”

We are not the first to notice. The image below appears with commentary at What a urinal can teach us about statistics, "Armchair MBA" blog, 15 December 2014

Submitted by Bill Peterson

Submitted by Margaret Cibes

This was a handout at a Donald Trump rally, in which Trump claimed, “I’m #1 with Hispanics.”

Chris Hayes pointed out that the poll had asked about favorability, not support relative to the other candidates, and that 2/3 of the respondents regarded him unfavorably.

Hayes: “It’s a pretty novel interpretation of poll results.”

Submitted by Margaret Cibes

“Included in my own large collection of dice, I have several that do in fact have sixes on all faces. I call them my beginner’s dice: for people who want to practice throwing double sixes.”

Submitted by Margaret Cibes

Followup on the hot hand

In Chance News 105, the last item was titled Does selection bias explain the hot hand? It described how in their July 6 article, Miller and Sanjurjo assert that to determine the probability of a heads following a heads in a fixed sequence, you may calculate the proportion of times a head is followed by a head for each possible sequence and then compute the average proportion, giving each sequence an equal weighting on the grounds that each possible sequence is equally likely to occur. I agree that each possible sequence is equally likely to occur. But I assert that it is illegitimate to weight each sequence equally because some sequences offer more chances than others for a head to follow a head.

Let us assume, as Miller and Sanjurjo do, that we are considering the 14 possible sequences of four flips containing at least one head in the first three flips. A head is followed by another head in only one of the six sequences (see below) that contain only one head that could be followed by another, making the probability of a head being followed by another 1/6 for this set of six sequences.

- TTHT: heads follows heads 0 times

- THTT: heads follows heads 0 times

- HTTT: heads follows heads 0 times

- TTHH: heads follows heads 1 time

- THTH: heads follows heads 0 times

- HTTH: heads follows heads 0 times

A head is followed by another head six out of 12 times in the six sequences (see below) that contain two heads that could be followed by another head, making the probability of a head being followed by another 6/12 = 1/2 for this set of six sequences.

- THHT: heads follows heads 1 time

- HTHT: heads follows heads 0 times

- HHTT: heads follows heads 1 time

- THHH: heads follows heads 2 times

- HTHH: heads follows heads 1 time

- HHTH: heads follows heads 1 time

A head is followed by another head five out of six times in the two sequences (see below) that contain three heads that could be followed by another head, making the probability of a head being followed by another 5/6 for this set of two sequences.

- HHHT: heads follows heads 2 times

- HHHH: heads follows heads 3 times

An unweighted average of the 14 sequences gives

- [(6 × 1/6) + (6 × 1/2) + (2 × 5/6)] / 14 = [17/3] / 14 = 0.405,

which is what Miller and Sanjurjo report. A weighted average of the 14 sequences gives

- [(1)(6 × 1/6) + (2)(6 × 1/2) + (3)(2 × 5/6)] / [(1×6) + (2 × 6) + (3 × 2)]

- = [1 + 6 + 5] / [6 + 12 + 6] = 12/24 = 0.50.

Using an unweighted average instead of a weighted average is the pattern of reasoning underlying the statistical artifact known as Simpson’s paradox. And as is the case with Simpson’s paradox, it leads to faulty conclusions about how the world works.
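The two averages above can be checked by brute-force enumeration. The following is a minimal Python sketch, assuming only the setup described in the text (four fair flips, keeping the 14 sequences with at least one head among the first three flips); the function and variable names are illustrative and do not come from Miller and Sanjurjo's paper.

```python
from fractions import Fraction
from itertools import product


def heads_after_heads(seq):
    """Return (opportunities, successes) for one sequence of 'H'/'T' flips.

    An opportunity is a head in any position but the last;
    a success is such a head that is immediately followed by another head.
    """
    opportunities = sum(1 for flip in seq[:-1] if flip == "H")
    successes = sum(1 for a, b in zip(seq, seq[1:]) if a == "H" and b == "H")
    return opportunities, successes


# All 16 sequences of four flips, then the 14 with a head in the first three.
sequences = ["".join(s) for s in product("HT", repeat=4)]
eligible = [s for s in sequences if "H" in s[:3]]
counts = [heads_after_heads(s) for s in eligible]

# Unweighted: average the per-sequence proportions, one vote per sequence.
unweighted = sum(Fraction(k, n) for n, k in counts) / len(counts)

# Weighted: pool all opportunities, one vote per head that could be followed.
weighted = Fraction(sum(k for _, k in counts), sum(n for n, _ in counts))

print(len(eligible), unweighted, float(unweighted), weighted)
```

Exact fractions are used so that the two results can be compared with the hand calculation without rounding error.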

Submitted by Jeff Eiseman, University of Massachusetts

Quantitative literacy in The New York Review of Books

Mike Olinick sent a link to the following exchange:

- The poor in college

- by John S. Bowman, William Brigham, and Frank Robertson, reply by Christopher Jencks,

- The New York Review of Books, 4 June 2015

The discussion refers to two earlier NYR articles by Jencks:

- The war on poverty: Was it lost?, 2 April 2015

- Did we lose the war on poverty?—II, 23 April 2015

The letters from Brigham and Robertson both fault Jencks's presentation of percentage data. From Brigham:

Professor Jencks (or his cited source) seems to have made the freshman error of confusing percentage points with percentage [“Did We Win the War on Poverty?—II,” NYR, April 23]. Rather than the bottom quarter of the income distribution having half the increase of the top quarter in college admissions, by the figures cited they had a 36 percent increase versus a 29 percent increase. That error is repeated further on vis-à-vis college graduation rates. Again, the bottom quarter did not have half the increase as the top but, rather, exactly the same: 14 percent.

Similarly, Robertson writes:

Jencks states that the percentage of upper-income students entering college rose from 51 to 66 percent between 1972 and 1992, while the percentage of low income students rose from 22 to 30 percent, “only about half as much.” However, the percentage increase needs to be calculated as a percentage of the starting figure. It is not simply the subtraction of the start from the final. Thus, the percentage increase of low-income students is actually 8/22, or 36 percent, while the increase among upper income students is 15/51, or only 29 percent. The error is repeated in Jencks’s discussion of graduation rates.

Earlier in the article in question, in a discussion of Head Start, Jencks had written “… when they compared white siblings, those who attended Head Start were 22 percentage points more likely to finish high school and 19 percentage points more likely to enter college than those who did not attend Head Start.” So he certainly knows what percentage points mean. In his reply to Brigham and Robertson, he defended his college admissions comparison. He argues that the logic proposed by Brigham and Robertson leads to trouble as follows:

But now suppose we say federal policy sought to reduce the fraction of students not attending college. Among low-income students, nonattendance declined from 78 to 70 percent, so the decline was 8/78, or 10 percent. Among high-income students, nonattendance declined from 49 to 34 percent, so the decline was 15/49, or 31 percent. Using this approach, therefore, high-income students gained three times as much as low-income students. The fact that measuring percentage declines in non-attendance leads to opposite conclusions from measuring percentage increases in attendance should serve as a clear warning: this approach to comparing changes among the two groups is misguided.
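The competing calculations in this exchange are easy to reproduce. Here is a small Python sketch using only the attendance figures quoted above (22 to 30 percent and 51 to 66 percent); the helper names are illustrative.

```python
def point_change(start, end):
    """Change in percentage points: a simple difference of two percentages."""
    return end - start


def percent_change(start, end):
    """Relative change, expressed as a percent of the starting value."""
    return 100 * (end - start) / start


# College attendance rates (percent), 1972 -> 1992, as quoted in the letters.
attendance = {"low income": (22, 30), "high income": (51, 66)}

for group, (start, end) in attendance.items():
    # Non-attendance is the complement of attendance.
    non_start, non_end = 100 - start, 100 - end
    print(
        group,
        "| points:", point_change(start, end),
        "| % change in attendance:", round(percent_change(start, end)),
        "| % change in non-attendance:", round(percent_change(non_start, non_end)),
    )
```

The percentage-point change is the same size whether one counts attendance or non-attendance, while the relative change depends on which of the two is used as the base.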

Discussion

Which is the correct way to describe the comparison? Or do you see pros and cons for each?

Predicting GOP debate participants

Ethan Brown posted the following link on the Isolated Statisticians list:

- The first G.O.P. debate: Who’s in, who’s out and the role of chance

- by Kevin Quealy and Amanda Cox, "Upshot" blog, New York Times, 21 July 2015

Because of the large number of declared candidates (16 and growing at the time of the article), Fox News has limited participation in its August 6 debate to those who meet the following criterion:

Must place in the top 10 of an average of the five most recent national polls, as recognized by FOX News leading up to August 4th at 5 PM/ET. Such polling must be conducted by major, nationally recognized organizations that use standard methodological techniques.

But of course, polls are subject to sampling error. The NYT article uses simulation to illustrate how this could affect participation. Supposing the latest polling averages represent the "correct" values, they simulate 5 additional polls (as Ethan noted, this is a bootstrapping approach). The results demonstrate that this can affect who's in and who's out, as well as the location on the stage for those who do make the cut.
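The idea of the simulation can be sketched in a few lines of Python. This is not the NYT code: the candidate labels, the "true" shares of 2.7% and 2.3%, and the sample size of 480 respondents per poll are all assumptions chosen for illustration.

```python
import random
from collections import Counter


def simulate_poll(shares, n, rng):
    """Draw n respondents from the assumed true shares; return observed shares."""
    names = list(shares)
    draws = rng.choices(names, weights=[shares[k] for k in names], k=n)
    counts = Counter(draws)
    return {k: counts[k] / n for k in names}


def average_of_polls(shares, n_polls, n, rng):
    """Average the observed shares over n_polls independent simulated polls."""
    polls = [simulate_poll(shares, n, rng) for _ in range(n_polls)]
    return {k: sum(p[k] for p in polls) / n_polls for k in shares}


def prob_order_flips(shares, ahead, behind, n_polls=5, n=480, sims=2000, seed=0):
    """Estimate how often the trailing candidate comes out ahead in the average."""
    rng = random.Random(seed)
    flips = 0
    for _ in range(sims):
        avg = average_of_polls(shares, n_polls, n, rng)
        if avg[behind] > avg[ahead]:
            flips += 1
    return flips / sims


# Hypothetical shares: two candidates near the cutoff, everyone else lumped together.
assumed_shares = {"tenth": 0.027, "eleventh": 0.023, "others": 0.950}
p_flip = prob_order_flips(assumed_shares, "tenth", "eleventh")
print(f"Estimated chance the trailing candidate finishes ahead: {p_flip:.2f}")
```

Changing the assumed shares or sample sizes shows how sensitive the cutoff is to sampling error alone.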

The Washington Post maintains a State of the debate widget that updates the current top 10 based on the most recent polling results. For July 30 we see

Note that the top figure is not a bar chart; it represents the positions of the candidates on the stage.

Updates

Who gets into the Republican debate: Rounding could decide

by Kevin Quealy, "Upshot" blog, New York Times, 3 August 2015

The article points out that Fox has not specified if or how it will round the polling figures. For example, the 9th, 10th and 11th places as of July 30 (shown in the display above) were Chris Christie (3.3%), John Kasich (2.7%) and Rick Perry (2.3%). These are all within a one percentage point range, but Christie and Kasich are currently in the top 10, while Perry is not. More recent data presented in the present article have Christie and Kasich at 3.8% and Perry at 2.8%, a tie if figures are rounded to 3%.

Quealy wonders if Fox might then consider having 11 debaters?

The NYT reported on August 4 that John Kasich is in, Rick Perry Is out in first Republican debate. The article quotes a statement from Fox News:

Each poll has a different margin of error, and averaging requires a distinct test of statistical significance. Given the over 2,400 interviews contained within the five polls, from a purely statistical perspective it is at least 90 percent likely that the 10th place Kasich is ahead of the 11th place Perry.

Summing all this up, Justin Wolfers wrote on The Upshot blog that Fox failed statistics in explaining its G.O.P. debate decision.

Discussion

How do you think Fox arrived at their "90 percent likely" statement?

(For a dissenting opinion, see Fox failed statistics in explaining its G.O.P. debate decision by Justin Wolfers in "The Upshot" blog, New York Times, 6 August 2015).

Submitted by Bill Peterson

How to evaluate health studies

We all know that as general as statistics is, particular subject matter knowledge and familiarity are all important. Here is a useful link for understanding and critically evaluating health news:

- NPR’s On the Media with a skeptic’s guide to health news/diet fads

- by Gary Schwitzer, HealthNewsReviews.org, 31 July 2015

From which we find a consumer’s handbook for evaluating a health study:

The On the Media web site also contains a lengthy radio interview dealing with most of the above items.

See also:

- How to Know Whether to Believe a Health Study

- by Austin Frakt, "Upshot" blog, New York Times, 17 August 2015

One of the links there is to another handbook, Covering Medical Research, written for journalists by Gary Schwitzer.

Discussion

- Although Healthnewsreview.org has often mentioned this in the past, somehow the above consumer’s handbook failed to list “surrogate criterion” as a pitfall. Search Chance News and elsewhere to see why it belongs in a consumer’s handbook.

- Number 3 on the above list, risk reduction, depends on which denominator is used in the fraction. It is often unclear whether relative risk or absolute risk is being quoted. That is, the comparison might be to a/b or to (a/c) / (b/d) with all numbers positive and c > a and d > b. With this in mind, show that while Bill Gates gives more absolutely to charity than you do, nevertheless you give more relatively than he does. Relate this to risk reduction.

- Number 6 criticizes the notion of a “simple screening test.” Go to Screening (medicine) (Wikipedia) and its subsection, Limitations of screening to see why a simple screening test may be dangerous to your health.

- For a discussion of Number 9, see Disease mongering (Wikipedia) and The fight against disease mongering (PLoS Medicine).

- Relative risk vs. absolute risk and survival rates can be trickier than one might imagine at first glance. For an entertaining and informative discussion on how easy it is to be misled, see Dr. Gilbert Welch's video presentation entitled, "The Two Most Misleading Numbers in Medicine."
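To make the relative versus absolute distinction concrete, here is a minimal Python sketch. The trial counts are entirely hypothetical (2 events per 1,000 in the control group, 1 per 1,000 in the treated group) and are not taken from any study mentioned here.

```python
def risk_summary(events_control, n_control, events_treated, n_treated):
    """Compare two groups by absolute and relative risk reduction."""
    risk_control = events_control / n_control
    risk_treated = events_treated / n_treated
    absolute_reduction = risk_control - risk_treated
    relative_reduction = absolute_reduction / risk_control
    return {
        "risk_control": risk_control,
        "risk_treated": risk_treated,
        "absolute_reduction": absolute_reduction,
        "relative_reduction": relative_reduction,
    }


# Hypothetical counts, for illustration only.
summary = risk_summary(events_control=2, n_control=1000,
                       events_treated=1, n_treated=1000)

print(f"Relative risk reduction: {summary['relative_reduction']:.0%}")
print(f"Absolute risk reduction: {100 * summary['absolute_reduction']:.1f} percentage points")
```

With these made-up numbers a headline could truthfully claim that the risk was cut in half, even though the absolute change is a tenth of a percentage point.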

Submitted by Paul Alper

Lightning and the lottery

Man who survived lightning strike wins $1M jackpot with co-worker

CTV News (Canada), 20 July 2015

A Canadian man who survived a lightning strike at age 14 has just won a $1 million prize in the Atlantic Lotto, a 6/49 lottery game. Compounding the coincidence, his daughter is also a lightning survivor. This is, of course, just the kind of situation for which newspapers love to print astronomical odds. By combining the 1 in 13,983,816 chance of winning the Lotto with the reported (for Canada) 1 in a million risk of being struck by lightning, a local math professor came up with the following: "By assuming that these events happened independently … so probability of lotto … times another probability of lightning – since there are two people that got hit by lightning – we get approximately 1 in 2.6 trillion."

The story is amusing in light of the often-heard claim that one's chances of winning the lottery are the same as being struck by lightning, a comparison of dubious utility. A quick web search yields the following figures. According to the National Weather Service, there have been 24 US lightning deaths so far in 2015 (since 2010, the annual totals have been in the twenties). The accompanying map shows that one of the 2015 victims was from Texas; on the other hand the Winner's Gallery from the Texas Lottery displays 40 photos of happy winners. To be fair, this includes some minor games in addition to the large jackpots that people might typically associate with the phrase "winning the lottery." For more on this see the third discussion question below.
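The 1 in 13,983,816 figure is the number of ways to choose 6 numbers from 49, which is easy to confirm. The Python sketch below also includes a small helper for turning an annual risk into a risk over several years; it assumes independent years with a constant risk, and the 14-year horizon is only an illustrative choice.

```python
import math

# Number of possible tickets in a 6/49 game: choose 6 numbers out of 49.
lotto_combinations = math.comb(49, 6)
print(f"6/49 combinations: {lotto_combinations:,}")


def risk_over_years(annual_risk, years):
    """Chance of at least one event in `years` years.

    Assumes each year is independent and carries the same risk,
    which is a simplification.
    """
    return 1 - (1 - annual_risk) ** years


annual_lightning_risk = 1e-6  # the "1 in a million" figure quoted in the story
print(risk_over_years(annual_lightning_risk, 14))
```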

Discussion

- The 1 in a million figure given for lightning risk is presumably an annual figure. But the man in the story was struck as a teenager. How does this affect the calculation?

- From the CTV story, we learn that both the winner and his daughter were struck in "eerily similar" scenarios, as they were tying up boats on a lake. How does this affect your probability assessment? (It may be instructive to look at the table of locations and activities for the US data on the Weather Service web site.)

- In his blog post How many lottery winners are there in a year?, Dan Ma presents a more careful attempt to count lottery winners. Ma reports that data are not easy to come by. Looking at the 26-year period from the introduction of the California lottery until 2011, he counts 257 prizes of $1 million or more. This gives an average of about 10 per year. From this, he estimates that the total for the US is likely in the hundreds. Comment on the comparison with lightning strikes. (Note. The mortality rate from lightning strikes is estimated as 10-30%).

Submitted by Bill Peterson

Cautions on hypothesis testing

Scientific method: Statistical errors

by Regina Nuzzo, Nature News, 12 February 2014

The subtitle is "P values, the 'gold standard' of statistical validity, are not as reliable as many scientists assume." We read:

P values have always had critics. In their almost nine decades of existence, they have been likened to mosquitoes (annoying and impossible to swat away), the emperor's new clothes (fraught with obvious problems that everyone ignores) and the tool of a “sterile intellectual rake” who ravishes science but leaves it with no progeny[1]. One researcher suggested rechristening the methodology “statistical hypothesis inference testing”[2], presumably for the acronym it would yield.

Later on we have:

But while the rivals feuded — Neyman called some of Fisher's work mathematically “worse than useless”; Fisher called Neyman's approach “childish” and “horrifying [for] intellectual freedom in the west” — other researchers lost patience and began to write statistics manuals for working scientists. And because many of the authors were non-statisticians without a thorough understanding of either approach, they created a hybrid system that crammed Fisher's easy-to-calculate P value into Neyman and Pearson's reassuringly rigorous rule-based system. This is when a P value of 0.05 became enshrined as 'statistically significant', for example. “The P value was never meant to be used the way it's used today,” says [Steven] Goodman.

I have always bemoaned the conflation of exploratory and confirmatory:

Such practices have the effect of turning discoveries from exploratory studies — which should be treated with scepticism — into what look like sound confirmations but vanish on replication.

Submitted by Paul Alper

More on reproducibility

For much more on these ideas from Nature, see the Special Issue entitled Challenges in irreproducible research.

In a related vein, Jeff Witmer sent the following link to the Isolated Statisticians list: Estimating the reproducibility of psychological science, from Science, 28 August 2015. As Jeff noted, a number of interesting graphs and tables accompany the article. For teaching purposes, the site includes a link to a Powerpoint slide of a key summary plot of original study effect size versus replication effect size.

Our quotations section above includes a number of items related to reproducibility. From the Conclusions section of the Science abstract we have the following:

Reproducibility is not well understood because the incentives for individual scientists prioritize novelty over replication. Innovation is the engine of discovery and is vital for a productive, effective scientific enterprise. However, innovative ideas become old news fast. Journal reviewers and editors may dismiss a new test of a published idea as unoriginal. The claim that “we already know this” belies the uncertainty of scientific evidence. Innovation points out paths that are possible; replication points out paths that are likely; progress relies on both.

Help for the aging brain?

Omega-3 supplements and exercise have no protective effect on the aging brain, studies find

by Susan Perry, Minneapolis Post, 26 August 2015

Reporting on the results of two randomized trials published in JAMA, Perry writes, "Neither exercise nor omega-3 supplements has a protective effect on the brains of older adults, according to the results of two large randomized controlled studies published Tuesday [August 25, 2015] in JAMA." The details of the two studies may be found (behind a pay wall) at

- Effect of omega-3 fatty acids, lutein/zeaxanthin, or other nutrient supplementation on cognitive function

- Effect of a 24-month physical activity intervention vs health education on cognitive outcomes in sedentary older adults

For the omega-3 study, Perry points out that

Half of the [approximately 3500] participants were given supplements with omega-3, a fatty acid found most abundantly in fish, but also in flaxseed, walnuts, soy products and a few other plant sources. The other half took a placebo. All had their cognitive skills tested before the study started and then twice more, at two-year intervals. At the end of five years, no difference in cognitive abilities was found between the groups.

With regard to the exercise study, she reports that

After two years, the [1635] participants’ cognitive function was assessed through a series of tests. No significant differences in scores were found between the two groups [exercise or health education].

Perry asks,

Do these results mean that exercising and eating nutrient-rich healthful foods (not supplements) is a waste of time and effort for older adults?

She concludes,

Absolutely not, as the authors of a JAMA editorial that accompanies the studies stress. While these two studies "failed to demonstrate significant cognitive benefits, these results should not lead to nihilism involving lifestyle factors in older adults," they write.

Discussion

- For what it is worth, the nutrient study (omega-3 and other supplements) reported p-values of .66 and .63 regarding the difference between treatment and control. What sort of reaction follows from those numbers?

- For what it is worth, the exercise study (of several different cognitive tests) reported p-values of .97 and .84 regarding the difference between treatment and control. Again, what sort of reaction follows from those numbers?

- The studies mentioned in JAMA involved elderly people who presumably by virtue of age have already declined. The editorial asserts, “It is likely the biggest gains in reducing the overall burden of dementia will be achieved through policy and public health initiatives promoting primary prevention of cognitive decline rather than efforts directed toward individuals who have already developed significant cognitive deficits.” The editorial lauds life-long adherence to the so-called Mediterranean Diet as a way of staying mentally and physically healthy. For a more caustic look at the Mediterranean Diet see this discussion from Chance News 92.

Submitted by Paul Alper

Scoring standardized-test essays

Making the Grades, by Todd Farley, 2009

Todd Farley worked in the standardized-testing industry for 15 years, beginning while he was in grad school in Iowa in the mid-1990s. His book is subtitled “My Misadventures in the Standardized Testing Industry.”

He describes the main issue for scorers as not “whether or not you appreciate or comprehend an essay” but rather “whether or not you can formulate exactly the same opinion about it as do all the people sitting around you.” And he describes one scoring scale as “flopp[ing] about like a puppy on a frozen pond.”

Early on in his career, a table leader explained the “Mystery of High Reliability”:

“It’s simple …. If I go into the system and see you gave a 0 to some student response and another scorer gave it a 1, I change one of the scores.” …. “The computer counts it as a disagreement only as long as the scores don’t match. Once I change one of the scores, the reliability number goes up.”

“The reliability numbers aren’t legit?” I asked.

“The reliability numbers are what we make them,” he said. ….

“Man,” I said, “I … thought we were in the business of education.” ….

“Maybe,” he said. “But I’d say we are in the business of education.”

And later in his career, a trainer expressed concern about the distribution of scores:

“It seems we haven’t been scoring correctly.” …. “[O]ur numbers don’t match up with what the psychometricians predicted.” …. “They need us to find more 1’s.”

Farley’s reaction:

I assumed that if the psychometricians could tell, without actually looking at the student responses, there should be more 1’s, I figured there should be more 1’s.

Submitted by Margaret Cibes

Trump meets Benford

The truth about Donald Trump’s money: The scientific case for doubting his fantastical claims

by William Poundstone, Salon, 20 August 2015

Throughout his presidential campaign, Donald Trump has repeatedly claimed that his personal wealth is on the order of $10 billion. But not everyone believes him. For example, Poundstone cites an analysis by Forbes that puts the true figure closer to $4 billion.

In the present article, Poundstone presents a statistical reason to suspect Trump of exaggerating, based on Benford's Law. As background, he considers the case of Enron:

Enron was notorious for claiming revenues and earnings that narrowly exceeded round numbers. For instance, in 1997 Enron reported revenues of $20.373 billion. A number like $20-plus billion “feels” bigger than $19 billion, say. So Enron’s accountants nipped and tucked and found ways to claim they’d just beaten a psychologically significant threshold. This boosted Enron’s stock price, up until the company crashed and burned in 2001.

When numbers have been massaged in this way, the result is an overabundance of zeros as second digits, and the digit distribution will deviate from what Benford's Law predicts.

The application of Benford's Law to detect accounting fraud has been championed by Mark Nigrini. By now, many people are familiar with the Benford distribution for the leading digit of "naturally occurring numbers."
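For reference, the leading-digit law is usually stated as follows (the symbol d for the first digit is our notation, not Poundstone's):

<math> P(d) = \log_{10}(1 + 1/d), \quad d = 1, 2, \ldots, 9.</math>

Under this law a leading 1 appears about 30.1% of the time, while a leading 9 appears only about 4.6% of the time.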

Perhaps less well-known is that the logarithmic law leads to distributions for all digits. For example, the joint distribution of the first and second digits is

<math> p(x,y) = \log_{10}(1 + 1/(10x+y)), \quad x=1,2,\ldots,9; \,y = 0, \ldots, 9.</math>

Summing over x gives the marginal distribution for the second digit. If an empirical distribution of second digits deviates substantially from this marginal, there may be reason for suspicion. As Poundstone notes, it is not necessary to do the calculations by hand, since Nigrini has made freely available a spreadsheet that automates such analyses. In the case of Trump's financial reports, there is evidence of exaggeration, as shown by this plot from the article.
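For readers who would rather script the check than use a spreadsheet, here is a minimal Python sketch of the second-digit calculation described above. It is not Nigrini's spreadsheet or Poundstone's analysis. The function names are our own hypothetical choices, and the Pearson chi-square statistic is just one common way to measure deviation, assumed here for illustration.

```python
import math

def joint_prob(x, y):
    """Benford probability that the first digit is x and the second is y."""
    return math.log10(1 + 1 / (10 * x + y))

def second_digit_marginal():
    """Sum the joint law over x = 1..9 for each second digit y = 0..9."""
    return [sum(joint_prob(x, y) for x in range(1, 10)) for y in range(10)]

def second_digit(value):
    """Second significant digit of a nonzero number (hypothetical helper)."""
    # Scientific notation looks like 'd.ddd...e+XX', so index 2 holds the
    # second significant digit; 12 decimals keeps float noise out of it.
    return int(f"{abs(value):.12e}"[2])

def chi_square_stat(counts):
    """Pearson chi-square of observed second-digit counts against Benford."""
    total = sum(counts)
    expected = [total * p for p in second_digit_marginal()]
    return sum((o - e) ** 2 / e for o, e in zip(counts, expected))

if __name__ == "__main__":
    for y, p in enumerate(second_digit_marginal()):
        print(f"second digit {y}: {p:.4f}")
    # The Enron revenue figure quoted above has a zero as its second digit.
    print(second_digit(20.373))
```

The marginal probabilities fall gently from about 0.120 for a second digit of 0 to about 0.085 for a second digit of 9, so the second-digit law is much flatter than the first-digit law. To test a set of reported figures, one would tally their second digits into a list of ten counts and pass it to chi_square_stat. With 9 degrees of freedom, a value above roughly 16.92 would be significant at the 5% level.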

(See Anchors, ultimatums and randomization from Chance News 100 for additional discussion of Poundstone's work.)

Submitted by Bill Peterson