Chance News 87


Latest revision as of 15:00, 3 September 2012

July 19, 2012 to August 25, 2012

Quotations

From The Theory That Would Not Die, by Sharon McGrayne, 2011:

- “There has not been a single date in the history of the law of gravitation when a modern significance test would not have rejected all laws [about gravitation] and left us with no law.”

- “No one has ever claimed that statistics was the queen of the sciences…. The best alternative that has occurred to me is ‘bedfellow.’ Statistics – bedfellow of the sciences – may not be the banner under which we would choose to march in the next academic procession, but it is as close to the mark as I can come.”

- When asked how to differentiate one Bayesian from another, a biostatistician cracked, “Ye shall know them by their posteriors.”

Submitted by Margaret Cibes

"Among the mutual funds that were in the top half of performers in late 2009, according to Standard & Poor's, only 49% of them still remained in the upper half a year later; a year after that, only 24% were left. That is just about what you would get if you flipped a coin. Trying to find the winners is futile if victory is determined largely by luck."

The Wall Street Journal, July 21, 2012

Submitted by Margaret Cibes
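The coin-flip comparison can be checked with a quick simulation. This is a minimal sketch under an assumption that is ours, not the article's: each fund's yearly ranking is pure luck, independent from year to year. Under that model about half of last year's top half should stay there after one year and about a quarter after two, close to the 49% and 24% quoted above.

```python
import random


def persistence_under_luck(n_funds=10_000, years=2, seed=0):
    """Share of an initial top-half cohort still in the top half each year.

    Assumes (hypothetically) that performance is pure luck: every year each
    fund gets an independent random score, and the top half is re-drawn.
    """
    rng = random.Random(seed)

    def top_half():
        scores = [rng.random() for _ in range(n_funds)]
        cutoff = sorted(scores)[n_funds // 2]  # upper median
        return {i for i, s in enumerate(scores) if s >= cutoff}

    still_top = top_half()  # the funds in the top half at the start
    start = len(still_top)
    shares = []
    for _ in range(years):
        still_top &= top_half()  # must land in the top half again this year
        shares.append(len(still_top) / start)
    return shares


print(persistence_under_luck())  # roughly [0.5, 0.25]
```

Real fund returns are neither independent nor identically distributed, so the sketch only shows what "just about what you would get if you flipped a coin" means numerically.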

"How definite is the attribution [of global warming] to humans? The carbon dioxide curve gives a better match than anything else we’ve tried. Its magnitude is consistent with the calculated greenhouse effect — extra warming from trapped heat radiation. These facts don’t prove causality and they shouldn’t end skepticism, but they raise the bar: to be considered seriously, an alternative explanation must match the data at least as well as carbon dioxide does."

Submitted by Bill Peterson

“At [Alfred] Dreyfus’s military trial in 1899, his lawyer called on … Henri Poincaré, who had taught probability at the Sorbonne for more than ten years. Poincaré believed in frequency-based statistics. But when asked whether Bertillon’s document was written by Dreyfus or someone else, he invoked Bayes’ rule. Poincaré considered it the only sensible way for a court of law to update a prior hypothesis with new evidence, and he regarded the forgery as a typical problem in Bayesian hypothesis testing. ….

“The judges issued a compromise verdict, again finding Dreyfus guilty but reducing his sentence to five years. …. [T]he president of the Republic issued a pardon two weeks later. …. Many American lawyers, unaware that probability helped to free Dreyfus, have considered his trial an example of mathematics run amok and a reason to limit the use of probability in criminal cases."

Submitted by Margaret Cibes

Note. The School of Mathematics at the University of Edinburgh has a Poincaré and Dreyfus web page, with links to numerous reports about Poincaré's role in this case.

"I forgot to factor in duplicity, mendacity, perfidy and incestuous amplification."

cited at “Pax Vobis”[1], October 23, 2008

Submitted by Margaret Cibes at the suggestion of Jim Greenwood

"True greatness is when your name is like ampere, watt, and fourier—when it's spelled with a lower case letter."

"When you are famous it is hard to work on small problems. This is what did [Claude Elwood] Shannon in. After information theory, what do you do for an encore? The great scientists often make this error. They fail to continue to plant the little acorns from which the mighty oak trees grow. They try to get the big thing right off. And that isn't the way things go. So that is another reason why you find that when you get early recognition it seems to sterilize you."

Submitted by Paul Alper, as retrieved from the Today in Science History website. Paul also notes that Hamming's famous dictum, "The purpose of computation is insight, not numbers," was paraphrased by Bill Jefferys:

- "To turn Richard W. Hamming's phrase, the purpose of statistics is insight, not numbers."

Forsooth

"The money involved in big-time college sports is staggering, and it grows almost exponentially every couple of years."

Submitted by Bill Peterson

The chicken or the egg?

“Teams that touch more at the beginning of the season win more over the course of the entire season. The two touchiest teams in the study, the Boston Celtics and Los Angeles Lakers, finished the season with two of the NBA's top three records, and the Celtics' Kevin Garnett was the touchiest player in the league by at least a 15% margin, said … one of the study's authors.”

The Wall Street Journal, August 10, 2012

Submitted by Margaret Cibes

MHC dating and mating

Immunology is a branch of biomedical science that covers the study of all aspects of the immune system in all organisms.[2] It deals with the physiological functioning of the immune system in states of both health and disease; malfunctions of the immune system in immunological disorders (autoimmune diseases, hypersensitivities, immune deficiency, transplant rejection); and the physical, chemical and physiological characteristics of the components of the immune system in vitro, in situ, and in vivo. Immunology has applications in several scientific disciplines and is accordingly divided into subfields.

One of those divisions is the study of the MHC, the major histocompatibility complex. From the Chance News 39 item on MHC, which quoted Wikipedia:

The major histocompatibility complex (MHC) is a large genomic region or gene family found in most vertebrates. It is the most gene-dense region of the mammalian genome and plays an important role in the immune system, autoimmunity, and reproductive success.

It has been suggested that MHC plays a role in the selection of potential mates, via olfaction. MHC genes make molecules that enable the immune system to recognise invaders; generally, the more diverse the MHC genes of the parents, the stronger the immune system of the offspring. It would obviously be beneficial, therefore, to have evolved systems of recognizing individuals with different MHC genes and preferentially selecting them to breed with.

It has been further proposed that, despite humans' poor sense of smell compared with other organisms, the strength and pleasantness of sweat can influence mate selection. Proving all of this statistically (that is, showing that by smell we somehow sense, and then select, mates whose MHC differs from our own) via a convincing clinical trial is a challenge. Nevertheless, several things are in its favor. For one, unlike a medical trial, it is quite inexpensive to have subjects sniff a series of odoriferous T-shirts worn by contributors. For another, the lay media are sure to publicize the study with lame and juvenile headlines. The previously mentioned Chance News post describes several clinical trials and the lay media's reaction.

The latest manifestation of the link between MHC and mate selection is illustrated by this newspaper article, which appeared throughout the country. Pheromone parties would appear to be the next big thing, replacing online dating. The only thing needed to make a match is a smelly T-shirt in a freezer bag. The article quotes Prof. Martha McClintock, founder of the Institute for Mind and Biology at the University of Chicago: “Humans can pick up this incredibly small chemical difference with their noses. It is like an initial screen.”

Discussion

1. MHC in humans is often called HLA, human leukocyte antigen.

2. An expert in the field informed Chance News that the cost of MHC serological typing is about $100 per person but is “not as accurate as molecular methods.” Previous T-shirt studies mentioned in Chance News involved about 100 people, so typing all of their subjects would have cost about $10,000.

3. The expert referred to in #2 says he serologically typed his former girlfriends and, as is often alleged, each was indeed MHC-dissimilar to him. No T-shirt was involved. He is “convinced it [olfaction] is a real biological phenomenon” because “it makes sense” and because of “the large amount of non-human data that supports it.” However, he adds:

I would not recommend HLA testing for couples, because there are too many other sociological and physical variables that play into human ideas of attractiveness and mate preference. And from a strictly biological advantage standpoint, it doesn’t really matter anymore (see below).

The alleles that provide a selective advantage for pathogen protection are presumably the alleles that are most common, especially when maintained as a haplotype, which is the A-B-C-DR complex on one chromosome. For instance, A1, B8, DR17 haplotype is found in approx. 5% of Caucasians. But now that medical science allows for survival from infections via antimicrobics (antibiotics), there really is no such thing as immunologic evolution, because an infection that would have killed someone in childhood without the appropriate HLA 100 years ago can be saved, and go on to breed and pass the genes to their offspring. Science has, in essence, negated the Darwinian evolution process, particularly when it comes to the immune system.

4. Chance News also contacted research immunologists for their opinion on the T-shirt phenomenon. One had never heard of it, and another was very skeptical.

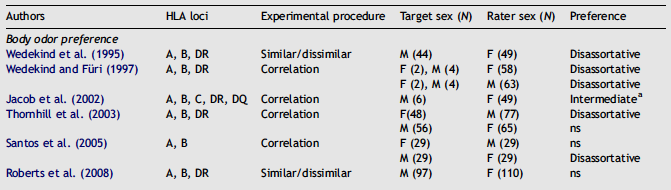

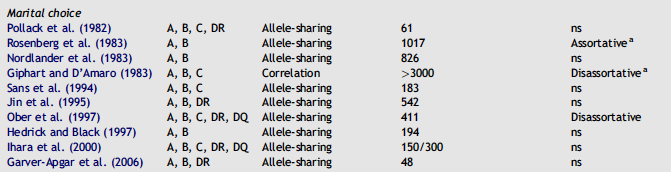

5. The following table of T-shirt smelling is taken from a review article by Roberts where “ns” stands for not [statistically] significant.

The following table regarding marital choice is from the same article. How does this compare and contrast with the T-shirt table above?

6. One study of marital (mating) choice not mentioned in the table above is “Is Mate Choice in Humans MHC-Dependent?” The authors write:

In this study, we tested the existence of MHC-disassortative mating in humans by directly measuring the genetic similarity at the MHC level between spouses. These data were extracted from the HapMap II dataset, which includes 30 European American couples from Utah and 30 African couples from the Yoruba population in Nigeria.

For the 30 African couples, the authors conclude:

African spouses show no significant pattern of similarity/dissimilarity across the MHC region (relatedness coefficient, R = 0.015, p = 0.23), whereas across the genome, they are more similar than random pairs of individuals (genome-wide R = 0.00185, p < 10⁻³).

For the 30 Utah couples, the authors conclude:

On the other hand, the sampled European American couples are significantly more MHC-dissimilar than random pairs of individuals (R = −0.043, p = 0.015), and this pattern of dissimilarity is extreme when compared to the rest of the genome, both globally (genome-wide R = −0.00016, p = 0.739) and when broken into windows having the same length and recombination rate as the MHC (only nine genomic regions exhibit a higher level of genetic dissimilarity between spouses than does the MHC). This study thus supports the hypothesis that the MHC influences mate choice in some human populations.

Comment on the last sentence, especially with regard to the size of the sample(s) and the strength of the conclusion.
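The phrase "more MHC-dissimilar than random pairs of individuals" describes a comparison that can be illustrated with a generic permutation test: shuffle the wives among the husbands to create random pairs, and count how often random pairings are at least as dissimilar as the real couples. This is a minimal sketch, not the authors' relatedness-coefficient method; the 0/1 genotype encoding, the `shared_fraction` similarity measure and the toy data are all hypothetical.

```python
import random
from statistics import mean


def shared_fraction(g1, g2):
    """Hypothetical similarity: fraction of loci at which two genotypes match."""
    return sum(a == b for a, b in zip(g1, g2)) / len(g1)


def couple_permutation_test(husbands, wives, similarity=shared_fraction,
                            n_perm=10_000, seed=0):
    """One-sided test: are real couples LESS similar than random pairings?

    husbands[i] is married to wives[i]. Returns (observed mean similarity,
    permutation p-value).
    """
    rng = random.Random(seed)
    observed = mean(similarity(h, w) for h, w in zip(husbands, wives))
    shuffled = list(wives)
    at_least_as_dissimilar = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)  # break up the real couples
        permuted = mean(similarity(h, w) for h, w in zip(husbands, shuffled))
        if permuted <= observed:
            at_least_as_dissimilar += 1
    # add-one correction so the p-value is never exactly zero
    return observed, (at_least_as_dissimilar + 1) / (n_perm + 1)


# Toy data: 30 couples, 10 biallelic loci, each wife the exact opposite of her husband
rng = random.Random(42)
husbands = [tuple(rng.randint(0, 1) for _ in range(10)) for _ in range(30)]
wives = [tuple(1 - a for a in h) for h in husbands]
obs, p = couple_permutation_test(husbands, wives, n_perm=2_000)
print(obs, p)
```

The toy couples are deliberately extreme; with real data and only 30 couples per population, the permutation distribution is wide, which bears directly on the question above.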

7. Even if you are neither of Yoruba ancestry nor a resident of Utah, ask your parents whether they are willing to have serological MHC tests to determine whether they contributed to your genetic wellbeing.

8. Chance News was unable to find any MHC studies, T-shirt or otherwise, regarding the reproductive strategy or mate selection for homosexuals.

Submitted by Paul Alper

Grading on the curve

“Microsoft’s Lost Decade,” Vanity Fair, August 2012

(Preview here; access to full article requires subscription)

This article is an extensive critique of Bill Gates’ successor at Microsoft. One alleged management problem was the staff performance evaluation system.

At the center of the cultural problems was a management system called “stack ranking.” …. The system – also referred to as “the performance model,” “the bell curve,” or just “the employee review” – has, with certain variations over the years, worked like this: every unit was forced to declare a certain percentage of employees as top performers, then good performers, then average, then below average, then poor.

“If you were on a team of 10 people, you walked in the first day knowing that, no matter how good everyone was, two people were going to get a great review, seven were going to get mediocre reviews, and one was going to get a terrible review,” said a former software developer. ….

Supposing Microsoft had managed to hire technology’s top players into a single unit before they made their names elsewhere – Steve Jobs of Apple, Mark Zuckerberg of Facebook, Larry Page of Google, Larry Ellison of Oracle, and Jeff Bezos of Amazon – regardless of performance, under one of the iterations of stack ranking, two of them would have to be rated as below average, with one deemed disastrous.
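The forced-distribution rule described above can be sketched in a few lines. This is a hypothetical illustration, not Microsoft's actual system: review labels are assigned purely by rank according to fixed quotas (here the 2/7/1 split the former developer describes), so a team in which everyone scores well still produces one "terrible" review.

```python
from collections import Counter


def stack_rank(scores, quotas):
    """Assign review labels by rank alone, ignoring absolute performance.

    scores: dict of employee -> performance score (higher is better).
    quotas: list of (label, count) pairs, best label first; the counts must
            add up to the team size.
    """
    if sum(count for _, count in quotas) != len(scores):
        raise ValueError("quotas must cover every employee exactly once")
    ranked = sorted(scores, key=scores.get, reverse=True)
    labels, start = {}, 0
    for label, count in quotas:
        for name in ranked[start:start + count]:
            labels[name] = label
        start += count
    return labels


# A hypothetical team of 10 in which everyone scores between 95 and 99.5
team = {f"dev{i}": 95 + i / 2 for i in range(10)}
reviews = stack_rank(team, [("great", 2), ("mediocre", 7), ("terrible", 1)])
print(Counter(reviews.values()))
```

Because only the ordering of scores is used, raising every score by the same amount leaves every label unchanged.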

Discussion

The stack ranking system is said to have sometimes been referred to as a “bell curve.” If a system were supposed to follow a bell, or normal, curve, would you agree with any of the outcomes described for a team of 10 people? For the team of five “top players”? Why or why not?

Submitted by Margaret Cibes

Big, brief bang from new stadiums

“Do new stadiums really bring the crowds flocking”

by Mike Aylott, Significance web exclusive, April 11, 2012

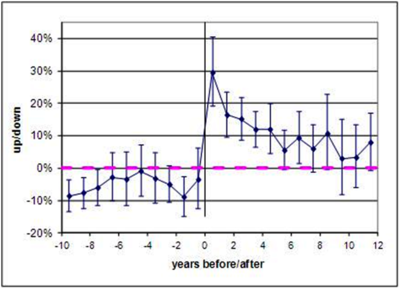

The author studied how long the boost from a new stadium lasts by considering all 30 British soccer clubs that relocated up to 2011, along with their average home league attendance for the ten seasons before and after relocation.

[T]he most important three factors have been accounted for in this analysis: a) the division the club is playing in that season, b) what position in this division the club finishes, and c) the overall average attendance in this division for this season.

See the accompanying graph for the percent change in average attendance after adjusting for the three factors. The author notes that the pink dotted line refers to non-relocating clubs and that the error bars denote ±2 standard errors.
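The ±2 standard-error bars follow a standard formula: for n values with sample standard deviation s, the standard error of the mean is s/√n, and the bar runs from the mean minus 2s/√n to the mean plus 2s/√n. The sketch below computes such a bar for a list of percent changes. The input numbers are made up, and the function does not reproduce the author's adjustment for division, league position and division-wide attendance.

```python
from math import sqrt
from statistics import mean, stdev


def error_bar(values, k=2.0):
    """Return (mean, lower, upper) for a bar of +/- k standard errors."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two values to estimate a standard error")
    m = mean(values)
    se = stdev(values) / sqrt(n)  # sample SD divided by sqrt(n)
    return m, m - k * se, m + k * se


# Hypothetical percent changes in attendance for four clubs
print(error_bar([10.0, 20.0, 30.0, 40.0]))
```

With 30 clubs the bars for each season after relocation are fairly wide, which is worth keeping in mind when reading how quickly the boost fades.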

Submitted by Margaret Cibes

Communicating chances

“Safer gambling”, by Tristan Barnett, Significance web exclusive, April 4, 2012

In [Gaming Law Review and Economics] 2010 I suggested, as an approach towards responsible gambling and to increase consumer protection, to amend poker machine regulations such that the probabilities associated with each payout are displayed on each machine along with information that would advise players of the chances of ending up with a certain amount of profit after playing for a certain amount of time.
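The quantity Barnett proposes displaying, the chance of ending up ahead after a given amount of play, can be computed exactly from a machine's payout table by repeatedly convolving the outcome of a single spin. The payout table below is entirely hypothetical (a machine returning 90% of stakes on average) and is not taken from Barnett's paper; real machines have far more outcomes.

```python
from collections import defaultdict


def profit_distribution(payouts, n_spins, stake=1):
    """Exact distribution of net profit after n_spins independent spins.

    payouts: dict of amount returned on one spin -> probability.
    Each spin costs `stake`, so one spin's profit is payout - stake.
    """
    if abs(sum(payouts.values()) - 1) > 1e-9:
        raise ValueError("payout probabilities must sum to 1")
    dist = {0: 1.0}  # before playing, profit is 0 with certainty
    for _ in range(n_spins):
        nxt = defaultdict(float)
        for profit, p in dist.items():
            for payout, q in payouts.items():
                nxt[profit + payout - stake] += p * q
        dist = dict(nxt)
    return dist


def prob_ahead(payouts, n_spins, stake=1):
    """Probability of finishing with a strictly positive profit."""
    dist = profit_distribution(payouts, n_spins, stake)
    return sum(p for profit, p in dist.items() if profit > 0)


# Hypothetical machine: expected return 0.10*2 + 0.04*5 + 0.01*50 = 0.90 per unit staked
machine = {0: 0.85, 2: 0.10, 5: 0.04, 50: 0.01}
for spins in (1, 10, 100):
    print(spins, round(prob_ahead(machine, spins), 3))
```

A table of such probabilities for increasing numbers of spins is one way the "chances of ending up with a certain amount of profit" could be displayed on a machine.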

Questions

What do you think of this idea? Do you think it would encourage “responsible gambling”?

Submitted by Margaret Cibes

Blue dot technique

In the last edition of Chance News, we reported on the fraudulent data case involving Dutch researcher Dirk Smeesters. More on the case can be found in this ScienceInsider story (26 June 2012), which references the Erasmus University report for the following:

Smeesters conceded to employing the so-called "blue-dot technique," in which subjects who have apparently not read study instructions carefully are identified and excluded from analysis if it helps bolster the outcome. According to the report, Smeesters said this type of massaging was nothing out of the ordinary. He "repeatedly indicates that the culture in his field and his department is such that he does not feel personally responsible, and is convinced that in the area of marketing and (to a lesser extent) social psychology, many consciously leave out data to reach significance without saying so."

But what in the world is the "blue-dot technique"? An answer can be found in this Research Digest post (on a blog from the British Psychological Society), where a comment by Richard Gill of Leiden University (the same Prof. Gill as in Chance News 86) explains:

The blue dot test is that there's a blue dot somewhere in the form which your respondents have to fill in, and one of the last questions is "and did you see the blue dot"? Those who didn't see it apparently didn't read the instructions carefully. Seems to me fine to have such a question and routinely, in advance, remove all respondents who gave the wrong answer to this question. The question is whether Smeesters only used the blue dot test as an excuse to remove some of the respondents, and only used it after an initial analysis gave results which were decent but in need of further "sexing up" as he called it.
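Gill's distinction, between a blue-dot exclusion rule fixed in advance and one applied only after a first analysis disappoints, can be illustrated by simulation. The sketch below is a simplified, hypothetical model (two groups with no true difference, a 20% chance that any respondent misses the blue dot, and a normal approximation in place of a t-test); it is not a reconstruction of Smeesters' data or analyses. Because the post hoc strategy rejects whenever the fixed rule does, and sometimes when it does not, its false-positive rate can only be higher.

```python
import math
import random
from statistics import mean, variance


def approx_p_value(a, b):
    """Two-sided p-value for a difference in means (normal approximation)."""
    se = math.sqrt(variance(a) / len(a) + variance(b) / len(b))
    z = (mean(a) - mean(b)) / se
    return math.erfc(abs(z) / math.sqrt(2))


def false_positive_rates(n_sims=2_000, n_per_group=20, p_miss=0.2,
                         alpha=0.05, seed=1):
    """Compare a pre-specified blue-dot exclusion with a post hoc one.

    There is no true effect, so every 'significant' result is a false positive.
    """
    rng = random.Random(seed)
    fixed_hits = posthoc_hits = valid = 0
    for _ in range(n_sims):
        # each respondent: (outcome, saw_blue_dot); outcome unrelated to group
        g1 = [(rng.gauss(0, 1), rng.random() > p_miss) for _ in range(n_per_group)]
        g2 = [(rng.gauss(0, 1), rng.random() > p_miss) for _ in range(n_per_group)]
        kept1 = [y for y, saw in g1 if saw]
        kept2 = [y for y, saw in g2 if saw]
        if len(kept1) < 2 or len(kept2) < 2:
            continue  # too few attentive respondents to test
        valid += 1
        # Fixed rule: always drop respondents who missed the dot, test once.
        fixed = approx_p_value(kept1, kept2) < alpha
        # Post hoc: test everyone; only if that fails, drop the inattentive.
        everyone = approx_p_value([y for y, _ in g1], [y for y, _ in g2]) < alpha
        fixed_hits += fixed
        posthoc_hits += everyone or fixed
    return fixed_hits / valid, posthoc_hits / valid


print(false_positive_rates())
```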

Submitted by Paul Alper

Simonsohn’s fraud detection technique

Uri Simonsohn was one of the investigators responsible for exposing Smeesters' fraud (see above). A draft of Simonsohn's latest paper, Just post it: the lesson from two cases of fabricated data detected by statistics alone, is now available. In it, he argues that researchers should be required to post their raw data when they publish. In the Discussion section, we read (pp. 18-19):

Why require it?

While working on this project I solicited data from a number of authors, sometimes due to suspicion, sometimes in the process of creating some of the benchmarks, sometimes due to pure curiosity. Consistent with previous efforts of obtaining raw data (Wicherts, 2011; Wicherts et al., 2006), the modal response was that they were no longer available. Hard disk failures, stolen laptops, ruined files, server meltdowns, corrupted spreadsheets, software incompatibility, sloppy record keeping, etc., all happen sufficiently often, self-reports suggest, that a forced backup by journals seems advisable.

Is raw data really needed?

The two cases were detected by analyzing means and standard deviations, why do we need raw data then? There is a third case, actually, where fraud took place with almost certainty, but due to lack of access to raw data, the suspicions cannot be properly addressed. If raw data were available, additional analyses could vindicate the author, or confirm her/his findings should be ignored. Because journals do not require raw data these analyses will never be conducted. Furthermore, I’ve come across a couple of papers where data suggest fabrication but other papers by the same authors do not show the pattern. One possibility is that these are mere coincidences. Another is that other people, e.g., their research assistants, tampered with the data.

Our research is often conducted by assistants whose honesty is seldom evaluated, and who have minimal reputation concerns. How many of them would we entrust with a bag filled with an uncounted number of $100 bills for a study? Trustworthy evidence is worth much more than $100. The availability of raw data would allow us to detect and prevent also these cases.

More discussion, from David Nussbaum's "Random Assignment" blog, can be found in these two posts: Simonsohn’s fraud detection technique revealed (20 July 2012) and Just post it (update) (22 July 2012).
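The draft paper describes detecting fabrication from reported means and standard deviations alone. One check of this general kind, sketched here under stated assumptions, asks whether the standard deviations reported for different conditions are more alike than sampling variation would allow. The function simulates studies in which every condition has normal data and one common population SD (the pooled value of the reports); it is a hypothetical illustration, not Simonsohn's code or his exact procedure, and the example reports are invented.

```python
import random
from statistics import pstdev, stdev


def sd_similarity_p(reported_sds, n_per_cell, n_sims=10_000, seed=0):
    """Share of simulated studies whose SDs are at least as alike as reported.

    Assumes every condition has n_per_cell normal observations and one common
    population SD, estimated by pooling the reported SDs (equal cell sizes).
    A very small result means the reported SDs are suspiciously similar.
    """
    rng = random.Random(seed)
    pooled = (sum(s * s for s in reported_sds) / len(reported_sds)) ** 0.5
    observed_spread = pstdev(reported_sds)
    as_alike = 0
    for _ in range(n_sims):
        simulated = [stdev([rng.gauss(0, pooled) for _ in range(n_per_cell)])
                     for _ in reported_sds]
        if pstdev(simulated) <= observed_spread:
            as_alike += 1
    return as_alike / n_sims


# Hypothetical reports: five conditions, 15 participants each
print(sd_similarity_p([2.01, 2.02, 2.00, 2.01, 2.02], n_per_cell=15, n_sims=2_000))
print(sd_similarity_p([1.6, 2.4, 1.9, 2.7, 2.1], n_per_cell=15, n_sims=2_000))
```

A single small value is only a flag; as Simonsohn notes, it is the raw data that would let such suspicions be confirmed or dispelled.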

Submitted by Paul Alper

More on the American Community Survey

As previously reported in Chance News 85, the US House of Representatives voted to cut the American Community Survey and the economic census from its appropriations bill. Margaret Cibes wrote to provide the following additional links:

- Robert Groves, director of the U.S. Census Bureau, wrote an op-ed, “Census surveys: Information that we need,” in the Washington Post (July 19), reacting to the vote to eliminate the ACS.

- The Post had its own editorial (May 15), advocating for these two programs.

Misleading mammography statistics

Komen charged with using deceptive statistics to 'sell' mammograms

by Susan Perry, MinnPost.com, 3 August, 2012

The article quotes a recent BMJ commentary, “Not So Stories: How a charity oversells mammography”:

“If there were an Oscar for misleading statistics, [Susan G. Komen for the Cure] using survival statistics to judge the benefit of screening would win a lifetime achievement award hands down,” write the commentary’s authors, Dr. Steven Woloshin and Dr. Lisa Schwartz of the Center for Medicine and the Media at the Dartmouth Institute for Health Policy and Clinical Practice.

Komen ads state that the 5-year survival rate is 98% when breast cancer is detected early through screening, compared with 23% when it is not. But this ignores the phenomenon of "lead time bias." A little further down in the MinnPost article is a vivid description from BMJ of what this means:

Barnett Kramer, director of the National Cancer Institutes’ Division of Cancer Prevention, explained lead time bias by using an analogy to The Rocky and Bullwinkle Show, an old television cartoon popular in the US in the 1960s. In a recurring segment, Snidely Whiplash, a spoof on villains of the silent movie era, ties Nell Fenwick to the railroad tracks to extort money from her family. She will die when the train arrives. Kramer says, “Lead time bias is like giving Nell binoculars. She will see the train — be ‘diagnosed’ — when it is much further away. She’ll live longer from diagnosis, but the train still hits her at exactly the same moment.”
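Kramer's analogy can be turned into arithmetic. In the hypothetical cohort below (all numbers invented for illustration), screening moves every diagnosis 4 years earlier but changes no one's age at death. Five-year survival measured from diagnosis rises from 40% to 100%, even though not a single death is postponed.

```python
def five_year_survival(diagnosis_ages, death_ages):
    """Share of patients who live more than 5 years after diagnosis."""
    survivors = sum(1 for dx, death in zip(diagnosis_ages, death_ages)
                    if death - dx > 5)
    return survivors / len(death_ages)


death_ages = [62, 66, 70, 74, 78]          # hypothetical; identical in both scenarios
years_symptoms_to_death = [2, 3, 4, 6, 8]  # hypothetical
lead_time = 4                              # hypothetical years of earlier detection

dx_without = [d - y for d, y in zip(death_ages, years_symptoms_to_death)]
dx_with = [dx - lead_time for dx in dx_without]  # the "binoculars"

print(five_year_survival(dx_without, death_ages))  # 0.4
print(five_year_survival(dx_with, death_ages))     # 1.0
```

Since `death_ages` is the same list in both scenarios, mortality is unchanged; only the clock's starting point moves.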

Even further down is a reference to a study indexed in PubMed, “Do physicians understand cancer screening statistics?”, in which "Woloshin and Schwartz made the troubling finding that most primary-care physicians in the U.S. mistakenly believe improved survival rates are evidence that screening saves lives."

Additional coverage of this story can be found at:

- Screening news: Komen mammography ads criticized, HealthNewsReview.org

- Professors: Komen overstating benefits of mammograms, CNN.com

- BMJ OpEd says Komen ads false, MedPageToday

Submitted by Paul Alper

Investigating fitness products

The unproven claims of fitness products

by Nicholas Bakalar, Well blog, New York Times, 23 July 2012

We are accustomed to seeing frequent advertisements for sports drinks, nutritional supplements, athletic shoes, etc. But how many of the claims made in these ads stand up to scientific scrutiny? The NYT article reports on a BMJ study entitled The evidence underpinning sports performance products: a systematic assessment. The news is not encouraging.

Investigators culled advertisements from leading print magazines and links on the associated websites, with the aim of reviewing any studies cited in the ads to support product claims. As described in the abstract of the BMJ paper:

The authors viewed 1035 web pages and identified 431 performance-enhancing claims for 104 different products. The authors found 146 references that underpinned these claims. More than half (52.8%) of the websites that made performance claims did not provide any references, and the authors were unable to perform critical appraisal for approximately half (72/146) of the identified references. None of the references referred to systematic reviews (level 1 evidence). Of the critically appraised studies, 84% were judged to be at high risk of bias. Randomisation was used in just over half of the studies (58.1%), allocation concealment was only clear in five (6.8%) studies; and blinding of the investigators, outcome assessors or participants was only clearly reported as used in 20 (27.0%) studies. Only three of the 74 (2.7%) studies were judged to be of high quality and at low risk of bias.
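The percentages in the quoted abstract can be checked against the denominator of 74 critically appraised studies. In this quick check, 5/74 and 20/74 round to the stated 6.8% and 27.0%, but 3/74 is about 4.1%; the stated 2.7% would correspond to 2 of 74. The check assumes, as the wording suggests, that all three figures share the denominator 74.

```python
def check_percentages(claims):
    """Recompute stated percentages to one decimal place and flag mismatches."""
    results = []
    for label, count, total, stated in claims:
        actual = round(100 * count / total, 1)
        results.append((label, actual, actual == stated))
    return results


claims = [
    ("allocation concealment clear", 5, 74, 6.8),
    ("blinding clearly reported", 20, 74, 27.0),
    ("high quality, low risk of bias", 3, 74, 2.7),
]
for label, actual, ok in check_percentages(claims):
    print(f"{label}: {actual}% {'matches' if ok else 'does not match'}")
```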

The distinction between "allocation concealment" and "blinding" may not be familiar (it was new vocabulary for this reviewer). A fuller discussion can be found here, where we read:

Allocation concealment should not be confused with blinding. Allocation concealment concentrates on preventing selection and confounding biases, safeguards the assignment sequence [of treatments to participants] before and until allocation, and can always be successfully implemented. Blinding concentrates on preventing study personnel and participants from determining the group to which participants have been assigned (which leads to ascertainment bias), safeguards the sequence after allocation, and cannot always be implemented.
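Allocation concealment can be made concrete with a sketch of a central, "sealed envelope" style allocator: the random sequence is generated before recruitment begins, and an assignment is released only after a participant has been enrolled, so whoever recruits cannot steer particular people into a group. This is a hypothetical illustration; in Python the underscore-prefixed attribute is hidden only by convention, whereas a real trial would keep the list with an independent party. It does nothing about blinding: once assigned, the arm is known to whoever receives it.

```python
import random


class ConcealedAllocator:
    """Pre-generated random allocation, revealed one participant at a time."""

    def __init__(self, n_participants, arms=("treatment", "control"), seed=None):
        rng = random.Random(seed)
        # Fixed before anyone is recruited; recruiters never see this list.
        self._sequence = [rng.choice(arms) for _ in range(n_participants)]
        self._enrolled = []  # (participant_id, arm) in enrollment order

    def enroll(self, participant_id):
        """Record the enrollment, then reveal that participant's assignment."""
        if len(self._enrolled) >= len(self._sequence):
            raise RuntimeError("allocation sequence exhausted")
        if any(pid == participant_id for pid, _ in self._enrolled):
            raise ValueError(f"{participant_id} is already enrolled")
        arm = self._sequence[len(self._enrolled)]
        self._enrolled.append((participant_id, arm))
        return arm


allocator = ConcealedAllocator(4, seed=7)
for person in ["P1", "P2", "P3", "P4"]:
    print(person, allocator.enroll(person))
```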

The BMJ site includes a 9-minute video in which the study authors discuss their work. Among the topics covered is the difficulty of tracking down the actual research references. The advertisements prominently feature testimonials from athletes, whereas the actual studies were much harder to locate. Also, the measurements in the studies were typically not of actual athletic performance, but rather of biological "proxies" such as blood or muscle tissue. Finally, there was little recognition of the limitations of study designs with regard to (lack of) blinding or randomization, and, in particular, small sample sizes. Instead, the references tended to present their particular findings as definitive. Indeed, as noted in the abstract above, none of the references cited systematic reviews. The authors conjecture that this may in part reflect an inability to understand the research literature.

Submitted by Bill Peterson

Statistics2013

The year 2013 has been designated as the International Year of Statistics. As described on the website, this is

[A] worldwide celebration and recognition of the contributions of statistical science. Through the combined energies of organizations worldwide, Statistics2013 will promote the importance of Statistics to the broader scientific community, business and government data users, the media, policy makers, employers, students, and the general public.

Thanks to Jeff Witmer for sending this link to the Isolated Statisticians list.