Chance News 85

May 1, 2012 to June 11, 2012

==Quotations==

<div align=right>-- Robert Gentleman, quoted at [http://onertipaday.blogspot.com/ One R Tip A Day]</div>

Submitted by Bill Peterson

----

“Statistics [from observational studies] cannot turn sow's ears into silk purses, no matter how large the number of sow's ears available for study. Nor can adding up large numbers of scientifically impoverished studies yield scientific information. The appeal of statistics is that it is (a) very cheap compared to scientific testing, and (b) it can produce results to order because the data itself imposes relatively few constraints on the statistical conclusion drawn from it. Both of these render such methods irresistible to politicians and advocacy groups.”

<div align=right>Stephen Krumpe blogging [http://online.wsj.com/article/SB10001424052702303916904577377841427001840.html?KEYWORDS=gautam+naik&_nocache=1336253792402&user=welcome&mg=id-wsj#articleTabs%3Dcomments] in response to<br>

[http://online.wsj.com/article/SB10001424052702303916904577377841427001840.html?KEYWORDS=gautam+naik&_nocache=1336253792402&user=welcome&mg=id-wsj “Analytical Trend Troubles Scientists”], <i>The Wall Street Journal</i>, May 4, 2012</div>

Submitted by Margaret Cibes
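Krumpe's point that sheer volume cannot rescue a flawed design can be illustrated with a small simulation. This is our own sketch, not anything from the WSJ article. It assumes that every observational measurement carries the same systematic bias (0.3 here, a made-up value) on top of random noise. Averaging more measurements shrinks the noise but leaves the bias untouched.

```python
import random
import statistics

def biased_average(n: int, true_effect: float, bias: float, rng: random.Random) -> float:
    """Mean of n measurements, each equal to the true effect plus a shared
    systematic bias (e.g. confounding) plus independent unit-variance noise."""
    return statistics.fmean(true_effect + bias + rng.gauss(0, 1) for _ in range(n))

rng = random.Random(42)
TRUE_EFFECT, BIAS = 0.0, 0.3  # hypothetical values chosen for illustration
for n in (100, 10_000, 100_000):
    est = biased_average(n, TRUE_EFFECT, BIAS, rng)
    print(f"n = {n:>7,}: estimate {est:.3f}  (true effect {TRUE_EFFECT})")
```

As n grows, the estimates settle near 0.3 rather than 0. A bigger pile of sow's ears only buys more precision about the wrong answer.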

----

“The first principle [of scientific integrity] is that you must not fool yourself – and you are the easiest person to fool. …. I would like to add something that’s not essential to the science, but something I kind of believe, which is that you should not fool the layman when you’re talking as a scientist. ….One example of the principle is this: If you’ve made up your mind to test a theory, or you want to explain some idea, you should always decide to publish it whichever way it comes out. If we only publish results of a certain kind, we can make the argument look good. We must publish <i>both</i> kinds of results.”

<div align=right>Richard Feynman, in [http://calteches.library.caltech.edu/3043/1/CargoCult.pdf “Cargo Cult Science”]<br>

Caltech’s 1974 commencement address</div>

Submitted by Margaret Cibes
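Feynman's warning about publishing only "results of a certain kind" can be made concrete with a simulation. The parameters are hypothetical. Many studies estimate an effect that is truly zero, each with a standard error of 0.2. Only the studies that come out positive and significant (z > 1.96) get "published."

```python
import random
import statistics

def publication_bias_demo(n_studies: int = 2000, se: float = 0.2,
                          true_effect: float = 0.0, seed: int = 1):
    """Simulate many studies of the same effect and 'publish' only those whose
    estimate is positive and significant (z > 1.96). Returns the mean estimate
    over all studies, the mean over published studies, and the number published."""
    rng = random.Random(seed)
    estimates = [rng.gauss(true_effect, se) for _ in range(n_studies)]
    published = [e for e in estimates if e / se > 1.96]
    return statistics.fmean(estimates), statistics.fmean(published), len(published)

all_mean, pub_mean, n_pub = publication_bias_demo()
print(f"true effect 0; mean of all studies {all_mean:+.3f}; "
      f"mean of the {n_pub} published studies {pub_mean:+.3f}")
```

The average over all studies sits near zero. The average over the published ones comes out well above zero, even though every study measured a null effect.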

----

From <i>Significance</i> magazine, April 2012:<br>

“It was a cause of great sorrow to me that I had absolutely no talent for the game [Liverpool football], or any sport other than chess, and I had to accept that I am a centre forward trapped inside a statistician’s body.”

<div align=right>"Dr Fisher’s casebook: Sporting life"</div>

“We see an apparently unending upward spiral in remarkable levels of athletic achievement …. I think a major contributor to this remarkable increase in proficiency is population size. …. A simple statistical model that captures this idea posits that human running ability has not changed over the past century. That, in both 1900 and 2000 the distribution of running ability of the human race is well characterized by a normal curve with the same average and the same variability. What has changed is how many people live under that curve. …. [T]wo factors are working together. There is the growth of the total population of the world. There is also the (non-parallel) growth of the population who can participate. …. The best of a billion is likely better than the best of a million.”

<div align=right>Howard Wainer in [http://www.significancemagazine.org/details/magazine/1755673/Piano-virtuosos-and-the-fourminute-mile.html Piano virtuosos and the four-minute mile].</div>

Submitted by Margaret Cibes
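Wainer's model, taken at face value, implies a simple calculation: running ability follows the same normal distribution in 1900 and 2000, and only more people are drawn from it. Assuming independent draws, the median of the best of n standard normal values solves Φ(x)^n = 1/2. The Python sketch below computes that median with the standard library's `statistics.NormalDist`. The function name is ours.

```python
import math
from statistics import NormalDist

def median_of_max(n: int) -> float:
    """Median, in SD units above the mean, of the largest of n independent
    standard normal draws.

    P(max <= x) = Phi(x)**n, so the median solves Phi(x) = 0.5**(1/n).
    We solve for the upper-tail probability 1 - 0.5**(1/n) directly,
    using expm1 to avoid cancellation when n is huge.
    """
    tail = -math.expm1(math.log(0.5) / n)
    return -NormalDist().inv_cdf(tail)

for n in (10**3, 10**6, 10**9):
    print(f"best of {n:>13,}: about {median_of_max(n):.2f} SD above the mean")
```

Under these assumptions, the best of a million sits a little under 5 standard deviations above the mean. The best of a billion sits a little over 6.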

----

"This chapter [37: Bayesian Inference and Sampling Theory] is only provided for those readers who are curious about the sampling theory / Bayesian methods debate. If you find any of this chapter tough to understand, please skip it. There is no point trying to understand the debate. Just use Bayesian methods--they are easier to understand than the debate itself!"

<div align=right>David MacKay in [http://www.inference.phy.cam.ac.uk/mackay/itila/book.html ''Information Theory, Inference, and Learning Algorithms''], p. 457</div>

Submitted by Paul Alper

==Forsooth==

"We're spending $70 per person to fill this [the American Community Survey] out. That’s just not cost effective," he continued, "especially since in the end this is not a scientific survey. It’s a random survey."

<div align=right> --Daniel Webster, first term Republican congressman from Florida</div>

As quoted in [http://www.nytimes.com/2012/05/20/sunday-review/the-debate-over-the-american-community-survey.html The beginning of the end of the Census?], by Catherine Rampell, ''New York Times'', 19 May 2012. Webster sponsored legislation in the House of Representatives that would eliminate the Survey. (More on this story [http://test.causeweb.org/wiki/chance/index.php/Chance_News_85#House_cuts_American_Community_Survey below].)

Submitted by Steve Simon

==Fish oil==

'''Discussion'''

1. They almost got the description of the optimal strategy for the famous [http://en.wikipedia.org/wiki/Secretary_problem Secretary Problem] correct. What is missing from this argument?

2. Do you believe that Conard actually used this system himself?
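For reference, here is the classical solution to the Secretary Problem. With n candidates seen in random order, pass over roughly the first n/e (about 37%) without hiring. Then hire the first candidate who beats everyone seen so far. As n grows, this rule picks the single best candidate with probability approaching 1/e. The short Python simulation below implements that textbook rule. It is our sketch, not Conard's system.

```python
import math
import random

def secretary_trial(n: int, rng: random.Random) -> bool:
    """Run the classic 1/e stopping rule once on n candidates in random order.
    Returns True if the rule ends up hiring the single best candidate."""
    ranks = list(range(n))            # rank n - 1 is the best candidate
    rng.shuffle(ranks)
    cutoff = round(n / math.e)        # observe, but never hire, the first ~n/e
    best_seen = max(ranks[:cutoff], default=-1)
    for r in ranks[cutoff:]:
        if r > best_seen:             # first candidate better than all before
            return r == n - 1
    return False                      # best was in the observation phase; no hire

rng = random.Random(2012)
n, trials = 100, 20_000
wins = sum(secretary_trial(n, rng) for _ in range(trials))
print(f"best candidate hired in {wins / trials:.3f} of trials; 1/e = {1 / math.e:.3f}")
```

With 100 candidates, the simulated success rate should land near 0.37.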

===Comments===

1. This is a long article, which presents Conard's argument as a defense of unbridled, winner-take-all competition. He rejects the idea that income inequality is a problem; indeed, he thinks even greater rewards are needed as incentives for risk-taking entrepreneurs, whose efforts to boost the economy will benefit everyone. As a reminder that we have heard this before, Paul Alper sent the following quote from a previous century:

<blockquote>The American Beauty Rose can be produced in the splendor and fragrance which bring cheer to its beholder only by sacrificing the early buds which grow up around it. This is not an evil tendency in business. It is merely the working-out of a law of nature and a law of God.</blockquote>

<div align=right>--John D. Rockefeller, address to the students of Brown University, quoted in Ida Tarbell (1904) ''The History of the Standard Oil Company''</div>

2. See also the post [http://economix.blogs.nytimes.com/2012/05/07/incentive-perversity/ Incentive perversity], Economix blog, ''New York Times'', 7 May 2012. University of Massachusetts economist Nancy Folbre reminds us that the links between rewards and performance are not so clear cut, and warns that incentives can be distorted when economic rewards grow too extreme. As evidence, she points out that high-stakes educational testing led schools to cheat on exams, and out-sized contracts for star athletes led to an era tainted by performance-enhancing drugs. She concludes that, "Good incentives are always a good idea. But it’s not as easy to design them as it might seem, because they should discourage a host of economic sins — not just sloth and fear, but also cruelty and greed."

==TV and the shortening of life==

</blockquote>

Needless to say, this highly speculative--but very quotable--statistical analysis has been picked up by every conceivable web site since last August. It just made its appearance in the Minneapolis ''Star Tribune'': [http://www.startribune.com/lifestyle/150359955.html Can TV cut your life short?], by Jeff Strickler, 7 May 2012. The ''New York Times'' mentioned it a week earlier: [http://www.nytimes.com/2012/04/29/sunday-review/stand-up-for-fitness.html?_r=1&hp Don’t just sit there], by Gretchen Reynolds, 28 April 2012.

Submitted by Paul Alper

===Discussion===

1. The NYT article describes a number of studies concerning the ill effects of inactivity. Their entire description of the Australian study reads, "researchers determined that watching an hour of television can snip 22 minutes from someone’s life. If an average man watched no TV in his adult life, the authors concluded, his life span might be 1.8 years longer, and a TV-less woman might live for a year and half longer than otherwise." What is missing here?

2. Elsewhere in the story, however, the NYT notes that "Television viewing is a widely used measure of sedentary time." What does this suggest about interpreting the Australian study?
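One way to probe question 1 is to check what the two quoted figures imply together. If an hour of TV costs 22 minutes of life, and a man's lifetime of viewing costs 1.8 years, then the implied lifetime viewing follows directly. The 55-year span of adult viewing below is our assumption. It is used only to turn the total into a daily figure.

```python
MINUTES_PER_YEAR = 365.25 * 24 * 60

def implied_tv_hours(life_years_lost: float, minutes_lost_per_hour: float = 22.0) -> float:
    """Lifetime TV hours that, at the quoted rate, would cost life_years_lost years."""
    return life_years_lost * MINUTES_PER_YEAR / minutes_lost_per_hour

hours = implied_tv_hours(1.8)   # the NYT's figure for men
adult_years = 55                # hypothetical span of adult viewing
print(f"{hours:,.0f} hours in total, about {hours / (adult_years * 365.25):.1f} hours per day")
```

That works out to about two hours a day under the 55-year assumption. The lifetime figure is just the per-hour estimate scaled up, so it can be no more trustworthy than that estimate.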

==Approval statistic== | =="Approval" statistic== | ||

[http://jonathanpelto.com/2012/05/07/memo-to-connecticut-democrats-only-all-others-should-skip-this-post/ “Memo to Connecticut Democrats”], May 7, 2012<br | [http://jonathanpelto.com/2012/05/07/memo-to-connecticut-democrats-only-all-others-should-skip-this-post/ “Memo to Connecticut Democrats”], by Jonathan Pelto, May 7, 2012<br> | ||

From a CT blogger's website: | |||

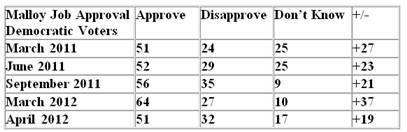

<blockquote>The following chart indicates how Connecticut Democratic voters rate Governor Malloy’s job performance. In politics we use a statistic that measures the rate of approval compared to the rate of disapproval – we call that the overall positive or negative rating of an individual (i.e. +/-). The higher the positive rating the better the candidate or elected official is doing.</blockquote> | |||

[[File:Malloy_polls.jpg]] | [[File:Malloy_polls.jpg]] | ||

===Questions=== | ===Questions=== | ||

1. Can you think of a reason why the June 2011 poll figures sum to 106?<br> | 1. Can you think of a reason why the June 2011 poll figures sum to 106?<br> | ||

2. The rightmost column heading might suggest that these figures are margins of error (percentage points), except for their size. If they had been margins of error, about how many people would have been in the sample on March 2011? Is that realistic?<br> | 2. The rightmost column heading might suggest that these figures are margins of error (percentage points), except for their size. If they had been margins of error, about how many people would have been in the sample on March 2011? Is that realistic?<br> | ||

3. According to the text, the rightmost column contains a “statistic that measures the rate of approval compared to the rate of disapproval.” How do you think that the | 3. According to the text, the rightmost column contains a “statistic that measures the rate of approval compared to the rate of disapproval.” How do you think that the blogger compared approval/disapproval figures to come up with the figures in the rightmost column? (While I couldn’t find a definition of “approval rating,” I did found that the blogger's "statistic" is pretty common; for example, see Wikipedia's [http://en.wikipedia.org/wiki/United_States_presidential_approval_rating "United States presidential approval rating"].)<br> | ||

4. How might you have entitled the +/- column, in order to clarify its meaning?<br> | 4. How might you have entitled the +/- column, in order to clarify its meaning?<br> | ||

5. The blogger opens the article by stating that Malloy's "support from members of [his] own party ... is at a breathtakingly low + 19 percent." Do you agree? What would you have said?<br> | |||
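Questions 2 and 3 involve two standard calculations, sketched below in Python. The first assumes that the +/- figure is approval minus disapproval, in percentage points. Question 3 suggests this reading, and it is common for presidential ratings. The approval and disapproval numbers used are hypothetical, not read from Pelto's chart. The second calculation uses the usual conservative 95% margin-of-error formula, MOE ≈ 1.96·√(0.25/n), solved for n.

```python
import math

def net_rating(approve_pct: float, disapprove_pct: float) -> float:
    """Net ('+/-') rating: approval minus disapproval, in percentage points.
    This is our reading of the blogger's statistic, not a definition he gives."""
    return approve_pct - disapprove_pct

def sample_size_for_moe(moe_points: float, z: float = 1.96) -> int:
    """Smallest n whose conservative (p = 0.5) 95% margin of error is at most
    moe_points percentage points: solve z * sqrt(0.25 / n) <= moe for n."""
    moe = moe_points / 100
    return math.ceil(z**2 * 0.25 / moe**2)

# Hypothetical poll: 55% approve, 36% disapprove.
print(net_rating(55, 36))          # → 19, a net rating of +19 points
print(sample_size_for_moe(19))     # → 27, if "19" were a margin of error
print(sample_size_for_moe(3))      # → 1068, for a 3-point margin, common in national polls
```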

Submitted by Margaret Cibes

==Happiness and variability==

[http://www.usatoday.com/news/health/wellness/story/2012-05-03/parents-happiness-population-survey/54767508/1? Parents today are happier than non-parents, studies suggest]<br>

by Sharon Jayson, ''USA Today'', 3 May 2012

This article drew commentary on Andrew Gelman's blog ([http://andrewgelman.com/2012/05/happy-news-on-happiness-what-can-we-believe/ Happy news on happiness; what can we believe?], 7 May 2012). Gelman notes that the article concludes with the following quote: <blockquote>The first child increases happiness quite a lot. The second child a little. The third not at all.</blockquote>

The quote is attributed to Mikko Myrskylä, the coauthor of [http://onlinelibrary.wiley.com/doi/10.1111/j.1728-4457.2011.00389.x/abstract A Global Perspective on Happiness and Fertility], one of the two studies described in the article.

Gelman then complains, "As a statistician, I hate hate hate hate hate when people ignore variability and present results deterministically. The above statement might be an accurate summary of average patterns but is certainly not true in every case!"
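Gelman's distinction between an average pattern and every individual case can be put in numbers. Suppose, purely hypothetically, that a first child raises happiness by 0.3 points on average, and that individual effects are normally distributed with a standard deviation of 0.5. These are not figures from Myrskylä's study. Under those assumptions, a sizable minority of parents would be made less happy, even though the first child increases happiness on average.

```python
from statistics import NormalDist

# Hypothetical distribution of individual effects of a first child on happiness.
effect = NormalDist(mu=0.3, sigma=0.5)

share_less_happy = effect.cdf(0.0)    # P(individual effect < 0)
print(f"average effect: {effect.mean:+.1f} points")
print(f"share of parents made less happy: {share_less_happy:.0%}")
```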

Submitted by Paul Alper

==House cuts American Community Survey==

[http://www.nytimes.com/2012/05/14/opinion/operating-in-the-dark.html?_r=2 “Operating in the dark”], Editorial, <i>New York Times</i>, May 13, 2012<br>

[http://www.washingtonpost.com/blogs/ezra-klein/post/does-government-knowledge-mean-government-intrusion/2012/05/13/gIQAznUtMU_blog.html “Does government knowledge mean government intrusion?”], by Suzy Khimm, <i>Washington Post</i>, May 13, 2012<br>

Last week the U.S. House of Representatives voted to cut funds for the American Community Survey and at least part of the Economic Census. The former, “a bipartisan creation” in 2005, provides <i>annual</i> updates of economic, demographic and housing characteristics, which supplement the <i>decennial</i> census information. Let’s see what the Senate does ….

See the U.S. Census Bureau Director’s statement (and video) [http://directorsblog.blogs.census.gov/2012/05/11/a-future-without-key-social-and-economic-statistics-for-the-country/ here].

A blog post from the American Statistical Association website can be found [http://community.amstat.org/AMSTAT/Blogs/BlogViewer/?BlogKey=05b8d607-c521-4d51-8228-b6b2f0826073 here]. It calls the vote "a blow to smart, efficient government and data-driven decisionmaking."

Submitted by Margaret Cibes

==Falling survey response rates== | |||

[http://www.washingtonpost.com/blogs/behind-the-numbers/post/political-surveys-survive-response-fall-off-pew-finds/2012/05/15/gIQAdo0gRU_blog.html Political surveys survive response fall-off, Pew finds]<br> | |||

by Scott Clement, Behind the Numbers blog, ''Washington Post'', 15 May 2012 | |||

When I discuss polling in my introductory statistics classes, and mention random digit dialing, like to reference the sidebar "How the Poll Was Conducted" that accompanies NYT/CBS polls, since it includes an unusually complete summary of the process. Regarding a [http://www.nytimes.com/2012/04/19/us/how-the-poll-was-conducted.html?ref=politics poll from this spring] we read | |||

<blockquote>The sample of land-line telephone exchanges called was randomly selected by a computer from a complete list of more than 72,000 active residential exchanges across the country. The exchanges were chosen so as to ensure that each region of the country was represented in proportion to its share of all telephone numbers. | |||

<br><br> | |||

Within each exchange, random digits were added to form a complete telephone number, thus permitting access to listed and unlisted numbers alike. Within each household, one adult was designated by a random procedure to be the respondent for the survey. | |||

<br><br> | |||

To increase coverage, this land-line sample was supplemented by respondents reached through random dialing of cellphone numbers. The two samples were then combined and adjusted to assure the proper ratio of land-line-only, cellphone-only, and dual phone users. | |||

<br><br> | |||

Interviewers made multiple attempts to reach every phone number in the survey, calling back unanswered numbers on different days at different times of both day and evening. | |||

</blockquote> | |||

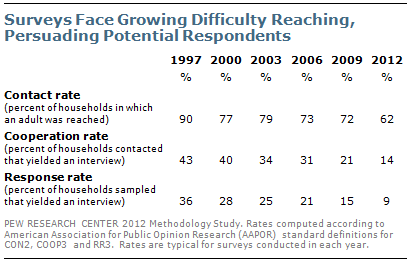

This sounds impressive. Not mentioned, however, is the actual success rate in reaching potential respondents. The ''Washington Post'' blog references a recent Pew Rearch Center report, [http://www.people-press.org/2012/05/15/assessing-the-representativeness-of-public-opinion-surveys/ Assessing the Representativeness of Public Opinion Surveys], which finds that the average response rate to political polls has steadily fallen over the last decade and a half, and is now below 10%! The table below is reproduced from the report. | |||

<center>[[File:Pew_surveys.png]]</center> | |||

This sounds truly abysmal. Surprisingly, Pew reports that the accuracy of polling results does not appear to be suffering. To assess this, they conducted surveys using their standard methodology, which involves dialing randomly selected cellphone and land line numbers over a 5-day period, and then weighting results to reflect demographic proportions in the overall population. Interviewers solicited information that could be compared to data from major government surveys where sustained followups produce response rates over 75%. Substantial agreement was found on variables such as gender, age, race, citizenship, marital status, home ownership and health status. One conspicuous exception was education: 39% of respondents in the Pew survey say they graduated from college, as opposed to the 28% figure found by the Current Population Survey. | |||
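The weighting step Pew describes can be sketched for a single variable. In post-stratification, each respondent in group g gets a weight equal to (population share of g) / (sample share of g). The Python below uses the education figures quoted above: 39% college graduates among respondents, versus 28% in the Current Population Survey. It is meant only to show the mechanics. We are not claiming this is how Pew constructed its weights, and the group-level "yes" rates are invented.

```python
def poststrat_weights(sample_share: dict[str, float],
                      pop_share: dict[str, float]) -> dict[str, float]:
    """Weight for each group = population share / sample share."""
    return {g: pop_share[g] / sample_share[g] for g in sample_share}

sample = {"college": 0.39, "no college": 0.61}      # Pew respondents
population = {"college": 0.28, "no college": 0.72}  # Current Population Survey
weights = poststrat_weights(sample, population)

# Hypothetical share answering "yes" to some survey question, by group.
yes_rate = {"college": 0.60, "no college": 0.40}
unweighted = sum(sample[g] * yes_rate[g] for g in sample)
weighted = sum(sample[g] * weights[g] * yes_rate[g] for g in sample)
print(f"unweighted: {unweighted:.3f}, weighted: {weighted:.3f}")
```

Weighting can only rebalance the variables it uses. It cannot correct differences between respondents and nonrespondents within those groups.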

Some complementary ideas are discussed in [http://jama.jamanetwork.com/article.aspx?volume=307&issue=17&page=1805 Response rates and nonresponse errors in surveys], by Timothy P. Johnson and Joseph S. Wislar, ''JAMA'', 2 May, 2012. | |||

'''Discussion''' | |||

Can you suggest possible reasons for the disparity on education in the Pew report? | |||

Submitted by Bill Peterson | |||

==Overdiagnosis== | |||

[http://www.bmj.com/content/344/bmj.e3502.full?ijkey=tzRK2ncLto2JJ9I&keytype=ref Preventing overdiagnosis: how to stop harming the healthy]<br> | |||

by Ray Moynihan, et. al.,, ''BMJ'', 29 May 2012 | |||

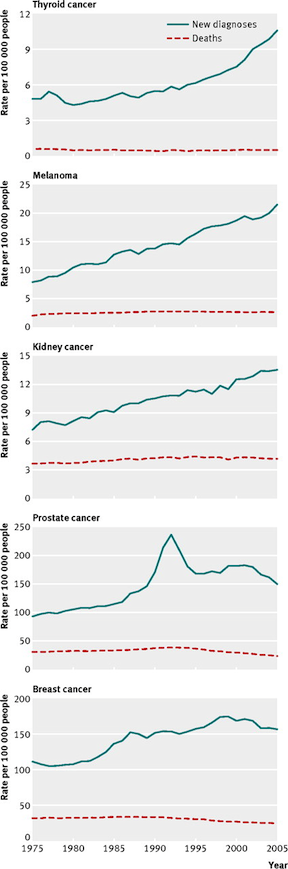

These graphs below are reproduced from the article (full-sized version [http://www.bmj.com/highwire/filestream/586982/field_highwire_fragment_image_l/0.jpg here]). They show mortality rates and rates of new diagnosis for five kinds of cancer over the 30-year period 1975-2005. Observe that while mortality has been roughly constant, treatment is way up: | |||

<center>[[File:cancer_rates.png]]</center> | |||

The authors contend that | |||

<blockquote>Medicine’s much hailed ability to help the sick is fast being challenged by its propensity to harm the healthy. A burgeoning scientific literature is fuelling public concerns that too many people are being overdosed, overtreated, and overdiagnosed. Screening programmes are detecting early cancers that will never cause symptoms or death, sensitive diagnostic technologies identify 'abnormalities' so tiny they will remain benign, while widening disease definitions mean people at ever lower risks receive permanent medical labels and lifelong treatments that will fail to benefit many of them. With estimates that more than $200bn (£128bn; €160bn) may be wasted on unnecessary treatment every year in the United States, the cumulative burden from overdiagnosis poses a significant threat to human health. | |||

</blockquote> | |||
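The pattern in the graphs, with diagnoses rising while mortality stays flat, suggests a crude back-of-the-envelope measure. Assume, strongly, that the true number of cancers destined to cause harm has not changed. Then the share of current diagnoses in excess of the earlier rate is 1 − (old rate / new rate). The rates below are hypothetical, not values read from the BMJ figure. A rise in incidence could also reflect a real increase in disease.

```python
def excess_diagnosis_share(old_rate: float, new_rate: float) -> float:
    """Fraction of current diagnoses above the earlier rate.

    Only a crude signal of overdiagnosis: it assumes the burden of harmful
    disease is unchanged (flat mortality is consistent with, but does not
    prove, that assumption)."""
    if new_rate <= 0:
        raise ValueError("new_rate must be positive")
    return max(0.0, 1 - old_rate / new_rate)

# Hypothetical incidence per 100,000 people, early versus late in a period.
print(f"{excess_diagnosis_share(100, 150):.0%} of current diagnoses exceed the old rate")
```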

Submitted by Paul Alper

===Notes===

For related discussion, see [http://test.causeweb.org/wiki/chance/index.php/Chance_News_74#Overdiagnosed.2C_overtreated Chance News 74: Overdiagnosed, overtreated], which described H. Gilbert Welch’s book [http://www.amazon.com/Overdiagnosed-Making-People-Pursuit-Health/dp/0807022004/ref=sr_1_1?ie=UTF8&qid=1308760393&sr=8-1 <i>Overdiagnosed: Making People Sick In The Pursuit of Health</i>]. More recently, Welch wrote an op-ed piece entitled [http://www.nytimes.com/2012/02/28/opinion/overdiagnosis-as-a-flaw-in-health-care.html If you feel O.K., maybe you are O.K.] for the ''New York Times'' (27 February 2012).

==PSA, overdiagnosis, and anecdotal evidence==

[http://www.npr.org/blogs/health/2012/05/28/153879847/with-psa-testing-the-power-of-anecdote-often-trumps-statistics With PSA testing, the power of anecdote often trumps statistics]<br>

by Richard Knox, ''NPR: All Things Considered'', 28 May 2012

One of the charts in the preceding post concerns prostate cancer. The US Preventive Services Task Force has recently issued a recommendation against routine PSA screening for prostate cancer; NPR reported that story [http://www.npr.org/blogs/health/2012/05/21/153234671/all-routine-psa-tests-for-prostate-cancer-should-end-task-force-says here]. The Task Force recommendation statement and related materials are freely available from the [http://www.annals.org/content/early/2012/05/21/0003-4819-157-2-201207170-00459.full ''Annals of Internal Medicine''], where we read:

<blockquote>

There is convincing evidence that PSA-based screening leads to substantial overdiagnosis of prostate tumors. The amount of overdiagnosis of prostate cancer is of important concern because a man with cancer that would remain asymptomatic for the remainder of his life cannot benefit from screening or treatment. There is a high propensity for physicians and patients to elect to treat most cases of screen-detected cancer, given our current inability to distinguish tumors that will remain indolent from those destined to be lethal. Thus, many men are being subjected to the harms of treatment of prostate cancer that will never become symptomatic.

</blockquote>

Such recommendations predictably generate a backlash from those who feel they have benefited from the procedures. The present story chronicles some of these reactions for the case of PSA screening. It quotes one survivor, who believes that surgery after a positive test result saved his life:

<blockquote>

My theory on statistics is anybody can look at the same stats and come up with their own opinion. Government does it; each political party does it. Whatever you want it to come up to read, you can fine-tune it and make it come up to that.

</blockquote>

Ohio State University psychology professor Hal Arkes finds that for most people, such anecdotal testimony trumps statistical reasoning, which leads to "gross over-estimation of the benefits of PSA screening." He says:

<blockquote>

Statistics are dry and they're boring and they're hard to understand. They don't have the impact of someone standing in front of you telling their heart-rending story. I think this is common to just about everybody.

</blockquote>

The NPR story concludes with remarks from Michael Barry, who heads the Informed Medical Decisions Foundation in Boston. Barry worries about the reaction to a blanket recommendation from an expert panel. Instead, he recommends that doctors explain to patients individually that screening has been shown to be of little benefit. He says, "I think many men won't want the test in that circumstance. But some will, and I'm comfortable with that."

'''Discussion'''<br>

For most people, do you think their doctor's description of the statistical evidence would be able to counter a compelling anecdote from someone they know?

Submitted by Bill Peterson

==Commas and communication==

[http://opinionator.blogs.nytimes.com/2012/05/25/some-comma-questions/?hp Some comma questions]<br>

by Ben Yagoda, ''New York Times'', 25 May 2012

Most of the article is about the use of the comma in (American) English writing. But it includes the following quote from Geron James Spray, an English teacher in Santa Fe, N.M.:

<blockquote>

Being an English teacher puts one in an interesting conundrum. Many people think that if you understand what they say or write, then they’ve said it or written it correctly and it needs no correction or improvement. For example, if a student walks into a math class and says, “Five times five equals 20,” then of course it’s the math teacher’s job to correct that student and the student won’t take offense. However, if a student walks into my class and says, “I did that real good,” and I correct her, then she’ll roll her eyes and say, “Oh, whatever, you know what I meant.”

</blockquote>

Although not strictly about probability or statistics, the quote illustrates the difference between two kinds of discourse, scientific and humanistic.

Submitted by Paul Alper

==Can exercise be bad?==

[http://well.blogs.nytimes.com/2012/05/30/can-exercise-be-bad-for-you/ For some, exercise may increase heart risk]<br>

by Gina Kolata, ''New York Times'', 30 May 2012

Is exercise good for everyone? Maybe not, according to a recent analysis. We read here that:

<blockquote>

By analyzing data from six rigorous exercise studies involving 1,687 people, the group found that about 10 percent actually got worse on at least one of the measures related to heart disease: blood pressure and levels of insulin, HDL cholesterol or triglycerides. About 7 percent got worse on at least two measures. And the researchers say they do not know why.

</blockquote>

The story drew comment on Andrew Gelman's blog [http://andrewgelman.com/2012/06/massive-confusion-about-a-study-that-purports-to-show-that-exercise-may-increase-heart-risk/ Massive confusion about a study that purports to show that exercise may increase heart risk] (4 June 2012). Andrew points out that, since we know that individuals vary, we should not be utterly surprised that some people failed to improve or even declined. Furthermore, this is a simple before-and-after observational comparison, and Andrew is unconvinced by assertions that other potential explanations for the decline had been ruled out. See the full post and submitted comments for interesting discussion.
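Gelman's point about individual variation, and the reader's "would have showed these symptoms anyway" below, can be illustrated with a noise-only simulation. This is our sketch, with hypothetical settings. Exercise has no true effect at all, and each of four measures is read once before and once after, with independent measurement noise. A change counts as "worse" if it moves in the adverse direction by more than two noise standard deviations. The 1,687 people and four measures echo the quote. The noise level and threshold are assumptions, not the study's.

```python
import random

def share_worse_by_chance(n_people: int, n_measures: int = 4, noise_sd: float = 1.0,
                          threshold_sd: float = 2.0, seed: int = 0) -> float:
    """Fraction of people who appear worse on at least one measure when exercise
    has no true effect.

    Each before and after reading is the person's fixed true value plus
    independent measurement noise, so each change is pure noise. We treat a
    positive change as adverse (with symmetric noise the direction does not
    matter) and count it as 'worse' if it exceeds threshold_sd * noise_sd.
    """
    rng = random.Random(seed)
    worse = 0
    for _ in range(n_people):
        changes = [rng.gauss(0, noise_sd) - rng.gauss(0, noise_sd)
                   for _ in range(n_measures)]
        if any(c > threshold_sd * noise_sd for c in changes):
            worse += 1
    return worse / n_people

print(f"{share_worse_by_chance(1687):.1%} look worse on at least one of four measures")
```

With these made-up settings, a substantial fraction of people "get worse" through measurement noise alone. The study's actual thresholds and noise levels are not modeled here, so the number is not comparable to its 10%. The point is only that individual before-and-after changes must be judged against how much the measurements fluctuate anyway.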

Finally, one ''NYT'' reader posted this comment to the article:

<blockquote>

It is possible, that 10% of the participants in this study would have showed these symptoms anyway-regardless of their participation in this exercise study. The research design, should have included a "return to baseline" phase. In other words, treatment/exercise should have been removed and participants should have been observed for changes. If this was done, it is not mentioned.

</blockquote>

Submitted by Bill Peterson

Latest revision as of 20:11, 5 July 2013


Quotations

“Journalists could help people grasp uncertainty and help them apply critical thinking to health care decision-making issues…rather than promote false certainty, shibboleths and non-evidence-based, cheerleading advocacy.”

"To treat your facts with imagination is one thing; to imagine your facts is another."

Science Writing in the Age of Denial, University of Wisconsin, Madison

Submitted by Paul Alper

"A big computer, a complex algorithm and a long time does not equal science."

Submitted by Bill Peterson

Fish oil

Weighing the evidence on fish oils for heart health

by Anahad O’Connor, Well blog, New York Times, 11 April 2012

According to O'Connor,

Fish oil supplements have become some of the most popular dietary pills on the market, largely on the strength of medical research linking diets high in baked and broiled fish to lower rates of heart disease. Across the United States, annual sales of purified fish oil, commonly sold as omega-3 fatty acids, are in the neighborhood of a billion dollars. And in some parts of Europe, doctors routinely prescribe fish oils to patients with heart disease.

People who put their faith in fish oil supplements may want to reconsider. A new analysis of the evidence casts doubt on the widely touted notion that the pills can prevent heart attacks in people at risk for cardiovascular disease.

And well the people might. O’Connor is referring to “Efficacy of Omega-3 Fatty Acid Supplements (Eicosapentaenoic Acid and Docosahexaenoic Acid) in the Secondary Prevention of Cardiovascular Disease: A Meta-analysis of Randomized, Double-blind, Placebo-Controlled Trials” by S.M. Kwak, et al., to appear in the Archives of Internal Medicine. The authors write:

Our meta-analysis showed insufficient evidence of a secondary preventive effect of omega-3 fatty acid supplements against overall cardiovascular events among patients with a history of cardiovascular disease,

They add:

Furthermore, no significant preventive effect was observed in subgroup analyses by the following: country location, inland or coastal geographic area, history of CVD, concomitant medication use, type of placebo material in the trial, methodological quality of the trial, duration of treatment, dosage of eicosapentaenoic acid [EPA] or docosahexaenoic acid [DHA], or use of fish oil supplementation only as treatment.

Discussion

1. The authors started their meta-analysis with 1007 articles; eventually, after 181 studies were excluded as duplicates and others were dropped for various other reasons, they were left with “14 randomized, double blind, placebo-controlled trials.” The total number of subjects in the 14 trials was 20,485. As stated above, statistical significance was not to be seen. Two large studies that did show beneficial effects from fish oil, with 11,234 and 18,645 subjects respectively, were not included in the 14; they were rejected because they were “open-label” studies. Why are open-label studies suspect?

2. Why did the subjects in the placebo arm of the 14 studies receive various vegetable oils? Some of those subjects in the placebo arm received olive oil. Why might this “have disguised the ‘true’ benefit of omega-3 fatty acid supplementation?”

3. If not fish oil, then what? O’Connor says the authors conclude that

it may make the most sense to spend your money on actual fish, rather than fish oil supplements.

They argue that by eating fish, you end up replacing other less healthy protein sources, like processed foods and red meat. For that reason, a diet high in fatty fish — one that includes at least two servings a week — may make a difference over the long term, they say.

If the above is correct, why are so many people eschewing fish for fish oil?
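Question 1 turns on which trials go into the pooled estimate. The article does not say exactly how Kwak et al. combined their 14 trials (fixed- or random-effects). The sketch below shows the common inverse-variance (fixed-effect) approach as an illustration, using made-up log relative risks rather than the study's data. Each trial is weighted by the inverse of its variance, so large, precise trials dominate. That is also why admitting or excluding two trials with over 11,000 and 18,000 subjects could matter a great deal.

```python
import math

def fixed_effect_pool(log_effects, std_errors, z=1.96):
    """Inverse-variance (fixed-effect) pooling of per-trial log effect sizes.

    Returns the pooled log effect and a (lower, upper) confidence interval.
    """
    weights = [1.0 / se**2 for se in std_errors]   # more precise trials get more weight
    total = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, log_effects)) / total
    pooled_se = math.sqrt(1.0 / total)               # SE of the weighted mean
    return pooled, (pooled - z * pooled_se, pooled + z * pooled_se)

# Hypothetical trials (NOT the Kwak et al. data): log relative risks and standard errors
log_rr = [math.log(0.90), math.log(1.05), math.log(0.97)]
se = [0.10, 0.15, 0.08]
est, (lo, hi) = fixed_effect_pool(log_rr, se)
print(f"pooled RR = {math.exp(est):.2f}, 95% CI {math.exp(lo):.2f} to {math.exp(hi):.2f}")
```

A random-effects model would add a between-trial variance term to each weight, which widens the interval when trials disagree.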

Submitted by Paul Alper

Choosing a spouse--really?

The purpose of spectacular wealth, according to a spectacularly wealthy guy

by Adam Davidson, New York Times Magazine, 1 May 2012

Davidson describes an interview with Edward Conard, one of Mitt Romney's former associates from Bain Capital, who has written a book entitled Unintended Consequences: Why Everything You’ve Been Told About the Economy Is Wrong. It is amusing to note the following passage, which appears about halfway through the article:

There’s also the fact that Conard applies a relentless, mathematical logic to nearly everything, even finding a good spouse. He advocates, in utter seriousness, using demographic data to calculate the number of potential mates in your geographic area. Then, he says, you should set aside a bit of time for “calibration” — dating as many people as you can so that you have a sense of what the marriage marketplace is like. Then you enter the selection phase, this time with the goal of picking a permanent mate. The first woman you date who is a better match than the best woman you met during the calibration phase is, therefore, the person you should marry. By statistical probability, she is as good a match as you’re going to get. (Conard used this system himself.)

Discussion

1. They almost got the description of the optimal strategy for the famous Secretary Problem correct. What is missing from this argument?

2. Do you believe that Conard actually used this system himself?
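For readers who want to explore question 1, here is a sketch of the classic Secretary Problem rule. Reject the first r of n candidates, then take the first one who beats everyone seen so far. The goal is to pick the single best candidate. The exact success probability is (r/n) times the sum of 1/(i-1) for i from r+1 to n, and a small simulation checks it. The pool size n = 100, the seed and the trial count are arbitrary choices for illustration; real dating pools do not arrive in random order with a known size.

```python
import math
import random

def exact_success_prob(n: int, r: int) -> float:
    """P(picking the single best of n) when rejecting the first r candidates,
    then taking the first one better than all of them."""
    if r == 0:
        return 1.0 / n          # no calibration: just take the first candidate
    return (r / n) * sum(1.0 / (i - 1) for i in range(r + 1, n + 1))

def simulate(n: int, r: int, trials: int, rng: random.Random) -> float:
    """Monte Carlo check of the same rule; rank n - 1 is the best candidate."""
    wins = 0
    for _ in range(trials):
        ranks = list(range(n))
        rng.shuffle(ranks)
        benchmark = max(ranks[:r], default=-1)  # best seen during "calibration"
        chosen = ranks[-1]                      # if nobody beats it, we end with the last one
        for x in ranks[r:]:
            if x > benchmark:
                chosen = x
                break
        wins += (chosen == n - 1)
    return wins / trials

n = 100
best_r = max(range(n), key=lambda r: exact_success_prob(n, r))
print(best_r, round(exact_success_prob(n, best_r), 3), round(n / math.e, 1))
print(round(simulate(n, best_r, 10_000, random.Random(1)), 3))
```

The best calibration length comes out near n/e, or about 37% of the pool. Even then the rule finds the very best candidate only about 37% of the time.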

Submitted by Charles Grinstead

Comments

1. This is a long article, which presents Conard's argument as a defense of unbridled, winner-take-all competition. He rejects the idea that income inequality is a problem; indeed, he thinks even greater rewards are needed as incentives for risk-taking entrepreneurs, whose efforts to boost the economy will benefit everyone. As a reminder that we have heard this before, Paul Alper sent the following quote from a previous century:

The American Beauty Rose can be produced in the splendor and fragrance which bring cheer to its beholder only by sacrificing the early buds which grow up around it. This is not an evil tendency in business. It is merely the working-out of a law of nature and a law of God.

2. See also the post Incentive perversity, Economix blog, New York Times, 7 May 2012. University of Massachusetts economist Nancy Folbre reminds us that the links between rewards and performance are not so clear cut, and warns that incentives can be distorted when economic rewards grow too extreme. As evidence, she points out that high-stakes educational testing led schools to cheat on exams, and outsized contracts for star athletes led to an era tainted by performance-enhancing drugs. She concludes that, "Good incentives are always a good idea. But it’s not as easy to design them as it might seem, because they should discourage a host of economic sins — not just sloth and fear, but also cruelty and greed."

TV and the shortening of life

Television viewing time and reduced life expectancy: a life table analysis

by J Lennert Veerman, et al., British Journal of Sports Medicine, 15 August 2011

From the online abstract we read:

Results: The amount of TV viewed in Australia in 2008 reduced life expectancy at birth by 1.8 years (95% uncertainty interval (UI): 8.4 days to 3.7 years) for men and 1.5 years (95% UI: 6.8 days to 3.1 years) for women. Compared with persons who watch no TV, those who spend a lifetime average of 6 h/day watching TV can expect to live 4.8 years (95% UI: 11 days to 10.4 years) less. On average, every single hour of TV viewed after the age of 25 reduces the viewer's life expectancy by 21.8 (95% UI: 0.3–44.7) min. This study is limited by the low precision with which the relationship between TV viewing time and mortality is currently known.

Conclusions: TV viewing time may be associated with a loss of life that is comparable to other major chronic disease risk factors such as physical inactivity and obesity.

Needless to say, this highly speculative--but very quotable--statistical analysis has been picked up by every conceivable web site since last August. It just made its appearance in the Minneapolis Star Tribune: Can TV cut your life short?, by Jeff Strickler, 7 May 2012. The New York Times mentioned it a week earlier: Don’t just sit there, by Gretchen Reynolds, 28 April 2012.
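A crude back-of-envelope check shows how the abstract's per-hour figure scales. This is not the life-table method used in the paper. It simply multiplies total viewing hours by a constant loss per hour. The viewing span (age 25 to an assumed age 80) is a hypothetical choice.

```python
MINUTES_PER_YEAR = 60 * 24 * 365.25

def years_lost(hours_per_day: float, start_age: float, end_age: float,
               minutes_lost_per_hour: float = 21.8) -> float:
    """Naive linear extrapolation: total viewing hours times a constant per-hour loss.
    NOT the paper's life-table model; it only checks the order of magnitude."""
    total_hours = hours_per_day * 365.25 * (end_age - start_age)
    return total_hours * minutes_lost_per_hour / MINUTES_PER_YEAR

# Hypothetical viewer: 6 h/day from age 25 to age 80 (the end age is an assumption).
for rate in (0.3, 21.8, 44.7):  # lower bound, point estimate, upper bound from the abstract
    print(f"{rate:>5} min/h -> {years_lost(6, 25, 80, rate):.2f} years")
```

The point estimate gives roughly five years, the same ballpark as the abstract's 4.8. The uncertainty interval stretches from under a month to about ten years, which shows how imprecise the underlying relationship is.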

Submitted by Paul Alper

Discussion

1. The NYT article describes a number of studies concerning the ill effects of inactivity. Their entire description of the Australian study reads, "researchers determined that watching an hour of television can snip 22 minutes from someone’s life. If an average man watched no TV in his adult life, the authors concluded, his life span might be 1.8 years longer, and a TV-less woman might live for a year and half longer than otherwise." What is missing here?

2. Elsewhere in the story, however, the NYT notes that "Television viewing is a widely used measure of sedentary time." What does this suggest about interpreting the Australian study?

"Approval" statistic

“Memo to Connecticut Democrats”, by Jonathan Pelto, May 7, 2012

From a CT blogger's website:

The following chart indicates how Connecticut Democratic voters rate Governor Malloy’s job performance. In politics we use a statistic that measures the rate of approval compared to the rate of disapproval – we call that the overall positive or negative rating of an individual (i.e. +/-). The higher the positive rating the better the candidate or elected official is doing.

Questions

1. Can you think of a reason why the June 2011 poll figures sum to 106?

2. The rightmost column heading might suggest that these figures are margins of error (percentage points), except for their size. If they had been margins of error, about how many people would have been in the sample in March 2011? Is that realistic?

3. According to the text, the rightmost column contains a “statistic that measures the rate of approval compared to the rate of disapproval.” How do you think that the blogger compared approval/disapproval figures to come up with the figures in the rightmost column? (While I couldn’t find a definition of “approval rating,” I did find that the blogger's "statistic" is pretty common; for example, see Wikipedia's "United States presidential approval rating".)

4. How might you have entitled the +/- column, in order to clarify its meaning?

5. The blogger opens the article by stating that Malloy's "support from members of [his] own party ... is at a breathtakingly low + 19 percent." Do you agree? What would you have said?
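For question 2, the usual 95% margin of error for a proportion from a simple random sample is 1.96 times the square root of p(1-p)/n. Solving for n with the conservative p = 0.5 gives the sketch below. It ignores design effects from weighting. Because the chart's figures are not reproduced here, the 19-point margin is purely illustrative, chosen to echo the +19 figure quoted in question 5.

```python
import math

def sample_size_for_margin(margin: float, z: float = 1.96, p: float = 0.5) -> int:
    """Smallest simple-random-sample size whose 95% margin of error for a
    proportion is at most `margin` (conservative p = 0.5 by default)."""
    return math.ceil(z**2 * p * (1 - p) / margin**2)

print(sample_size_for_margin(0.03))  # a typical national-poll margin of +/- 3 points
print(sample_size_for_margin(0.19))  # an illustrative +/- 19 points
```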

Submitted by Margaret Cibes

Happiness and variability

Parents today are happier than non-parents, studies suggest

by Sharon Jayson, USA Today, 5 March 2012

This article drew commentary on Andrew Gelman's blog (Happy news on happiness; what can we believe?, 7 May 2012). Gelman notes that the article concludes with the following quote:

The first child increases happiness quite a lot. The second child a little. The third not at all.

The quote is attributed to Mikko Myrskylä, the coauthor of A Global Perspective on Happiness and Fertility, one of the two studies described in the article.

Gelman then complains, "As a statistician, I hate hate hate hate hate when people ignore variability and present results deterministically. The above statement might be an accurate summary of average patterns but is certainly not true in every case!"

Submitted by Paul Alper

House cuts American Community Survey

“Operating in the dark”, Editorial, New York Times, May 13, 2012

“Does government knowledge mean government intrusion?”, by Suzy Khimm, Washington Post, May 13, 2012

Last week the U.S. House of Representatives voted to cut funds for the American Community Survey and at least part of the Economic Census. The former, “a bipartisan creation” in 2005, provides annual updates of economic, demographic and housing characteristics, which supplement the decennial census information. Let’s see what the Senate does ….

See the U.S. Census Bureau Director’s statement (and video) here.

A blog post from the American Statistical Association website can be found here. It calls the vote "a blow to smart, efficient government and data-driven decisionmaking."

Submitted by Margaret Cibes

Falling survey response rates

Political surveys survive response fall-off, Pew finds

by Scott Clement, Behind the Numbers blog, Washington Post, 15 May 2012

When I discuss polling in my introductory statistics classes and mention random digit dialing, I like to reference the sidebar "How the Poll Was Conducted" that accompanies NYT/CBS polls, since it includes an unusually complete summary of the process. Regarding a poll from this spring, we read:

The sample of land-line telephone exchanges called was randomly selected by a computer from a complete list of more than 72,000 active residential exchanges across the country. The exchanges were chosen so as to ensure that each region of the country was represented in proportion to its share of all telephone numbers.

Within each exchange, random digits were added to form a complete telephone number, thus permitting access to listed and unlisted numbers alike. Within each household, one adult was designated by a random procedure to be the respondent for the survey.

To increase coverage, this land-line sample was supplemented by respondents reached through random dialing of cellphone numbers. The two samples were then combined and adjusted to assure the proper ratio of land-line-only, cellphone-only, and dual phone users.

Interviewers made multiple attempts to reach every phone number in the survey, calling back unanswered numbers on different days at different times of both day and evening.

This sounds impressive. Not mentioned, however, is the actual success rate in reaching potential respondents. The Washington Post blog references a recent Pew Research Center report, Assessing the Representativeness of Public Opinion Surveys, which finds that the average response rate to political polls has steadily fallen over the last decade and a half, and is now below 10%! The table below is reproduced from the report.

This sounds truly abysmal. Surprisingly, Pew reports that the accuracy of polling results does not appear to be suffering. To assess this, they conducted surveys using their standard methodology, which involves dialing randomly selected cellphone and land line numbers over a 5-day period, and then weighting results to reflect demographic proportions in the overall population. Interviewers solicited information that could be compared to data from major government surveys where sustained followups produce response rates over 75%. Substantial agreement was found on variables such as gender, age, race, citizenship, marital status, home ownership and health status. One conspicuous exception was education: 39% of respondents in the Pew survey say they graduated from college, as opposed to the 28% figure found by the Current Population Survey.
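The weighting step mentioned above is usually some form of post-stratification. Pew's actual procedure uses several variables and is not described in detail here. The sketch below assumes simple cell weighting on one variable, and it borrows the education shares quoted above only to illustrate the arithmetic. Each respondent in a cell gets weight (population share) / (sample share). The per-cell "yes" rates are hypothetical.

```python
def poststrat_weights(sample_share: dict, population_share: dict) -> dict:
    """Cell weight = population share / sample share."""
    return {c: population_share[c] / sample_share[c] for c in sample_share}

def weighted_estimate(sample_share: dict, weights: dict, cell_rate: dict) -> float:
    """Weighted mean of a per-cell rate (e.g. share answering 'yes' in each cell)."""
    num = sum(sample_share[c] * weights[c] * cell_rate[c] for c in sample_share)
    den = sum(sample_share[c] * weights[c] for c in sample_share)
    return num / den

sample = {"college grad": 0.39, "no degree": 0.61}      # respondents (from the text)
population = {"college grad": 0.28, "no degree": 0.72}  # CPS benchmark (from the text)
support = {"college grad": 0.60, "no degree": 0.45}     # hypothetical per-cell rates

w = poststrat_weights(sample, population)
weighted = {c: sample[c] * w[c] for c in sample}        # now matches the population
print(w)
print(weighted_estimate(sample, {c: 1.0 for c in sample}, support))  # unweighted
print(weighted_estimate(sample, w, support))                          # weighted
```

Weighting corrects the sample only on the variables it uses, and on outcomes correlated with them. It cannot fix differences that are unrelated to the weighting cells.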

Some complementary ideas are discussed in Response rates and nonresponse errors in surveys, by Timothy P. Johnson and Joseph S. Wislar, JAMA, 2 May 2012.

Discussion

Can you suggest possible reasons for the disparity on education in the Pew report?

Submitted by Bill Peterson

Overdiagnosis

Preventing overdiagnosis: how to stop harming the healthy

by Ray Moynihan, et al., BMJ, 29 May 2012

The graphs below are reproduced from the article (full-sized version here). They show mortality rates and rates of new diagnosis for five kinds of cancer over the 30-year period 1975-2005. Observe that while mortality has been roughly constant, new diagnoses are way up:

The authors contend that

Medicine’s much hailed ability to help the sick is fast being challenged by its propensity to harm the healthy. A burgeoning scientific literature is fuelling public concerns that too many people are being overdosed, overtreated, and overdiagnosed. Screening programmes are detecting early cancers that will never cause symptoms or death, sensitive diagnostic technologies identify 'abnormalities' so tiny they will remain benign, while widening disease definitions mean people at ever lower risks receive permanent medical labels and lifelong treatments that will fail to benefit many of them. With estimates that more than $200bn (£128bn; €160bn) may be wasted on unnecessary treatment every year in the United States, the cumulative burden from overdiagnosis poses a significant threat to human health.

Submitted by Paul Alper

Notes

For related discussion, see Chance News 74: Overdiagnosed, overtreated, which described H. Gilbert Welch’s book Overdiagnosed: Making People Sick In The Pursuit of Health. More recently, Welch wrote an op-ed piece entitled If you feel O.K., maybe you are O.K. for the New York Times (27 February 2012).

PSA, overdiagnosis, and anecdotal evidence

With PSA testing, the power of anecdote often trumps statistics

by Richard Knox, NPR: All Things Considered, 28 May 2012

One of the charts in the preceding post concerns prostate cancer. The US Preventive Services Task Force has recently issued a recommendation against routine PSA screening for prostate cancer; NPR reported that story here. The Task Force recommendation statement and related materials are freely available from the Annals of Internal Medicine, where we read

There is convincing evidence that PSA-based screening leads to substantial overdiagnosis of prostate tumors. The amount of overdiagnosis of prostate cancer is of important concern because a man with cancer that would remain asymptomatic for the remainder of his life cannot benefit from screening or treatment. There is a high propensity for physicians and patients to elect to treat most cases of screen-detected cancer, given our current inability to distinguish tumors that will remain indolent from those destined to be lethal. Thus, many men are being subjected to the harms of treatment of prostate cancer that will never become symptomatic.

Such recommendations predictably generate a backlash from those who feel they have benefited from the procedures. The present story chronicles some of these reactions for the case of PSA screening. It quotes one survivor, who believes that surgery after a positive test result saved his life:

My theory on statistics is anybody can look at the same stats and come up with their own opinion. Government does it; each political party does it. Whatever you want it to come up to read, you can fine-tune it and make it come up to that.

Ohio State University psychology professor Hal Arkes finds that for most people, such anecdotal testimony trumps statistical reasoning, which leads to "gross over-estimation of the benefits of PSA screening." He says:

Statistics are dry and they're boring and they're hard to understand. They don't have the impact of someone standing in front of you telling their heart-rending story. I think this is common to just about everybody.

The NPR story concludes with remarks from Michael Barry, who heads the Informed Medical Decisions Foundation in Boston. Barry worries about the reaction to a blanket recommendation from an expert panel. Instead, he recommends that doctors explain individually to patients that the screening has been shown to be of little benefit. He says, "I think many men won't want the test in that circumstance. But some will, and I'm comfortable with that."

Discussion

For most people, do you think their doctor's description of the statistical evidence would be able to counter a compelling anecdote from someone they know?

Submitted by Bill Peterson

Commas and communication

Some comma questions

by Ben Yagoda, New York Times, 25 May 2012

Most of the article is about the use of the comma in (American) English writing. But it includes the following quote from Geron James Spray, an English teacher in Santa Fe, N.M.:

Being an English teacher puts one in an interesting conundrum. Many people think that if you understand what they say or write, then they’ve said it or written it correctly and it needs no correction or improvement. For example, if a student walks into a math class and says, “Five times five equals 20,” then of course it’s the math teacher’s job to correct that student and the student won’t take offense. However, if a student walks into my class and says, “I did that real good,” and I correct her, then she’ll roll her eyes and say, “Oh, whatever, you know what I meant.”

Although not strictly about probability or statistics, it illustrates the difference between two kinds of discourse, scientific and humanistic.

Submitted by Paul Alper

Can exercise be bad?

For some, exercise may increase heart risk

by Gina Kolata, New York Times, 30 May 2012

Is exercise good for everyone? Maybe not, according to a recent analysis. We read here that:

By analyzing data from six rigorous exercise studies involving 1,687 people, the group found that about 10 percent actually got worse on at least one of the measures related to heart disease: blood pressure and levels of insulin, HDL cholesterol or triglycerides. About 7 percent got worse on at least two measures. And the researchers say they do not know why.

The story drew comment on Andrew Gelman's blog Massive confusion about a study that purports to show that exercise may increase heart risk (4 June 2012). Andrew points out that, since we know that individuals vary, we should not be utterly surprised that some people failed to improve or even declined. Furthermore, this is a simple before-and-after observational comparison, and Andrew is unconvinced by assertions that other potential explanations for the decline had been ruled out. See the full post and submitted comments for interesting discussion.

Finally, one NYT reader posted this comment on the article:

It is possible, that 10% of the participants in this study would have showed these symptoms anyway-regardless of their participation in this exercise study. The research design, should have included a "return to baseline" phase. In other words, treatment/exercise should have been removed and participants should have been observed for changes. If this was done, it is not mentioned.
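A simple model makes both Gelman's point and the reader's concrete. Suppose each person's true change on one heart-risk measure is normally distributed around an average benefit. Suppose also that the before-after measurement adds independent normal noise. Some share of people will then appear to get worse even though the average effect is favorable. All numbers below are hypothetical standardized units, not the study's data. Normality and independence are assumptions.

```python
from statistics import NormalDist

def share_apparently_worse(mean_benefit: float, sd_true: float, sd_noise: float) -> float:
    """Share of participants whose measured change points in the harmful direction,
    assuming true changes ~ Normal(mean_benefit, sd_true) and independent
    measurement noise ~ Normal(0, sd_noise). Requires sd_true + sd_noise > 0."""
    total_sd = (sd_true**2 + sd_noise**2) ** 0.5
    return NormalDist(mean_benefit, total_sd).cdf(0.0)

# Real individual variation plus noise (Gelman's point)
print(round(share_apparently_worse(1.0, 0.5, 0.6), 3))
# Everyone benefits identically; noise alone produces apparent "adverse responders"
print(round(share_apparently_worse(1.0, 0.0, 0.78), 3))
```

Both scenarios put roughly 10% of participants on the "worse" side. A before-and-after comparison alone cannot distinguish these scenarios from a genuine harmful response, which is why a control or return-to-baseline phase would help.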

Submitted by Bill Peterson